Key Takeaways from Google I/O

Article By : Rick Merritt

Google showed expanding use of on-device neural networks to make smartphones more useful and private at its annual developer conference

Google expanded its use of deep learning in new smartphones, home displays and Web services. It also revealed new tools and made pledges to secure user privacy.

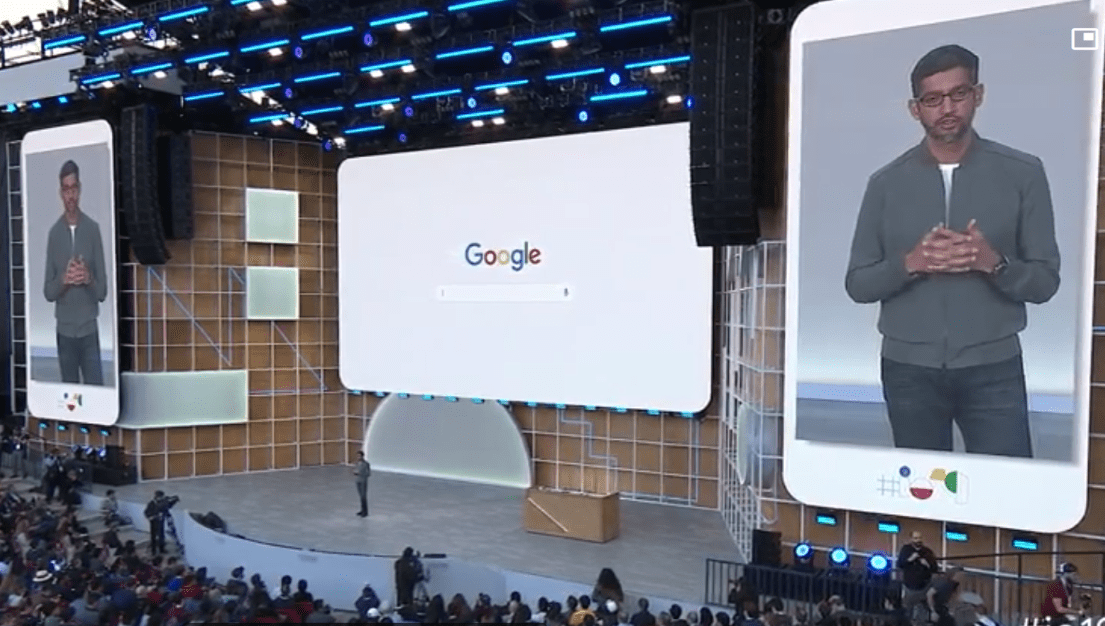

Google has “a deep sense of responsibility to create things that improve people’s lives…and benefit society as a whole,” said Sundar Pichai, Google’s chief executive, in his keynote at Google I/O, the company's annual developer conference.

The chief executive of rival Facebook expressed similar sentiments at his annual developer conference a week ago. Mark Zuckerberg articulated a privacy vision that included end-to-end encryption and private social networks.

The focus on privacy comes in the wake of growing scrutiny from regulators around the world over misuses of personal data and AI. Both tech giants are clearly accelerating their privacy efforts while they drive advances in AI that use similar emerging techniques.

At the event, the search giant backed 5G and foldables as important handset trends in 2019. It launched two low-cost LTE handsets and said that later this year it will deliver AI software to make voice interfaces a better alternative to touchscreen displays.

The Pixel 3a handset, which starts at $399, has a 5.6-inch OLED display and 64 GBytes of storage (unformatted). The Pixel 3a XL has a 6-inch display and starts at $479.

Both use Qualcomm’s 10nm Snapdragon 670 SoC with a Category 11/12 LTE modem, though the handsets do not support the modem’s maximum data rates. Both have a 12.2-megapixel rear camera and an 8-megapixel front camera that, thanks to AI and software features, can outperform more expensive handsets such as the iPhone X in some tests.

Google demonstrated a handset that responded quickly to a mix of voice commands and dictation across different applications while understanding some context. The software will be made available for Pixel handsets later this year.

“We envision a paradigm shift,” to voice interfaces, said Scott Huffman, a vice president of engineering for the Google Assistant.

Meanwhile, Google is adding features to the next version of Android to support 5G and foldables. “Android Q is native with 5G. Twenty carriers will launch 5G services this year, and more than a dozen 5G Android phones will ship this year,” said Stephanie Cuthbertson, a senior Android director.

She showed Android Q automatically moving an app session from the outer display to the inner display of a foldable handset when it was unfolded. Some of Samsung's first foldable models, introduced just recently, suffered embarrassing glitches. Nonetheless, she said, “Multiple OEMs will launch foldables this year running Android.”

In addition, Android Q makes it easier for users to access privacy and location controls. It also sports expanded tools for limiting screen time. The updated OS is available as a beta on 21 devices from 12 OEMs.

Separately, Google is rebooting its smart-home brand around the Nest name and team. It announced a $229 home display, the Nest Hub Max, with a 10-inch screen and a 6.5-megapixel wide-angle camera that works with existing Nest security cameras and video doorbells.

AI moves to on-device and self-learning models

Much of the focus at Google I/O was on shrinking neural network models so they can run on handsets and home displays independently of cloud services. For example, Android Q will support speech-transcription models small enough to fit on a smartphone, so audio streams can remain private.

Google’s Assistant now uses a 500-MByte model to parse phonemes into sentences it can understand, down from a 100-GByte model, Pichai said. One text-to-speech model now fits in 100 kBytes, helping low-cost phones handle up to a dozen languages, he added.

Separately, Google is pushing ahead with Duplex, a controversial feature that lets the Assistant make restaurant reservations by phone as if it were a person. Duplex on the Web lets the Assistant make online bookings that users can confirm.

Fairness in AI is a growing concern. Google has developed a tool, Testing with Concept Activation Vectors (TCAV), to detect bias in machine-learning models. In a demo conducted by Pichai, the tool revealed that a model for finding images of doctors unfairly favored men. The company is making the tool open source.
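Testing with Concept Activation Vectors (TCAV) asks how strongly a human-defined concept, such as gender, pushes a model toward a class such as "doctor." A minimal sketch of the scoring idea follows, with two loudly stated assumptions: the concept activation vector (CAV) is taken as the difference of mean activations between concept and random examples (the published method trains a linear classifier instead), and all activations and gradients are made-up toy numbers, not output from any real model. The TCAV score is the fraction of class examples whose class-logit gradient points in the same direction as the CAV.

```python
from typing import List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def concept_activation_vector(concept_acts: List[Vector], random_acts: List[Vector]) -> Vector:
    """Direction from 'random' activations toward 'concept' activations.

    Simplification (assumption): difference of means stands in for the
    normal vector of a trained linear classifier.
    """
    c, r = mean(concept_acts), mean(random_acts)
    return [ci - ri for ci, ri in zip(c, r)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def tcav_score(class_grads: List[Vector], cav: Vector) -> float:
    """Fraction of class examples whose logit rises when activations move along the CAV."""
    positive = sum(1 for g in class_grads if dot(g, cav) > 0)
    return positive / len(class_grads)


# Toy 3-D layer activations (hypothetical numbers, not real model data).
concept = [[1.0, 0.2, 0.0], [0.9, 0.1, 0.1]]   # examples showing the concept
random_ = [[0.1, 0.2, 0.0], [0.0, 0.1, 0.1]]   # random counterexamples
cav = concept_activation_vector(concept, random_)

# Hypothetical gradients of the "doctor" logit w.r.t. the layer, one per image.
grads = [[0.5, 0.0, 0.1], [0.3, -0.2, 0.0], [-0.4, 0.1, 0.2], [0.2, 0.0, 0.0]]
print(tcav_score(grads, cav))
```

A score well above 0.5, checked against CAVs built from several different random sets, would suggest the class prediction leans on the concept.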

Google, Facebook and others are also working on a set of "pre-training" techniques that can shrink the large labeled data sets needed to train neural models. The approach involves blanking out known parts of a data set, such as words in a transcribed audio text, and training the model to predict the missing words.
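The blank-and-predict step can be sketched as a data-preparation function: hide a random subset of tokens and keep the hidden originals as training targets, so no human labeling is needed. This is a simplified illustration, not Google's or Facebook's code. The `[MASK]` token and the 15% rate follow the BERT paper, but BERT's full recipe also swaps some selected tokens for random words or leaves them unchanged, which this sketch omits.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str], mask_prob: float, rng: random.Random
) -> Tuple[List[str], List[Optional[str]]]:
    """Hide a random subset of tokens; return (model input, prediction targets).

    targets[i] holds the original token wherever the input was masked and
    None elsewhere, so a training loss would only count the blanked positions.
    """
    inputs: List[str] = []
    targets: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets.append(tok)
        else:
            inputs.append(tok)
            targets.append(None)
    return inputs, targets


sentence = "the pixel phone transcribes speech on the device".split()
inputs, targets = mask_tokens(sentence, mask_prob=0.15, rng=random.Random(0))
print(inputs)
print(targets)
```

Because the targets come from the raw text itself, any unlabeled corpus can be turned into training examples this way.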

Last year, Google published a widely cited paper on its approach to the technique, which it calls Bidirectional Encoder Representations from Transformers (BERT). The company uses BERT for speech recognition. “Today it helps us better understand context, and we’re working with product teams across Google to use BERT to solve problems,” said Jeff Dean, a senior fellow at Google.

Facebook is applying similar techniques, which it calls self-supervised learning, to problems in text, computer vision, video and speech. For example, such techniques now help identify more than half of the hate speech and other inappropriate content the social network takes down.

In one case, the techniques reduced the amount of transcribed audio needed to train a model from 12,000 hours to just 80 hours while delivering a 9% lower word error rate. “Self-supervised learning is powering most of what we are doing,” in ferreting out unwanted content, said Facebook CTO Mike Schroepfer in a keynote last week.
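Word error rate (WER) is the standard speech-recognition metric: the minimum number of word substitutions, deletions and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words. A minimal sketch using a word-level edit distance follows; the example sentences are hypothetical. Facebook's quoted "9% lower" figure is not specified as relative or absolute, so the helper for a relative reduction is only one possible reading.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = fewest edits turning the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub_cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,             # deletion
                dist[i][j - 1] + 1,             # insertion
                dist[i - 1][j - 1] + sub_cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / len(ref)


def relative_reduction(baseline: float, improved: float) -> float:
    """Relative improvement between two error rates."""
    return (baseline - improved) / baseline


# One deletion ("a") plus one substitution ("two" -> "you") over 5 reference words.
print(word_error_rate("book a table for two", "book table for you"))
```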

Separately, Google provided more information about the surprisingly high price and performance of its TPU cloud services for deep learning, which are now moving from an alpha to a beta offering.

For example, a bank of more than 1,000 of Google’s third-generation TPU chips working at reduced precision can deliver the equivalent of 100 petaflops. In tests, such systems have trained a ResNet-50 image-recognition model on the ImageNet dataset in two minutes and the BERT language model in 76 minutes.
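On second- and third-generation TPUs, the reduced-precision format is bfloat16: it keeps the 8-bit exponent of a 32-bit float, and so its dynamic range, but only 7 explicit mantissa bits, halving the memory and bandwidth per value. The sketch below shows the conversion by keeping the top 16 bits of a float32 with round-to-nearest-even. It is an illustration of the format only, not TPU code, and it deliberately skips NaN handling.

```python
import struct


def float32_bits(x: float) -> int:
    """Raw IEEE-754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Round a float32 to bfloat16 bits (round-to-nearest-even); NaN handling omitted."""
    bits = float32_bits(x)
    lower = bits & 0xFFFF   # the low 16 mantissa bits that bfloat16 discards
    upper = bits >> 16      # sign, 8 exponent bits, 7 mantissa bits
    # Round up if the discarded part is above half, or exactly half and upper is odd.
    if lower > 0x8000 or (lower == 0x8000 and (upper & 1)):
        upper += 1
    return upper & 0xFFFF


def bfloat16_to_float(b: int) -> float:
    """Widen bfloat16 bits back to a float by zero-filling the low 16 bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


for value in (1.0, 3.14159, 1e-3):
    b = to_bfloat16_bits(value)
    print(f"{value!r:>10} -> 0x{b:04X} -> {bfloat16_to_float(b)!r}")
```

The round trip for 3.14159 lands on 3.140625, showing the roughly two to three significant decimal digits that bfloat16 keeps; neural-network training generally tolerates that loss.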

Prices are similarly impressive. Access to TPUs starts at $24/hour for a version-2 cluster with 32 cores and ranges up to $384/hour for a cluster with 512 cores. Prices for systems using the third-generation TPU are higher, starting at $32/hour for 32 cores.
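Dividing the quoted hourly prices by core count shows how the pricing scales: version-2 access costs a flat $0.75 per core-hour at both ends of the range, while third-generation access starts at $1.00 per core-hour, about a third more. The sketch below only uses the three price points given in the text; the dictionary layout is an illustrative choice.

```python
# Hourly prices quoted in the text: (TPU generation, cores) -> USD per hour.
HOURLY_PRICES = {
    ("v2", 32): 24.0,
    ("v2", 512): 384.0,
    ("v3", 32): 32.0,
}


def price_per_core_hour(generation: str, cores: int) -> float:
    """USD per core per hour for one of the quoted configurations."""
    return HOURLY_PRICES[(generation, cores)] / cores


for (gen, cores), price in HOURLY_PRICES.items():
    print(f"TPU {gen}, {cores} cores: ${price:,.0f}/hour = "
          f"${price_per_core_hour(gen, cores):.2f} per core-hour")
```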

Users can sign long-term contracts for access to TPUs. Prices for version-2 TPUs range from $132,451 for a year’s access to a 32-core cluster to a whopping $4,541,184 for three years’ access to a 512-core version. Prices for extended access to the third-generation TPU are higher.
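Comparing the contract prices with the hourly rates suggests how large the term discounts are. Assuming, as a simplification the text does not state, that a customer would otherwise pay the hourly rate around the clock for 365-day years, the one-year 32-core contract works out to about 37% off and the three-year 512-core contract to 55% off.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: round-the-clock use, no leap days


def implied_discount(hourly_price: float, years: int, contract_price: float) -> float:
    """Fraction saved by a term contract versus paying the hourly rate nonstop."""
    on_demand_total = hourly_price * HOURS_PER_YEAR * years
    return 1 - contract_price / on_demand_total


one_year_32 = implied_discount(24.0, 1, 132_451)        # version-2, 32 cores
three_year_512 = implied_discount(384.0, 3, 4_541_184)  # version-2, 512 cores
print(f"1-year, 32 cores:  {one_year_32:.1%} off on-demand")
print(f"3-year, 512 cores: {three_year_512:.1%} off on-demand")
```

Real workloads rarely keep a cluster busy every hour, so the effective saving for a given customer depends on utilization.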
