AI and Ethics

Article By : Nitin Dahad, EE Times

As the hype train abandons IoT and sets its sights on AI, Nitin Dahad ponders whether we have cause for concern with regard to AI and its ethics.

Around five years ago, everyone was talking about IoT and how it was going to change everything as we connect billions of devices. We seem to go through cycles in which a technology gets heavily hyped, and that is what appears to be happening right now with AI (artificial intelligence).

In my 33-year career in the electronics and semiconductor industry, I’ve seen three of the big changes in the evolution of technology — first the era of the microprocessor, then the internet, and then mobile. And now, as Rick Merritt said recently, “It’s the age of AI.” In his article he cites Aart de Geus, co-chief executive of Synopsys, indicating that AI will drive the semiconductor industry for the next few decades because big data needs machine learning and machine learning needs more computation, which generates more data.

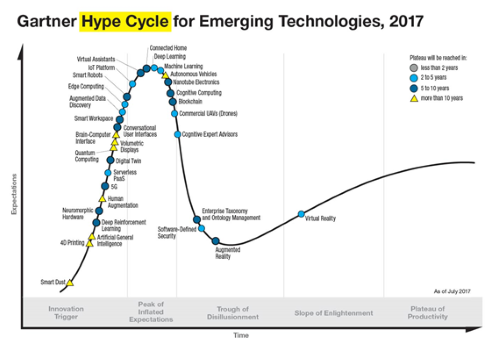

Indeed, we appear now to be on the upward slope of the Gartner hype cycle for AI, but unlike the periods when the previous technologies were being introduced, this time is different: I’ve never seen so much debate on the ethics of a technology. AI will most likely change many things. Autonomous vehicles, military and industrial drones, robots and many other applications in areas like healthcare, government and city functions are all likely to be affected.

It’s so important that the UK government has this week released a 183-page report covering the accountability of AI systems, regulation and ethics, as well as other topics such as investment and skills in AI research, innovation and commercialization.

“The UK contains leading AI companies, a dynamic academic research culture, and a vigorous start-up ecosystem as well as a host of legal, ethical, financial and linguistic strengths. We should make the most of this environment, but it is essential that ethics take centre stage in AI’s development and use,” said Lord Clement-Jones, the chairman of the House of Lords Select Committee on Artificial Intelligence.

“AI is not without its risks and the adoption of the principles proposed by the committee will help to mitigate these,” Clement-Jones said. “An ethical approach ensures the public trusts this technology and sees the benefits of using it. It will also prepare them to challenge its misuse. We want to make sure that this country remains a cutting-edge place to research and develop this exciting technology. However, start-ups can struggle to scale up on their own.”

Among its many recommendations, the committee’s report says transparency is needed in AI, and that a voluntary mechanism should be established to inform consumers when AI is being used to make significant or sensitive decisions. It also says it is not currently clear whether existing liability law will be sufficient when AI systems malfunction or cause harm to users, and clarity in this area is needed.

Can AI systems be transparent and intelligible? Is there a level of technical transparency enabling systems to be interrogated to challenge why a particular decision was made by an AI system?

There is detailed discussion of this, and of the accountability of AI systems. In many deep learning systems, information is fed through many different layers of processing to arrive at an answer or decision, which can make the system look like a black box: even its developers cannot always be sure which factors led it to decide one thing over another.

Timothy Lanfear, director of the EMEA Solution Architecture and Engineering team at Nvidia, giving evidence to the committee, said he does not think such systems are beyond inspection. He said machine learning algorithms are often much shorter and simpler than conventional software code and are therefore in some respects easier to understand and inspect. “We are using systems every day that are of a level of complexity that we cannot absorb,” Lanfear said. “Artificial intelligence is no different from that. It is also at a level of complexity that cannot be grasped as a whole. Nevertheless, what you can do is to break this down into pieces, find ways of testing it to check that it is doing the things you expect it to do and, if it is not, take some action.”
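To make the idea of "testing it to check that it is doing the things you expect" concrete, here is a minimal Python sketch of a behavioral test that treats a model purely as a black box. Everything in it is an assumption made for illustration: the `opaque_loan_model` stand-in, its inputs, and the expectation being checked (a higher income should never lower the score) do not come from the report or from Nvidia.

```python
# Hypothetical stand-in for an opaque model: the test only sees inputs and outputs.
def opaque_loan_model(income, debt):
    return 0.7 * income - 0.9 * debt


def check_monotonic_in_income(model, incomes, debt):
    """Return the (lower, higher) income pairs where the higher income scored lower.

    `incomes` is assumed to be sorted in ascending order.
    """
    scores = [model(income, debt) for income in incomes]
    return [
        (low, high)
        for low, high, s_low, s_high in zip(incomes, incomes[1:], scores, scores[1:])
        if s_high < s_low
    ]


# An empty list means the expectation held for every adjacent pair that was probed.
violations = check_monotonic_in_income(opaque_loan_model, [10, 20, 30, 40], debt=15)
print("violations:", violations)
```

A check like this says nothing about why the model behaves as it does; it only flags inputs where the behavior departs from a stated expectation, which is the "take some action" trigger Lanfear describes.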

The committee acknowledges that achieving full technical transparency is difficult, and possibly even impossible, for certain kinds of AI systems, and would in any case not be appropriate or helpful in many cases. However, there will be particular safety-critical scenarios where technical transparency is imperative, and regulators in those domains must have the power to mandate the use of more transparent forms of AI, even at the potential expense of power and accuracy.

“We believe it is not acceptable to deploy any artificial intelligence system which could have a substantial impact on an individual’s life, unless it can generate a full and satisfactory explanation for the decisions it will take. In cases such as deep neural networks, where it is not yet possible to generate thorough explanations for the decisions that are made, this may mean delaying their deployment for particular uses until alternative solutions are found,” the committee says in the report.

EE Times spoke to Stan Boland, CEO of UK autonomous mobility startup FiveAI, which is focused on developing a full-stack autonomous vehicle capability. Although he said he hadn’t been able to read the report or recommendations, he told us that it’s not as much of a black box scenario as the report seems to be suggesting.

“Our system is extremely modular, and this is essential to explain and debug the system to improve both the technology and service,” Boland said. “Any system that goes on a public road needs to go through lots of verification, and that needs to be independent.”

He added that autonomous driving is not going to be adopted in the same way globally. “It works differently in Mountain View and in London. Every city is going to be different, in terms of how people behave and the conditions in which they have to drive. The important thing for us is to build a company and solution that meets the needs of Europe,” he said.

The UK government report provides a little perspective on global AI research and development. Goldman Sachs data suggests that between the first quarter of 2012 and the second quarter of 2016, the United States invested approximately $18.2 billion in AI, compared with $2.6 billion by China, and $850 million in the UK. Meanwhile, China has explicitly committed itself to becoming a world leader in AI by 2030 and aims to have grown its AI ecosystem to $150 billion by then.

Given the disparities in available resources, the UK is unlikely to be able to rival the scale of investments made in the United States and China. Germany and Canada offer more similar comparisons. Of these two, Germany’s approach is strongly influenced by its flagship Industrie 4.0 strategy for smart manufacturing. This strategy seeks to use AI to improve manufacturing processes, and to produce “smart goods” with integrated AI, such as fridges and cars. Meanwhile, the Pan-Canadian Artificial Intelligence Strategy is less focused on developing AI in any particular sector, but is making $125 million available to establish three new AI institutes across the country, and attract AI researchers to the country.

Processor Limitations?

While deep learning has played a large part in the impressive progress made by AI over the past decade, it is not without its issues. Deep learning requires large datasets, which can be difficult and expensive to obtain, and can require a great deal of processing power. The report says that while recent advances in deep learning have been made possible with the growth of cheap processing power, with Moore’s law starting to break down, the growth in cheap processing power could also slow. Innovations such as quantum computing may yet restore, or even accelerate, the historic growth in cheap processing power, but it is currently too early to say this with any certainty.

Geoff Hinton, a pioneer in deep learning who is still revered in the field today, has warned that the deep learning revolution might be drawing to a close. Others are more optimistic, especially with advances in custom-designed AI chips, such as Google’s Tensor Processing Unit, and more speculatively, advances in quantum computing which might provide further boosts in future.

Criminal Misuse, Regulation

The report delves into areas like criminal misuse, regulation and innovation. The field of “adversarial AI” is a growing area of research, whereby researchers, armed with an understanding of how AI systems work, attempt to fool other AI systems into making incorrect classifications or decisions. Image recognition systems, in particular, have been shown to be susceptible to these kinds of attacks. For example, it has been shown that pictures, or even three-dimensional models or signs, can be subtly altered in such a way that they remain indistinguishable from the originals, but fool AI systems into recognising them as completely different objects.
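The mechanism behind such attacks can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a real image recognition system: the linear "classifier", its weights, the 100-feature input and the `epsilon` value are all hypothetical, chosen only to show how many tiny per-feature changes, each pushed in the direction that hurts the score most, can add up to a different decision.

```python
# Toy illustration only: a hypothetical linear classifier, not a real vision model.

def score(weights, x):
    """Linear score; a positive value is read as 'stop sign', otherwise 'other'."""
    return sum(w * xi for w, xi in zip(weights, x))


def classify(weights, x):
    return "stop sign" if score(weights, x) > 0 else "other"


def sign(v):
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon):
    """Move each feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Assumed values: 100 features with "pixel" intensities around 0.5.
weights = [0.5, -0.5, 0.75, -0.7] * 25
x = [0.5] * 100
epsilon = 0.02  # each feature changes by only 0.02

x_adv = perturb(weights, x, epsilon)

print("original :", classify(weights, x))
print("perturbed:", classify(weights, x_adv))
print("largest single-feature change:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

The attacker in this sketch knows the weights, which mirrors the report's point that these attacks come from researchers "armed with an understanding of how AI systems work".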

Examples include making cars crash using false images to trick the car into stopping suddenly, or triggering the firing of autonomous weapons. Of course, both of these are possible with non-machine learning systems, too (or indeed with human decision-makers), but with non-machine learning approaches the reasoning involved can be interrogated, recovered, and debugged. This is not possible with many machine learning systems.

On regulation, the report observes that in China “less legislation around the application of technology is fueling faster experimentation and innovation, including when it comes to the use of data and AI.” It argues that the UK needs to be careful not to over-regulate by comparison.

The degree of accountability which companies and organizations will face if they violate regulations is also covered. Lanfear of Nvidia said that while the company’s workforce was aware of its ethical principles and how it ensures compliance, he struggled to answer the question because “as a technologist it is not my core thinking.” It is unlikely Lanfear is alone in this, and mechanisms must be found to ensure the current trend for ethical principles does not simply translate into a meaningless box-ticking exercise.

We have the technology for AI to drive the next wave of computing, based on high-end AI processors and chips. But while the semiconductor and computing industries might be pushing the boundaries of what is possible, society will in the end decide how much of that technology takes its place in real world applications — we only have to see the outcry every time there is a crash or fatality involving a self-driving car. Having the debate about the ethics of AI is clearly useful in making us think about how our technology can be safely and usefully deployed — and accepted and adopted by society.

— Nitin Dahad is a European correspondent for EE Times.
