Nvidia Renders Interactive 3D Environments with AI

Article By : Junko Yoshida, EE Times

Nvidia Research announces "video-to-video synthesis"

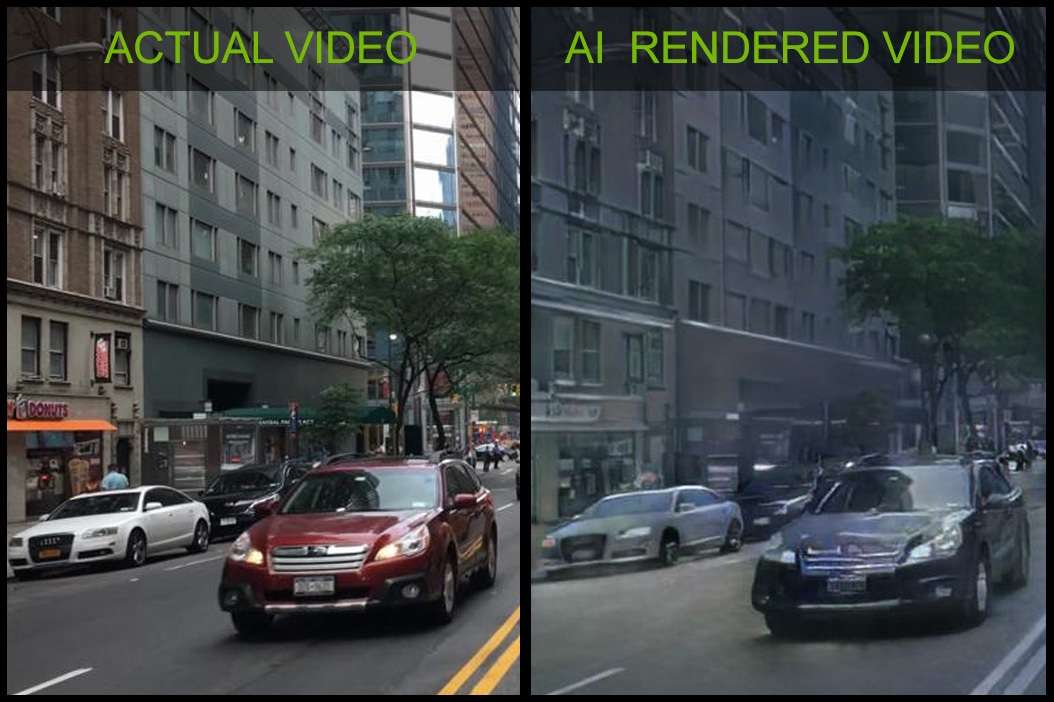

Nvidia Research revealed today that it has developed AI technology that can render entirely synthetic, interactive 3D environments. In creating realistic-looking 3D street scenes, shown in a demo, Nvidia’s R&D team did not use traditional graphics technology. Instead, it used neural networks — specifically, “generative models” — trained by real-world video.

Nvidia detailed its new AI technology in a technical paper entitled “Video-to-Video Synthesis” at the Neural Information Processing Systems conference in Montreal.

(Source: Nvidia)

For a graphic chip company like Nvidia, which has devoted its last 25 years to rendering realistic 3D environments triangle by triangle, the newly developed technology potentially could spell trouble. The company’s own research team has now demonstrated that AI could replace Nvidia’s core graphics business.

Asked if AI could potentially make Nvidia’s graphics technology obsolete, Bryan Catanzaro, vice president of applied deep learning at Nvidia, who led the research team developing this work, cautioned, “This is still early-stage research.”

But he acknowledged, “It could. But that’s why we must be the one to invent this.”

Using video to train a neural network

As Catanzaro explained, the research team’s new technical paper describes how the team succeeded in using a neural network — trained by video — to generate interactive 3D graphics.

The neural net used by the team for this project is called “generative models.”

Rather than using a neural net for classification (i.e. identifying whether an image, for example, is a cat or dog), generative models, according to Catanzaro, are designed to capture a whole image and develop algorithms that analyze and understand relationships between objects within the image. This method generates more elaborate results, such as synthetic 3D images and video sequences.

The trick is that generative models have parameters significantly smaller than the amount of video data fed to train the system. To generate synthetic images, the models must discover and efficiently internalize the essence of the data.

Generative models are widely regarded as one of the most promising approaches to foster machine’s “understanding” of relationships among mountains of data.

How generative models work

Catanzaro said, “Take the example of a face.” Generative models would learn not necessarily what an eye or a nose is, but the relationship and positioning of those different features on the face. It includes, for example, how a nose casts a shadow, or how a beard or hair can alter a face’s appearance. Once the generative models have learned a variety of parameters — including how they relate to one another — and how the data is structured, they can generate a synthetic 3D face.

Nvidia Research team applied the same AI model to street scenes. Generative models analyze objects observed, break them down into ground, trees, sky, buildings and cars, and develop algorithms to understand how each object relates to the others. The models create high-level sketches of urban streets which machines can then use to build and generate synthetic 3D scenes.

The generative neural network learned how to model the appearance of the world, including lighting, materials and their dynamics. “Since the scene is fully synthetically generated, it can be easily edited to remove, modify or add objects,” according to Nvidia.

Revolutionizing how graphics are created

Catanzaro is certain that generative models will eventually change how graphics are created. He explained that this will “enable developers, particularly in gaming and automotive, to create scenes at a fraction of the traditional cost.”

The Nvidia Research team explained that learning to synthesize continuous visual experiences “has a wide range of applications in computer vision, robotics, and computer graphics.” For example, it would be ideal for a plethora of simulation models based on different urban street scenes that carmakers could use to test algorithms developed for autonomous vehicles.

When asked what further developments must be made, Catanzaro told us, “We need to improve real-time aspect of it.”

Meanwhile, the team hopes to add more objects to the model. For example, in a demonstration video, the team shows a variety of urban streets. However, features like tunnels or bridges are absent from the rendered 3D video sequences. This is because the generative models haven’t been trained on such video examples, Catanzaro explained.

Last but not least, “We need to improve the visual fidelity of 3D images — so that they look more convincing,” he added.

In the technical paper, the team concluded:

We present a general video-to-video synthesis framework based on conditional Generative Adversarial Networks (GANs). Through carefully-designed generator and discriminator networks as well as a spatio-temporal adversarial objective, we can synthesize high-resolution, photorealistic, and temporally consistent videos. Extensive experiments demonstrate that our results are significantly better than the results by state-of-the-art methods. Its extension to the future video prediction task also compares favorably against the competing approaches.

However, the team pointed out limitations and future work as follows:

Although our approach outperforms previous methods, our model still fails in a couple of situations. For example, our model struggles in synthesizing turning cars due to insufficient information in label maps. We speculate that this could be potentially addressed by adding additional 3D cues, such as depth maps. Furthermore, our model still cannot guarantee that an object has a consistent appearance across the whole video. Occasionally, a car may change its color gradually. This issue might be alleviated if object tracking information is used to enforce that the same object shares the same appearance throughout the entire video. Finally, when we perform semantic manipulations such as turning trees into buildings, visible artifacts occasionally appear as building and trees have different label shapes. This might be resolved if we train our model with coarser semantic labels, as the trained model would be less sensitive to label shapes.

— Junko Yoshida, Global Co-Editor-In-Chief, AspenCore Media, Chief International Correspondent, EE Times

Subscribe to Newsletter

Test Qr code text s ss