ThinCI Joins AI Rush

Article by: Junko Yoshida, EE Times

AI startup secures serious funding, with Daimler among its backers

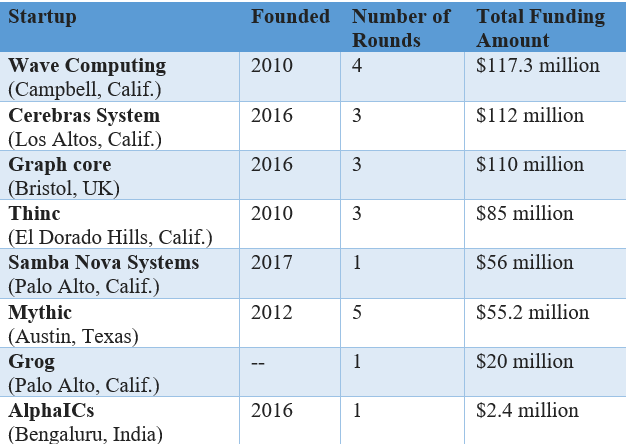

MADISON, Wis. - With a host of chip startups crowding an overheated AI market, each claiming to have developed a unique processing architecture ideally suited for AI and machine learning, how can you tell who’s ahead of whom?

In the absence of commonly applied benchmarks and commercial silicon on the open market, here’s where money speaks volumes. How much investment a startup has been able to raise thus far is a useful yardstick.

With a recently closed Series C round of $65 million, ThinCI Inc., an AI processor company (El Dorado Hills, California), joins an exclusive club of well-heeled AI hardware startups. Other members include Wave Computing, which has raised $117.3 million, Cerebras at $112 million, and Graphcore at $110 million.

Asked about how much investment the company received prior to Series C, ThinCI CEO Dinakar Munagala initially invoked his previous non-disclosure policy. Pressed, however, he cited a total of about $20 million raised before ThinCI got to Series C.

With that modest $20 million in hand, ThinCI, founded in 2010, has already gotten to “first silicon, steady and growing revenue stream, and contracts for multi-year revenue,” explained Munagala. Looking back on the humble beginning that literally started in his bedroom, he noted that the team’s collective bootstrap efforts have propelled ThinCI to “remain super capital-efficient.”

MIPS’ U.K. team joins ThinCI

“Now with market and customer validation done, we are viewing this round [of Series C] primarily as a growth round to fund the expansion of new offices (U.K., Silicon Valley, Utah) and existing facilities (India and El Dorado Hills),” he added.

Today, ThinCI already has about 180 employees, mostly engineers, worldwide. This includes 30 in the U.K. ThinCI, it turns out, picked up MIPS’ U.K. team based in Kings Langley. There, MIPS architects, chip designers, and developers of software compilers cut their teeth developing “auto-grade” MIPS processors now deployed inside Mobileye’s EyeQ chips, explained ThinCI. (Separately, Wave Computing announced in June its acquisition of MIPS Technologies.)

Automotive continues to be a key focus for ThinCI. According to Munagala, the first working silicon, fabricated on a 28-nm process node, is already in the hands of its customers for validation and benchmarking. ThinCI is also “making revenue” from its design-ins with automotive systems, most likely those of Denso, although Munagala declined to comment.

Denso, which participated in the Series B funding, is a lead investor for this most recent oversubscribed round of Series C, along with its subsidiary NSITEXE, Inc., a developer of semiconductor components enabling automated driving, and Temasek.

According to ThinCI, Temasek led a consortium including GGV Capital (U.S./Asia), Wavemaker Partners (U.S./Singapore), and SGInnovate (Singapore). Automotive ecosystem groups joining the round include Japan’s Mirai Creation Fund (mainly funded by Toyota and SMBC and operated by SPARX Group) and Daimler, along with an unnamed major Asia-based electronics company.

Going beyond automotive

In a recent interview with EE Times, ThinCI’s CEO painted a picture of ThinCI as setting itself up to apply the firm’s Graph Streaming Processor (GSP) architecture to all things artificial intelligence, machine learning, neural network, and vision processing. Besides the automotive market, ThinCI is finding opportunities in surveillance and security for the many players in the retail and industrial markets keenly interested in deploying AI.

“We see our sweet spot in the middle,” said Val Cook, ThinCI’s chief software architect, “between small, low-cost ASICs used in consumer edge devices like that of Amazon Echo and data centers,” where Nvidia and Intel dominate in AI training.

Anticipating interest from data scientists and AI app developers in a variety of market sectors, ThinCI offers a software kit that not only supports popular AI frameworks such as TensorFlow, Caffe2, and PyTorch but also lets developers program the core directly in C and C++.

ThinCI supports standard languages, machine-learning frameworks, and extensive libraries and apps. (Source: ThinCI)

Not stopping at silicon development, ThinCI is building a platform roadmap that includes ThinCI GSP SoC modules, PCIe cards, M.2 cards, and appliances for data center, edge, and clients, said the company.

Rob Lineback, senior market research analyst at IC Insights, told us, “The flexibility of software development that ThinCI’s management is promising will be crucial in satisfying a broad range of customers that have little in common except for their burning interest in applying AI, machine learning, neural networks, and vision processing.”

This software strategy would allow equipment developers “to do as much or little custom-code programming for specific applications or use modules for quick product launches,” said Lineback. Flexibility in development is critical for ThinCI “to successfully penetrate applications outside of automotive AI and serve the huge and wide-ranging potential in surveillance and security, consumer electronics, gesture recognition, commercial, and industrial systems.”

It’s all about architecture

ThinCI firmly believes that its GSP architecture is what ultimately sets it apart.

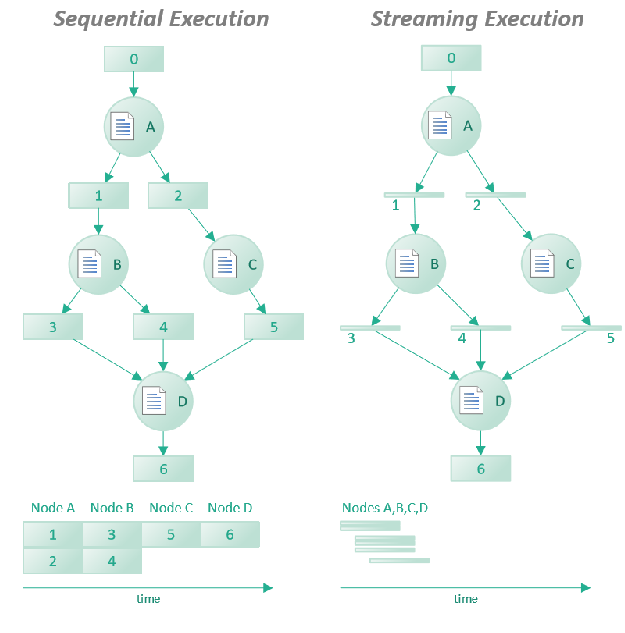

In describing the evolution of computing, the company stressed that the CPU era was all about “single-thread” pipeline computing. In the GPU era, data parallelism came into focus, with many computing units working on a single task. Now, in the era of AI, which ThinCI calls the “GSP era,” many tasks run concurrently to provide “task + data” parallelism.

ThinCI highlighted that one advantage of the GSP architecture is its very small intermediate buffers. Because GSP runs not “instructions” but “graphs” (collections of tasks) in parallel, it doesn’t require the sizable intermediate buffers that traditionally have to be written to and read back from memory.

Lineback is curious whether ThinCI can really deliver the reduction in intermediate buffer memory, lower power consumption, and reduced memory bandwidth that the company claims. He suspects that ThinCI’s streaming-execution “global optimizing” compiler is supposed to extract extra data from each task and shrink the buffers so that the tasks can run concurrently within the GSP architecture. He said, “At least that’s what I think is going to reduce intermediate buffers to about 1% of the size in GPU-/DSP-based sequential execution of AI.”
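To make the buffer argument concrete, here is a minimal Python sketch of the general idea of streaming small tiles through a chain of tasks instead of running each task over the whole input. It is a toy model only: ThinCI has not published its design, so the task chain, the function names (`run_sequential`, `run_streaming`), and the tile size are all assumptions made for illustration. The sketch only counts how many intermediate elements are alive at once; it does not model the concurrent execution of tasks that real hardware would provide.

```python
# Toy illustration only: this is NOT ThinCI's GSP design, compiler, or API.
# Every name below is hypothetical and chosen for this sketch.

def scale(x):
    return x * 2

def offset(x):
    return x + 1

def clamp(x):
    return min(x, 100)

# A three-task chain standing in for a "graph" of tasks.
STAGES = [scale, offset, clamp]


def run_sequential(data):
    """Run each task over the whole input before starting the next task.

    Every stage materializes a full-size intermediate buffer.
    Returns (results, peak number of intermediate elements held at once).
    """
    peak = 0
    current = list(data)
    for stage in STAGES:
        current = [stage(x) for x in current]  # full-size intermediate buffer
        peak = max(peak, len(current))
    return current, peak


def run_streaming(data, tile=4):
    """Push one small tile through all tasks before fetching the next tile.

    Only a tile-sized intermediate buffer is alive at any time.
    Returns (results, peak number of intermediate elements held at once).
    """
    peak = 0
    results = []
    for start in range(0, len(data), tile):
        chunk = data[start:start + tile]
        for stage in STAGES:
            chunk = [stage(x) for x in chunk]  # tile-sized intermediate buffer
            peak = max(peak, len(chunk))
        results.extend(chunk)
    return results, peak


if __name__ == "__main__":
    data = list(range(400))
    seq_out, seq_peak = run_sequential(data)
    str_out, str_peak = run_streaming(data, tile=4)
    assert seq_out == str_out  # both schedules compute the same result
    print(f"sequential peak buffer: {seq_peak} elements")
    print(f"streaming peak buffer:  {str_peak} elements")
    print(f"ratio: {str_peak / seq_peak:.0%}")
```

With 400 inputs and a tile of 4, the streaming schedule holds 1% as many intermediate elements as the sequential one. Those sizes were picked to echo Lineback’s “about 1%” figure and are not a measurement of any chip. Whether a real compiler can find such a schedule for an arbitrary neural-network graph is exactly the open question the analysts raise.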

Analysts remain skeptical…

But without seeing the GSP in action, analysts remain skeptical of many ThinCI claims.

Kevin Krewell, principal analyst at Tirias Research, told us, “I cannot corroborate ThinCI claims at this point, but I will allow that data flow (graph processing) architectures will be major competitors for machine-learning designs.” He added, “From my conversations with ThinCI, they seem to understand the issues and have strong support from companies like Samsung. The key to their success will be development tools.” Calling the battle at least 50% about software, Krewell said, “They seem to understand the issues but still need to overcome Nvidia’s head start with CUDA.”

Linley Gwennap, principal analyst at The Linley Group, pointed out that ThinCI certainly is not alone in designing a unique architecture for AI processing.

“[Because] ThinCI has released few details on its architecture or products, assessing the pros and cons of its design remains impossible,” he said. “Other companies have adopted similar principles of developing a specialized instruction set, creating massive parallelism, optimizing for streaming data, and enabling access through standard neural-network frameworks.”

In short, “The field of AI accelerators is at the frontier of processor design — a Wild West, if you will,” said Gwennap. “People are inventing many new architectures and new names for them.”

Just to name a few, “Graphcore calls its design an Intelligence Processor, AlphaICs calls theirs an Agent Processor, ThinCI uses Graph Processor, Wave has a DataFlow Processor, Intel offers a Neural Network Processor, and so on. Mythic uses the term ‘Intelligence Processor’ for an architecture that is vastly different from Graphcore’s.”

Gwennap added, “So the names don’t really mean anything right now. Maybe in three years or so, we will be able to better construct a taxonomy, but for now, I just place all of these designs in the general category of AI accelerators.”

Of course, the proof will come in the performance per watt that the chip can deliver, which has yet to be disclosed, said Gwennap.

…but investment speaks volumes

That said, Gwennap noted, “The good news here is that ThinCI has working silicon and was able to raise a large investment round from VCs and strategic investors (i.e., potential customers). These investors have surely reviewed the product details, so their investment adds credibility.” He believes that this round of funding was critical for ThinCI to reach its next milestone: delivering a credible hardware and software solution to the market.

EE Times reached out to a few investors who participated in this round of funding in ThinCI and asked what prompted them to invest in the startup.

Steve Leonard, founding CEO of SGInnovate, told us, “In the last few decades, we have seen an explosive growth in data collected and increasingly sophisticated algorithms to derive meaningful information from this data more quickly. Unfortunately, the evolution of hardware has progressed at a much slower pace.”

He added, “We feel that ThinCI’s GSP-based chips offer a powerful alternative to products in the market by optimizing graph and data-stream algorithms that are being deployed across more systems. This allows ThinCI to be positioned at the forefront of the latest developments in cloud computing, edge computing, EV, and AV, just to name a few.” SGInnovate is a private organization wholly owned by the Singapore government.

Meanwhile, SPARX Group, which operates the Mirai Creation Fund in Japan, told us that the investment group particularly values ThinCI’s “highly skilled management team with years of experience in massively parallel processing architectures and the software structures to execute on these computing engines.” The group also likes the progress that ThinCI has been making, describing the startup as being “in the final phase of developing its artificial intelligence and vision processing solutions.”

— Junko Yoshida, Global Co-Editor-In-Chief, AspenCore Media, Chief International Correspondent, EE Times
