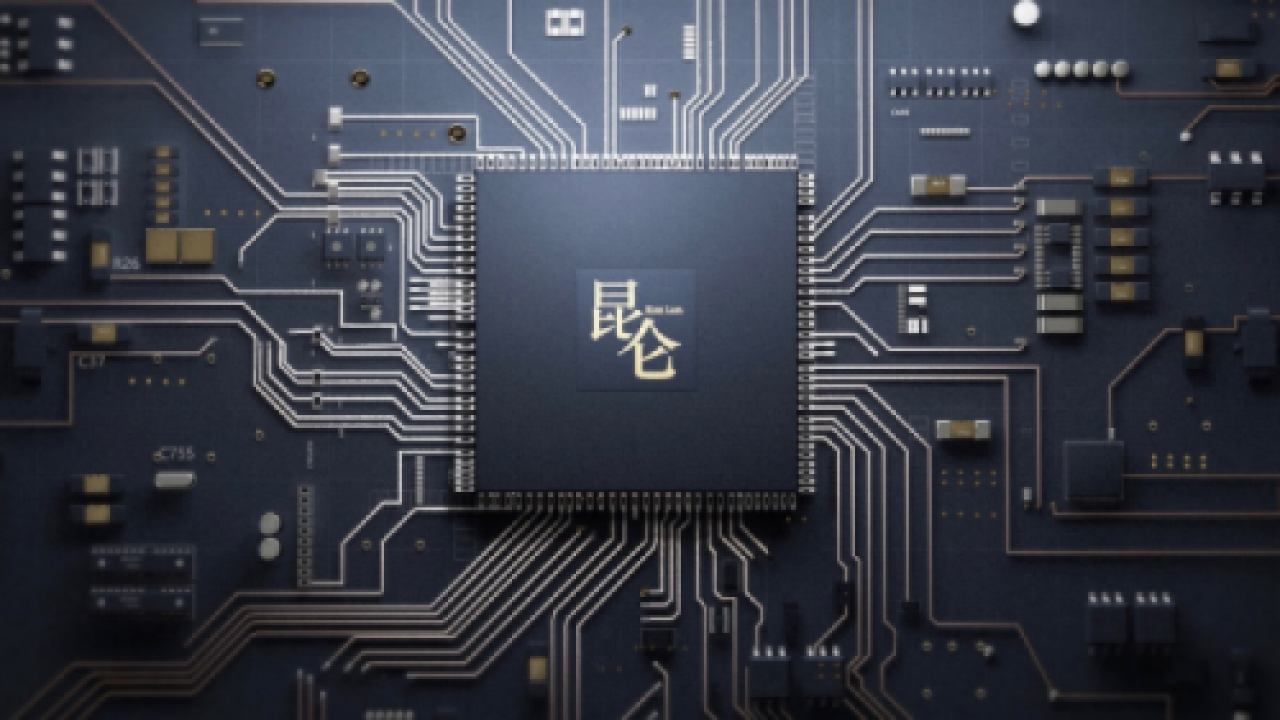

Baidu Announces AI Accelerator Kunlun

Article by: Rick Merritt

A 260 TOP/s chip consuming 100W, built on Samsung's 14nm process, likely to use HBM2 memory

SAN JOSE, Calif. — China’s Baidu followed in Google’s footsteps this week, announcing it has developed its own deep learning accelerator. The move adds yet another significant player to a long list in AI hardware, but details of the chip and when it will be used remain unclear.

Baidu will deploy Kunlun in its data centers to accelerate machine learning jobs for both its own applications and those of its cloud-computing customers. The services will compete with companies such as Wave Computing and SambaNova, which aim to sell business users appliances that run machine-learning tasks.

Kunlun delivers 260 tera-operations per second while consuming 100 watts, making it 30 times as powerful as Baidu’s prior FPGA-based accelerators. The chip is made in a 14nm Samsung process and consists of thousands of cores with an aggregate 512 GBytes/second of memory bandwidth.

Baidu did not disclose its architecture, but like Google’s Tensor Processing Unit, it probably consists of an array of multiply-accumulate units. The memory bandwidth likely comes from use of a 2.5D stack of logic and the equivalent of two HBM2 DRAM chips.
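The arithmetic behind that guess can be sketched in a few lines of Python. This is a back-of-envelope check, not anything Baidu published: the HBM2 figures (a 1,024-bit interface per stack at up to 2 Gbit/s per pin) are the standard's peak numbers, and the constant and function names are illustrative.

```python
# Back-of-envelope check of Kunlun's announced figures.
# Assumption (NOT disclosed by Baidu): the memory is HBM2, with a
# 1,024-bit interface per stack running at up to 2 Gbit/s per pin.

KUNLUN_TOPS = 260        # announced peak, tera-operations/second
KUNLUN_WATTS = 100       # announced power consumption
KUNLUN_MEM_GBYTES = 512  # announced aggregate memory bandwidth, GB/s

HBM2_BUS_BITS = 1024     # interface width per HBM2 stack (assumed)
HBM2_PIN_GBITS = 2.0     # peak data rate per pin, Gbit/s (assumed)


def hbm2_stack_bandwidth(bus_bits=HBM2_BUS_BITS, pin_gbits=HBM2_PIN_GBITS):
    """Peak bandwidth of one HBM2 stack in GB/s (bits -> bytes is / 8)."""
    return bus_bits * pin_gbits / 8


per_stack = hbm2_stack_bandwidth()
stacks_needed = KUNLUN_MEM_GBYTES / per_stack
tops_per_watt = KUNLUN_TOPS / KUNLUN_WATTS

print(f"One HBM2 stack:     {per_stack:.0f} GB/s")
print(f"Stacks for 512GB/s: {stacks_needed:.0f}")
print(f"Efficiency:         {tops_per_watt:.1f} TOPS/W")
```

Under these assumptions, the announced bandwidth matches two HBM2 stacks running at their peak rate, which is consistent with the estimate above but does not confirm it.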

Kunlun will come in a version for training (the 818-300 chip) and one for less computationally intensive inference jobs (the 818-100). It is intended for use both in data centers and in edge devices such as self-driving cars. Baidu did not comment on when it will offer access to the chip as a service on its Web site or on its plans for merchant sales, if any, to third parties.

Baidu said the chip will support Baidu’s PaddlePaddle AI framework as well as “common open source deep learning algorithms.” It did not mention any support for the wide variety of other software frameworks. It is geared for the usual set of deep learning jobs including voice recognition, search ranking, natural language processing, autonomous driving and large-scale recommendations.

One of the few Western analysts at the Baidu Create event where Kunlun was announced on July 4 described the chip as “definitely interesting, but still [raising] lots of remaining questions.”

“My sense is that they will first leverage it in their data centers and offer it via an AI service that developers can tap into…in particular, it could get optimized for Baidu’s Apollo autonomous car platform,” said Bob O’Donnell, chief analyst at Technalysis Research LLC.

Based on raw specs, Kunlun is significantly more powerful than the second generation of Google’s TPU, which delivers 45 TFlops at 600 GB/s memory bandwidth. However, “you always have to be careful making comparisons, since Baidu apparently didn’t describe what its operations are,” said Mike Demler of The Linley Group.
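To see what the raw-spec comparison amounts to, here is a minimal Python sketch using only the figures quoted in this article. The `Accelerator` class and its field names are hypothetical, and the resulting ratio mixes units: Baidu's figure counts unspecified "operations" while Google's counts floating-point operations, so it should be read as a rough indication at best.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Peak figures as quoted in the article (illustrative container)."""
    name: str
    tera_ops: float       # peak tera-operations/second (precision may differ)
    mem_gbytes_s: float   # memory bandwidth in GB/s

    def ops_per_byte(self) -> float:
        """Peak operations available per byte of memory traffic."""
        return (self.tera_ops * 1e12) / (self.mem_gbytes_s * 1e9)


kunlun = Accelerator("Kunlun", tera_ops=260, mem_gbytes_s=512)
tpu_v2 = Accelerator("Google TPU v2", tera_ops=45, mem_gbytes_s=600)

# Caveat: this divides unspecified "operations" by floating-point
# operations, so the ratio is not a like-for-like comparison.
raw_ratio = kunlun.tera_ops / tpu_v2.tera_ops

for chip in (kunlun, tpu_v2):
    print(f"{chip.name}: {chip.ops_per_byte():.1f} peak ops per byte")
print(f"Raw throughput ratio: {raw_ratio:.2f}x")
```

The ops-per-byte figure is one way to read the two specs together: a higher value means the chip needs more data reuse per byte fetched to stay busy at its peak rate.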

Given it’s still early days for deep learning, Web giants such as Google and Baidu may use a mix of their own ASICs along with GPUs and FPGAs for some time, said Kevin Krewell of Tirias Research.

“In areas where algorithms are changing, it may still be important to use more programmable and flexible solutions like CPUs, GPUs, and FPGAs. But in other areas where the algorithms become more fixed, then ASICs can provide a more power-efficient solution,” said Krewell.

Kunlun is not Baidu’s first hardware initiative. Last year, it launched Duer, its own smart-speaker service, with OEM and silicon partners.

At the Beijing event this week, Baidu also announced an upgrade of its machine-learning service called Baidu Brain 3.0, supporting 110 APIs or SDKs including ones for face, video and natural language recognition. Users of Baidu’s EasyDL tool, which creates computer vision models for the service, include one unnamed U.S. company deploying it at checkout stands in more than 160 grocery stores to check for unpaid products on the bottom shelf of a shopping cart.

— Rick Merritt, Silicon Valley Bureau Chief, EE Times
