Who’s at Fault When AVs Attack?

By Junko Yoshida

Accidents will happen, but agreed-upon standards could go a long way toward building regulator and consumer confidence in robocars.

Robocars will not be accident-free.

For regulators who harbor hopes of fostering a future of autonomous vehicles (AVs), this is a political reality that’s likely to haunt them. For the public, it’s a psychologically untenable prospect, especially if a robocar happens to flatten a loved one.

From a technological standpoint, though, this inevitability is the starting point for engineers who want to develop safer AVs.

“The safest human driver in the world is the one who never drives,” said Jack Weast, Intel’s senior principal engineer and Mobileye’s vice president for autonomous vehicle standards. He delivered the quip in a tutorial video explaining what the company’s Responsibility-Sensitive Safety (RSS) entails.

“[Weast] is right,” Ian Riches, vice president for the global automotive practice at Strategy Analytics, told EE Times. “The only truly safe vehicle is stationary.”

So, if we hope to see commercially available AVs that will actually run on public roads, what must happen?

Before the AV ecosystem can answer that question, it must open a long-overdue dialog on another: Who’s to blame when a robocar kills a human? 2020 will be the year the industry at last confronts that demon.

On one hand, AV technology suppliers love to cite such figures as the “1.35 million annual road traffic deaths” reported by the World Health Organization (WHO) when they pitch their highly automated technologies as the ultimate solution to road safety. Eager to paint a rosy picture of a zero-collision future, they use the stats to explain why society needs AVs.

More than 1.3 million traffic deaths occurred on the world’s roadways last year. (Source: WHO Global Status Report on Road Safety 2018)

On the other hand, most in the technical community labor to avoid answering the question of whom to blame when robocars fail. Daunted by legal questions beyond their power to answer or control, they prefer to defer the blame game to regulators and lawyers.

Against this backdrop, Mobileye, an Intel company, stands out. As Weast told EE Times, “Intel/Mobileye is not afraid of asking a tough question.” In developing RSS, Intel/Mobileye engineers spent a lot of time pondering how safe is safe enough — “the most uncomfortable topic for everyone,” as Weast described it in a recent interview with EE Times.

“We all want to say that autonomous vehicles will mitigate traffic accidents, but there are limitations to the statement for non-zero chance of AV accidents,” he said. “The truth is that there will be an accident. Of course, our goal is to make the chances for accidents as low as possible. But you can’t start AV development from the zero-accident position or by thinking one accident is one too many.”

A predetermined set of rules

Intel/Mobileye led the AV industry by developing RSS, “a predetermined set of rules to rapidly and conclusively evaluate and determine responsibility when AVs are involved in collisions with human-driven cars.”

When a collision occurs, Mobileye wrote, “There will be an investigation, which could take months. Even if the human-driven vehicle was responsible, this may not be immediately clear. Public attention will be high, as an AV was involved.”

Given the inevitability of such events, Mobileye pursued a solution that would “set clear rules for fault in advance, based on a mathematical model,” according to the company. “If the rules are predetermined, then the investigation can be very short and based on facts, and responsibility can be determined conclusively.

“This will bolster public confidence in AVs when such incidents inevitably occur and clarify liability risks for consumers and the automotive and insurance industries.”

Most scientists agree that the zero-collisions goal is impossible even for AVs, which neither drink and drive nor text at the wheel.

“Of course we’d prefer to have zero collisions, but in an unpredictable, real world that is unlikely,” Phil Koopman, CTO of Edge Case Research and a professor at Carnegie Mellon University, told EE Times. “What is important is that we avoid preventable mishaps. Setting an expectation of ‘dramatically better than human drivers’ is reasonable. A goal of perfection is asking too much.”

Even so, the notion of assigning blame for an accident makes everyone uncomfortable.

“The biggest problem that I saw in the original RSS paper is its emphasis on blame,” said Mike Demler, senior analyst at The Linley Group. “It includes mathematical models for defining an AV’s movements and actions, which I see as its strength. But the weakness is that it states, ‘The model guarantees that from a Planning perspective there will be no accidents which are caused by the autonomous vehicle.’”

Put yourself in the consumer’s shoes. If you’re a passenger in an AV, or in a vehicle that might be involved in an accident with one, who — or what — is at fault is the least of your concerns. That’s for the lawyers and insurance companies to determine. You just don’t want to be killed or injured.

If you design autonomous vehicles, though, you can’t afford to disregard culpability.

Demler cited the Safety Force Field paper, which Nvidia published in response to RSS. That paper focuses almost entirely on the mathematical models. “The issues that are more difficult to formalize are what constitutes ‘safe’ driving,” he said.

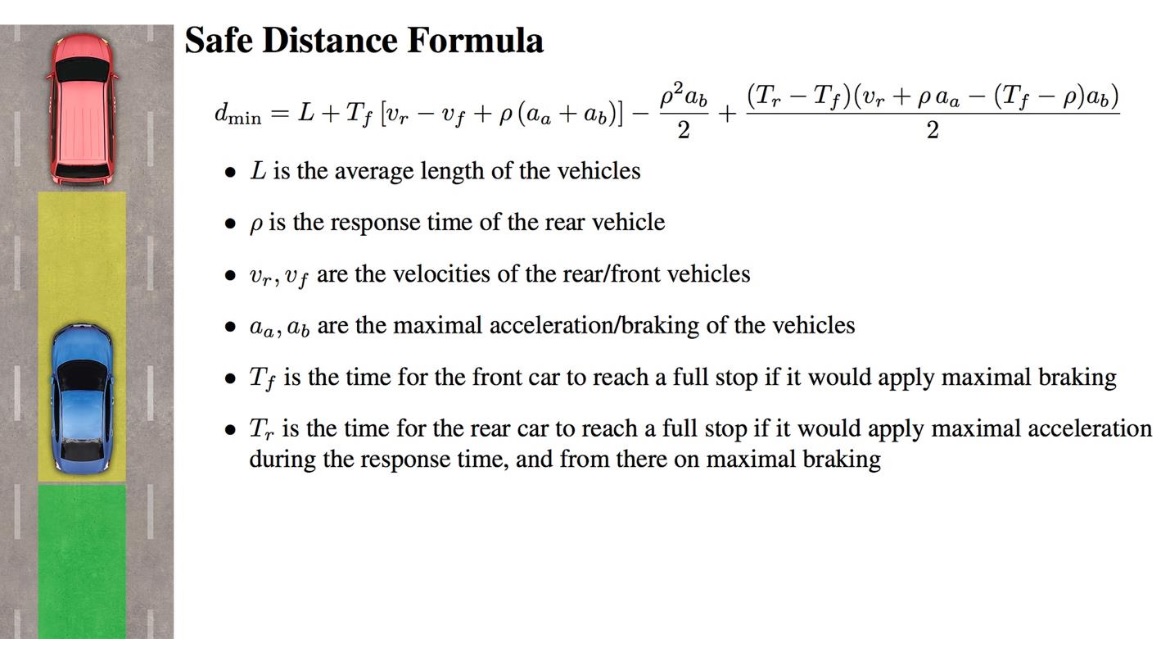

RSS, for example, creates what Weast has called a “safety bubble” around an AV. “How far a vehicle should be from one in front of it seems rather obvious,” said Demler. “But modeling every possible driving scenario and describing a ‘safe’ course of action is impossible.”

Demler noted that, “as powerful as the AI is getting, computers still can’t reason.” AI-driven AVs, therefore, “can only follow rules.”

But human drivers understand that rules are made to be broken. “In some cases, avoiding an accident may require an evasive maneuver that would otherwise be considered unsafe, such as rapidly accelerating around an obstacle even though you’re cutting into another lane at an ‘unsafe’ distance from other vehicles,” said Demler.

The formula shown calculates the safe longitudinal distance between the rear vehicle and the front vehicle. (Source: Intel/Mobileye)
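For readers without the figure at hand, the safe longitudinal distance rule can be sketched in a few lines of code. The expression below follows the formula as given in the published RSS paper. The function name, the parameter names, and the numeric values in the usage example are illustrative assumptions, not Mobileye's implementation or recommended settings.

```python
def rss_safe_longitudinal_distance(v_rear, v_front, response_time,
                                   a_max_accel, a_min_brake, a_max_brake):
    """Minimum safe gap (m) between a rear and a front vehicle in the same lane.

    Worst case assumed by the rule: the front car brakes at a_max_brake,
    while the rear car accelerates at a_max_accel for response_time
    seconds and only then brakes at a_min_brake.
    Speeds are in m/s; accelerations are positive magnitudes in m/s^2.
    """
    if a_min_brake <= 0 or a_max_brake <= 0:
        raise ValueError("braking decelerations must be positive")

    # Speed of the rear car at the end of its response time.
    v_rear_after_response = v_rear + response_time * a_max_accel

    # Distance the rear car covers: during the response time, then while braking.
    rear_travel = (v_rear * response_time
                   + 0.5 * a_max_accel * response_time ** 2
                   + v_rear_after_response ** 2 / (2 * a_min_brake))

    # Distance the front car covers while braking as hard as it can.
    front_travel = v_front ** 2 / (2 * a_max_brake)

    # A negative result means any gap is safe, so clamp at zero.
    return max(0.0, rear_travel - front_travel)


if __name__ == "__main__":
    # Hypothetical values: both cars at 20 m/s, 1 s response time.
    gap = rss_safe_longitudinal_distance(
        v_rear=20.0, v_front=20.0, response_time=1.0,
        a_max_accel=2.0, a_min_brake=4.0, a_max_brake=8.0)
    print(f"{gap:.1f} m")  # 56.5 m
```

The result depends heavily on the chosen acceleration and braking bounds, and those choices bear directly on the trade-off between utility and safety discussed in this article.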

Demler wonders whether we are truly capable of anticipating all the potential scenarios and teaching robocars a safe course of action for each case. It’s likely a rhetorical question.

RSS as ‘an enabler of a discussion’

Meanwhile, not everyone sees RSS as the final word in clarifying blame for accidents.

Rather, RSS “enables a discussion between the industry and regulators as to where the line should be drawn between utility and safety,” said Strategy Analytics’ Riches. “It also allows that line to be a dynamic one that can change across geographies and time.”

Riches acknowledged that there is “an inherent political challenge here,” since “many still cling to the ‘one death is one too many’ mantra. As a theoretical target, [zero accidents] is fine, and it should continue to inspire and drive the industry. As a practical maxim, it is, unfortunately, unworkable.”

He predicted that strictly enforcing a zero-accident standard could delay or even stop the introduction of technologies that would, on balance, save many lives. “Pragmatism isn’t always pretty, but it often ends up hurting fewer people than a beautiful absolute,” he said.

Embracing AI-driven technologies

Expect many more AI-driven technology solutions to emerge in 2020. We’ve already seen powerful (some say biased) surveillance cameras that are capable of identifying, or sometimes misidentifying, individuals in a crowd. Technology-platform companies such as Facebook, Google, and Amazon, meanwhile, have made liberal use of AI-driven technologies in such applications as voice, simultaneous interpretation and translation, and the modification or improvement of images created by individuals.

What separates autonomous vehicles from other apps and products that incorporate AI advancements is that AVs carry the risk of unintended consequences that can cost lives.

It is often argued that technology advancements are an inevitable, immutable fact of life. In this light, despite some inconvenient truths (one being that accidents involving AVs will happen), the tech community should follow the march of progress and not let unattainable perfection — Riches’ “beautiful absolute” — stand in the way.

Indeed, many engineers rationalize AV accidents by saying they are “not a technical issue, but a psychological and societal issue,” Demler observed.

But here’s the rub. “How many people are saying that they ‘need’ AVs?” asked Demler. “The benefits have been overblown. My view is that people will progressively become more accepting of autonomous features, akin to the evolution of cruise control from an analog system to digital ACC, but this leap to a world of self-driving cars is just too great. The only place that will happen is in countries with authoritarian governments.”

Efforts for creating industry standards

While political and social problems might be hard for the tech community to grasp, let alone mitigate, the development of AV technical standards that the industry agrees on could go a long way toward building confidence in robocars among regulators and consumers.

Riches noted that RSS is just one piece in a very large jigsaw. “To continue with that metaphor, it’s probably fair to say that we are not yet 100% certain of what the picture is meant to be and still need to find some of the pieces.”

In a recent interview with EE Times, Riccardo Mariani, vice president of industry safety at Nvidia, laid out a wave of AV safety milestones to reach in 2020. They include several freshly approved IEEE working groups aimed at AV safety — IEEE P2846, IEEE P2851 and IEEE P1228 — in addition to the upcoming UL 4600 and two existing ISO standards: ISO 21448, covering the “Safety of the Intended Functionality” (SOTIF) and ISO 26262, addressing functional safety.

This is not to suggest that these standards cover all that needs to be developed for ensuring the safety of AVs.

For example, as Intel stated in its write-up on RSS, “While the RSS decision-making software is designed to not allow decisions that would result in an accident that would be blamed on the AV driving policy, there could still be accidents caused by mistakes of the sensor system (i.e., the information about the driving environment that is used to base decisions) or mechanical failure.”

Calling RSS “important and very useful,” Carnegie Mellon’s Koopman noted that RSS provides a part of what “safe enough” means in terms of vehicle motion control. RSS assumes that perception and related functions are working properly, he said. By design, then, RSS leaves it “to some other standard to establish the perception parts of ‘safe enough.’”

Riches said, “In terms of other standards-type efforts, the key is probably some form of agreed-on approach [for] actually determining how safe is safe enough.” He cautioned that RSS does not hold the answer to the question of “how many accidents per 1,000 km” society is prepared to tolerate.

“That’s far more in the realms of the political than the scientific,” he said. “What RSS does is provide a framework to enable that discussion to take place.”

Driver’s license for AVs?

Ultimately, Riches said, “the key ‘standard’ may be some form of ‘electronic driving license’ that every proposed automated solution needs to pass before being allowed into the hands of the public. Most people I talk to agree, but they also agree that we likely still don’t understand the problem quite well enough to define that driving license.”

A driver’s test for robocars is a popular topic in the media. Asked about it, Weast said, “Sure, I understand that just like drivers must pass a vision test, people want AVs to demonstrate their ability for perception and good decision-making while driving.”

While RSS isn’t that test, he said, “in spirit, we support the idea” of a driving test for AVs.

Koopman, one of the principal writers of UL 4600, likewise acknowledged that the UL standard “is not a road test.”

Passing such a test — checking the driver’s skills — is only one piece of Riches’ jigsaw puzzle, said Koopman. “In my mind, the driver’s test has three big pieces,” he said. One is a written test to see if you know all the rules of the road. Another is the road test. “Certainly, it makes sense to see if these vehicles can do the basic things.

“But the problem is that it [a road test] doesn’t prove it’s safe, because the third piece [required in obtaining a driver’s license] is a birth certificate to prove you are 16 years old and human, or whatever the local age is.”

The question that must be asked, Koopman said, is whether an AV model has the same “common sense” that a 16-year-old is expected to possess when the teen gets behind the wheel alone and hits the road.

In all fairness to the experts’ earnestness, no one in the scientific and engineering community has yet come up with a credible answer.
