Where Does NXP Stand on its AI Strategy?

Article By : Junko Yoshida

NXP’s AI strategy has been a mystery since CEO Richard Clemmer revealed in 2016 that the company had “no internal technology development going on” in machine learning.

Chip giants such as Nvidia, Intel and Qualcomm rarely miss an opportunity to tout their achievements and technology prowess in artificial intelligence.

The message on AI from NXP Semiconductors, however, is neither so loud nor so clear.

NXP’s AI strategy has been a mystery ever since CEO Richard Clemmer revealed that the company had “no internal technology development going on” in machine learning. That declaration, in October 2016, came as a shock. How in the world was NXP, a leading automotive chip supplier, planning to lead the market in the era of ADAS and AV with “no internal development of machine learning”?

Clemmer brought up AI as an area in which his company could potentially profit, once Qualcomm consummated its NXP acquisition plan. But last summer, Qualcomm walked away from the deal. NXP was left high and dry, with no access to Qualcomm’s AI technologies.

So imagine my surprise last week when I bumped into Ali Osman Ors at an automotive event in Minneapolis (held by boutique AV technology research firm VSI Labs) and read the business card he offered: he is NXP’s director of AI Strategy and Partnerships.

I asked Ors, “Whatever happened to NXP’s internal development for machine learning?”

First, Ors walked back what Clemmer said in 2016. He clarified that what the CEO meant then was, “NXP had no high, open compute-level hardware such as GPU, TPU or any other accelerators” designed for machine learning. “We weren’t doing servers [for data centers] either.” NXP’s focus was [and still is] on embedded systems such as automotive and IoT, Ors explained. “Our goal is to identify areas that it makes sense to apply machine learning.”

Clemmer never divulged back then that NXP already had a small team of resident experts in machine learning, which NXP gained via its Freescale acquisition.

That team was CogniVue Corp., an image cognition IP developer based in Ottawa, Canada, which Freescale bought in September 2015. As a key vision IP partner, CogniVue had been playing a critical role in Freescale’s advanced driver assistance system SoC solutions. By bringing CogniVue’s IP and its development team in-house, Freescale hoped to lead the advance of safety-critical ADAS and eventually the whole autonomous car market with IP that was developed from the start as “automotive qualified.”

As CogniVue’s ownership changed within a few short months (from Freescale to NXP), the roughly 30-member team in Ottawa maintained a low profile.

Ors, as it turns out, was CogniVue’s director of engineering. Once he moved to Freescale in the fall of 2015, he became the director of automotive microcontrollers and processors. There he led hardware, software and systems teams in developing embedded processor technology for AI in autonomous driving applications. He also managed academic and corporate partnerships in neural network and deep learning research and development.

By January 2018, Ors was director of Automotive AI Strategy and Partnerships, Automotive Microcontrollers and Processors. Today he sets the overall strategy for AI and autonomy for the ADAS and AV product line within the Automotive Microcontrollers and Processors Business Line.

Competition against Qualcomm, Intel/Mobileye?

AI, especially deep learning, has played a central role in the intense competition among tech and automotive companies pushing for highly automated vehicles.

NXP’s approach to ADAS and AV chip solutions, however, differs fundamentally from that of its rivals. For NXP, AI is important, but not the end of the story.

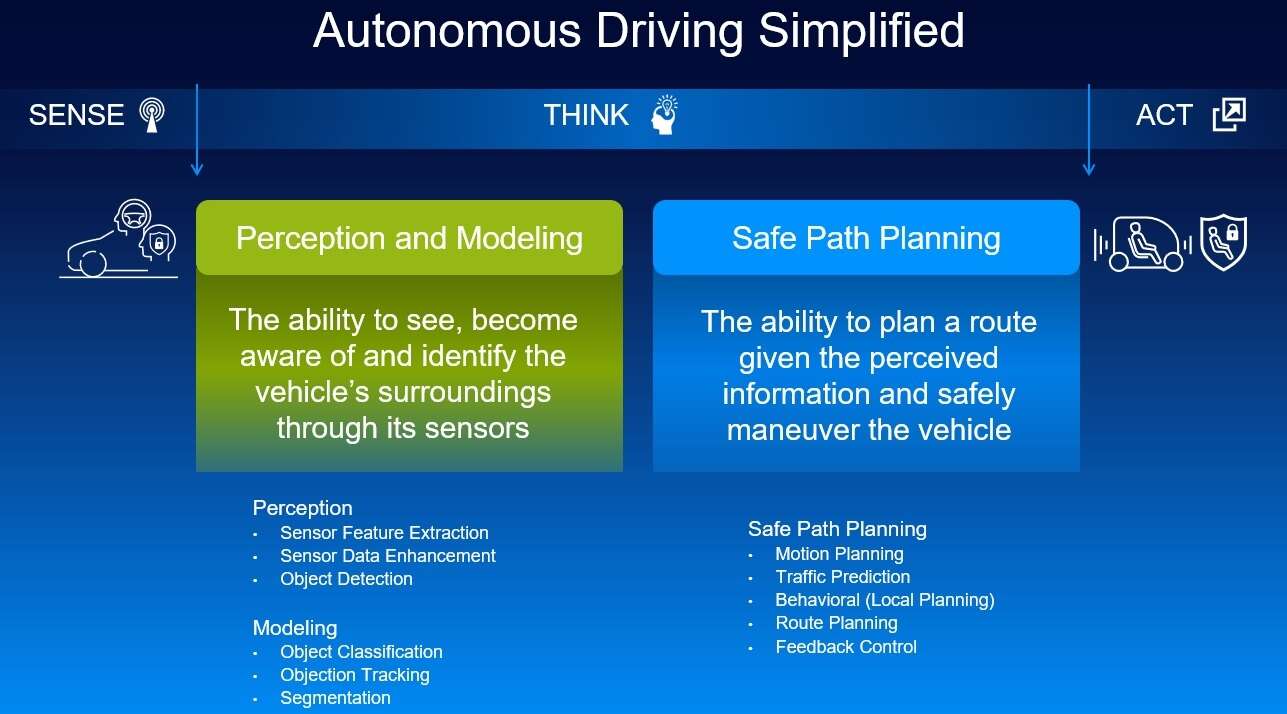

Stressing NXP’s automotive-grade semiconductor pedigree, Ors explained that NXP takes a “modular approach” toward its ADAS/AV solutions. The company’s two prongs are a perception domain (sensors) and a planner domain (actuation). NXP actively applies machine learning to vision-based processing (CogniVue’s vision IP is running on NXP’s S32V vision processor). But NXP hopes to stand out with a separate ASIL-D qualified higher compute-level safety device, in which a rule-based safety checker resides to “augment machine learning,” Ors noted. Referring to “the lack of explainability in AI,” he said NXP seeks to ensure that its rule-based device in the planner domain will be able to check the safety of decisions driven by machine learning.

NXP also expects this modular approach to attract Tier Ones and OEMs who want to plug in their own flavor of software for a perception domain. The modular approach will also allow customers to scale two separate devices (perception domain and planner domain) to varying degrees.

In contrast, the Intel/Mobileye team is investing more heavily in developing a whole perception software stack that will run on top of Mobileye’s EyeQ chips. Ors said, “We offer software stack enablement, but we remain open to our customers’ software stack.”

Applying machine learning to radar

Vision won’t be the only sensor data to which machine learning is applied. Radar is next, according to NXP. With ample radar expertise, NXP is banking on a future for machine learning in radar.

Compared to vision-based machine learning, however, “this is still a very nascent area,” Ors cautioned.

To classify radar-based data, “the datasets are still very limited,” he noted. “We also need to figure out a way to clean up radar signals.” Higher-performance imaging radar must become more broadly available.

A few universities and small labs have been working on point-cloud data generated by radars. But compared to vision-based machine learning, where researchers and vendors have been able to take advantage of widely accessible, cost-effective cameras, radar is more expensive and less accessible, and its use is regulated.

The path to radar-based machine learning will be a slow journey of many steps. But asked how soon machine learning will be used for radar signals, Ors said, “within the next five years.”

AI deployment

While rivals beat their chests over bigger, fancier AI-driven SoCs and accelerators, NXP modestly says its goal is to bring AI closer to the deployment of its own embedded devices.

Instead of one big center for AI development, NXP is taking a more distributed approach. NXP will develop AI closer to where actual devices are being designed, from Germany to Austin, Texas, and Ottawa, said Ors.

To enable AI in ADAS and AV systems, NXP is bringing in a host of external partners. They include Kalray, a spin-off of Grenoble-based CEA, whose Massively Parallel Processor Array (MPPA) processor is used to accelerate vision/radar processing; Green Hills, for RTOS and hypervisor; and Embotech, a spin-off from ETH Zurich that specializes in physics-based motion planning.

NXP is by no means an AI powerhouse in the same sense that Nvidia and Intel strive to be. NXP’s objective is, rather, a roadmap for broader AI deployment in a variety of embedded systems. In NXP’s mind, it takes a village.
