When ADAS Goes People-Blind

Article By: Junko Yoshida

AEB is fundamental to ADAS. But AEB adapted to pedestrians is “an order of magnitude harder than AEB,” an expert says.

For enthusiasts captivated by the promise of automated vehicles (AV), a now-viral video clip (shown below), originally screened at the Flir booth during CES, should come as a wake-up call.

There is good reason to be encouraged by the progress that AV developers are making with autonomous driving systems that keep drivers safe, but anyone getting too enchanted with the current state of autonomous technology should click on the video below, which illustrates the significant problems AVs have assuring the safety of people outside of the car.

Why would ADAS vehicles, supposedly equipped with pedestrian-detecting automatic emergency brakes (AEB), mow down crash-test dummies, one after the other, during a closed-course test?

Flir, a supplier of thermal imaging cameras for the automotive industry, made this clever clip using video footage provided by AAA. Last fall, AAA conducted tests on AEB with pedestrian detection (AEB-P).

The purpose of ADAS is to assist drivers for better road safety. But if automakers really mean it, they should put more money where it’s needed. How about safety first, then autonomy? As Pierre Cambou, principal analyst at Yole Développement, pointed out in his LinkedIn post, “I agree with Flir, pedestrian safety should be the primary focus of ADAS.”

What did AAA find?

Last year AAA tested ADAS vehicles specifically for pedestrian detection, and published devastating results.

By peeling back a few layers of the onion, we can learn why the AEB-P feature in today’s ADAS vehicles is so dismally ineffective.

AAA conducted AEB-P testing last fall on four 2019 model-year vehicles: a Chevrolet Malibu with Front Pedestrian Braking, a Honda Accord with Honda Sensing-Collision Braking System, a Tesla Model 3 with Automatic Emergency Braking and a Toyota Camry with Toyota Safety Sense.

Here are the key findings:

When a test vehicle traveling at 20 mph encountered an adult crossing the road in daylight, it avoided hitting the pedestrian only 40 percent of the time. Worse, when a test vehicle traveling at 20 mph met a child darting into traffic from between two cars, the kid got nailed 89 percent of the time. At 30 mph, none of the test vehicles avoided a collision.

What about an adult crossing the road at night? Forget about it. The pedestrian detection systems proved ineffective.

The results led AAA to issue recommendations that include: “Never rely on pedestrian detection systems to avoid a collision. These systems serve as a backup rather than a primary means of collision avoidance.”

Collision warning vs. collision mitigation

It is important to note the difference between a collision warning system and a collision mitigation system. A warning system will alert the driver to an imminent collision but will take no evasive action such as applying the brakes. A mitigation system will alert the driver and, if no action is taken, hit the brakes to avoid the collision or lessen its severity.
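The distinction can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any of the tested vehicles actually work: time to collision (TTC) is computed from range and closing speed assuming constant velocity, and the thresholds `WARN_TTC_S` and `BRAKE_TTC_S` are hypothetical values chosen only for illustration. A warning-only system never goes past `WARN`; a mitigation system escalates to `BRAKE` when the driver has not reacted.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"
    BRAKE = "brake"


# Hypothetical thresholds, for illustration only (not from any real system).
WARN_TTC_S = 2.6
BRAKE_TTC_S = 1.2


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Constant-velocity TTC; infinite when the gap is not closing."""
    if closing_speed_mps <= 0.0:
        return float("inf")
    return range_m / closing_speed_mps


def decide(range_m: float, closing_speed_mps: float,
           driver_braking: bool, mitigation_enabled: bool) -> Action:
    ttc = time_to_collision(range_m, closing_speed_mps)
    if ttc > WARN_TTC_S:
        return Action.NONE
    # Only a mitigation system brakes, and only if the driver has not reacted.
    if mitigation_enabled and ttc <= BRAKE_TTC_S and not driver_braking:
        return Action.BRAKE
    # A warning-only system (or a driver already braking) gets just the alert.
    return Action.WARN
```

For example, a pedestrian 10 m ahead with a 10 m/s closing speed yields `Action.WARN` from a warning-only system but `Action.BRAKE` from a mitigation system.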

“Mitigation” was what AAA exclusively evaluated in its “pedestrian detection” tests.

To any layman, the sight of an ADAS car not stopping for a pedestrian is a shock. While AAA’s test results have gotten a ton of press coverage, Flir’s video clip triggers fresh thinking about a host of unanswered questions.

All four vehicles tested by AAA use “a camera + radar package.” Given this combination, what elements cause the AEB-P features to function so inconsistently?

- Does the problem come from inadequate resolution in the imaging sensor and/or radars?

- Or does it have to do with sensor fusion algorithms?

- A company like Flir pitches the idea that the use of thermal imaging sensors like theirs helps vehicles see pedestrians at night. We have no doubt about that. But is this a problem we can solve simply by adding another sensor (of a different modality) on top of the sensors already installed in these ADAS cars?

What makes AEB-P so difficult to pull off?

Phil Magney, founder and principal at VSI Labs, told EE Times, “AEB is fundamental to ADAS and you could not even think about doing automated driving without it. Moreover, it is the most important of all ADAS functionalities and is the one application that has the potential to save the most lives.” However, Magney makes a crucial distinction between AEB and AEB-P. AEB adapted to pedestrians, he stressed, is “an order of magnitude harder than AEB.”

So, what makes AEB so hard to do?

Experts often cite the false positives to which radars are prone, and the limited field of view provided by image sensors. Even when radars and cameras are combined, the fused data could still present only a limited understanding of the vehicle’s surroundings. Perhaps most important is the issue of cost. Automakers tend to use less costly sensors for ADAS vehicles. Given that ADAS features are expected in mass market vehicles, it’s unlikely that car OEMs will shell out more money for specialty sensors — whether lidar or thermal imaging — to make AEB-P less likely to fail.

False positives

Magney noted that AEB is hard because “false positives in the context of AEB can in themselves lead to deadly hazards.”

Magney explained that radar is a critical component in AEB systems because of its ability to measure time to collision. But radar is also subject to false positives, mistaking parked cars for dangerous objects, for example. “So, you end up having to filter out a lot of data in the interest of limiting false positives. You also have a lot of noise in radar and this too can lead to false positives.” He said, “This is why you get unusual collision warnings from time to time if your car has a collision warning feature.”
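One common way to limit noise-driven false positives is to require a target to persist across several radar frames before acting on it. The sketch below is a generic M-of-N persistence filter; it is our assumption for illustration, not a description of any supplier’s actual filtering, and `required` and `window` are hypothetical tuning parameters.

```python
from collections import deque


class PersistenceFilter:
    """Confirm a threat only if it was detected in at least `required`
    of the last `window` radar frames (an M-of-N filter)."""

    def __init__(self, required: int = 3, window: int = 4):
        if not 1 <= required <= window:
            raise ValueError("need 1 <= required <= window")
        self.required = required
        self.history = deque(maxlen=window)  # oldest frames drop off automatically

    def update(self, detected: bool) -> bool:
        """Feed one frame's raw detection; return whether the threat is confirmed."""
        self.history.append(detected)
        return sum(self.history) >= self.required
```

A single noisy blip never confirms, while a target seen in three of four consecutive frames does. The trade-off is latency: every frame of confirmation delays braking. At 20 mph (about 8.9 m/s), each 100 ms of added delay means the car travels roughly another 0.9 m before the brakes engage.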

Against the general background of AEB, Magney explained, “AEB-P heightens the performance requirements quite a bit because now you have to identify and track humans in your path.” He acknowledged that radar is getting better “but still lacks the confidence when dealing with humans so you generally couple it with a camera.”

But here’s the thing. “While coupling camera with radar [for] AEB-P is good, it may not be good enough.”

In Magney’s view, there are “so many environmental conditions that limit the camera’s performance and this leads to the poor performance of the current AEB-P systems.”

Narrow field of view

Yole Développement analyst Cambou told EE Times that the safety benefits of AEB systems based on camera, radar, camera+radar or camera+laser-ranger are well documented. The world is seeing “more or less 50 percent less rear-end crashes and fatalities, and 10 to 15 percent less crash/fatalities overall,” he noted.

But when it comes to pedestrian detection, that overall figure of 10 to 15 percent fewer crashes and fatalities isn’t so comforting.

Asked why AEB-P is hard to do, Cambou said the problem lies in “a relatively narrow field of view” in front of the vehicle in first-generation AEB systems.

Those first-generation systems use vision processors such as Intel-Mobileye EyeQ3 (in GM, Ford, VW) or Toshiba Visconti 2 (in Toyota). Referring to those vehicles’ relatively narrow field of view, Cambou said, “This is the primary reason the AEB system cannot understand much more than what is going on in front of the vehicle.”

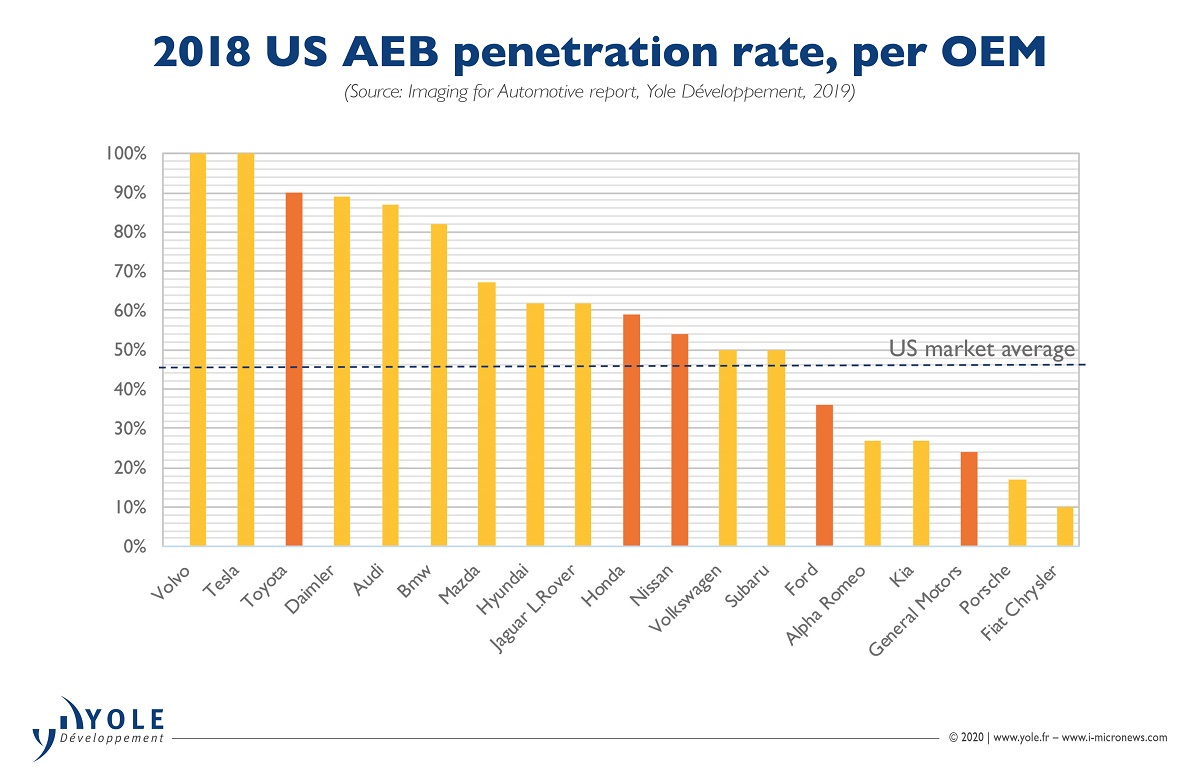

Cambou estimates that first-generation AEB systems are already deployed in roughly six percent of cars on the road and 30 percent of new cars. Putting the effectiveness of first-generation AEB at around 10 to 15 percent, Cambou said this is why cars equipped with AEB in North America and Europe by 2022 will remain far from achieving the often-cited goal of “Vision Zero.”
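A back-of-the-envelope estimate shows why, under the simplifying assumption (ours, not Cambou’s) that the fleet-wide benefit is simply the penetration rate $p$ of equipped cars on the road times the per-vehicle effectiveness $e$:

$$
\Delta_{\text{fleet}} \approx p \times e = 0.06 \times (0.10 \text{ to } 0.15) \approx 0.006 \text{ to } 0.009
$$

That is, roughly 0.6 to 0.9 percent fewer crashes across today’s fleet. Even at full penetration ($p = 1$), the reduction would top out at 10 to 15 percent, leaving 85 to 90 percent of those crashes untouched.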

But as time goes by, things are expected to get better.

“The new generation of AEB systems are based on Intel-Mobileye EyeQ4 or Visconti 4 and they will improve on this FOV parameter, typically by putting more cameras with wider field of views,” Cambou noted.

“Today we do not know about the safety benefit of a triple cameras versus a mono camera, but it should be better.”

Next come third-generation AEB systems. Cambou noted that these will use full-surround cameras. “This is what Tesla will do with its Full Self-Driving (FSD) Computer. Zenuity is also providing such approach to OEMs,” he added. “By being aware of the full environment, the AEB should improve over time. But the question is how quickly?”

For AEB to protect pedestrians from getting hit by an ADAS car, what must happen? Cambou suspects that carmakers would need pressure from regulators or an outcry from the general public.

What do we need for effective AEB-P?

So, what’s needed to make AEB consistently work for pedestrian detection?

Flir is obviously pitching its thermal imaging technology for AEB-P. The company describes a thermal camera capable of offering “complementary data to RGB cameras and radar.” As thermal cameras “see” heat, Chris Posch, Flir’s engineering director responsible for automotive, said, “We can detect pedestrians in challenging conditions including night, through sun and headlight glare and fog.” Flir is claiming it can see up to four times farther than typical headlights illuminate in darkness.

Meanwhile, at CES, Prophesee, a Paris-based startup, showed a video clip created by an unnamed automaker in Germany. It compares an AEB system using a regular frame-based vision camera with another deploying Prophesee’s event-driven camera. The video showed that Prophesee’s camera consistently scored higher in spotting a pedestrian.

Yole’s Cambou sees three ways to attack the AEB-P hurdle.

First, “automakers can use more of the same data, more of the same compute,” he noted. This approach follows Mobileye’s rollout of EyeQ4 and EyeQ5 and Toshiba’s introduction of Visconti 4 and Visconti 5. “The dollar content stays more or less the same,” said Cambou, “at around $150, and we wait for Moore’s Law to improve.”

Second, automakers could seek “better data, and go with more or less the same compute.” Cambou said this approach is “advocated by Flir, Prophesee and solid-state lidar companies.” He said, “The drawback is that it could probably cost a little more at first.” But from a marketing point of view, “These guys have to provide a much better system at current market price.”

The third approach is “better data and better compute,” Cambou noted. Calling it “a new paradigm,” he explained that it’s about combining new sensors with new ways to compute. “I think this is the promise of Neuromorphic sensing and compute. Some companies are already innovating on both sensor and compute… I am thinking of Outsight which brings to market an innovative hyperspectral lidar + perception algorithm.”

What about Flir?

Among solutions available now, thermal cameras hold promise. Compared to regular RGB cameras, VSI Labs’ Magney said, “Thermal is much better at detecting and classifying pedestrians, because classification is based on the heat signature of the subject rather than visible light.”

But the most frequently asked question about thermal cameras is cost. If carmakers add a thermal camera to an ADAS vehicle to enable effective AEB-P, how much would it cost? Flir’s Posch told EE Times, “It will be in the range of hundreds of dollars, not in thousands — which would be the case with lidars.”

While Flir’s thermal cameras are already designed into some models by BMW, Audi and others, they are neither designed nor deployed for AEB-P. Instead, they handle tasks such as detecting animals on the road at night. For AEB-P applications, Flir has developed a new VGA-resolution thermal camera with four times the resolution of current thermal car cameras.

Last fall, Flir’s thermal sensing technology was selected by Veoneer, a tier 1 automotive supplier, for its level-four autonomous vehicle production contract with a top global automaker, planned for 2021.

Can you prove it?

VSI Labs, contracted by Flir, has been working on a proof-of-concept to demonstrate the benefits of thermal sensing for automatic emergency braking. VSI Labs conducted initial tests in December 2019 at the American Center for Mobility (ACM) near Detroit.

VSI Labs’ model for this AEB-P testing used, according to Magney, a single Delphi ESR radar coupled with the Flir camera. “We had our RGB disabled from this test. We did, however, have to fuse other sensor inputs coming from the CAN bus such as inertial, wheel speed, steering angle, pedal position, etc. This was necessary to program the AEB functionality.”
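The general shape of such a radar-plus-thermal pipeline can be sketched as follows. It is a hypothetical illustration, not VSI Labs’ code: thermal-camera pedestrian detections are associated with the nearest radar target inside an azimuth gate, the radar supplies range and closing speed, and CAN-bus signals (ego speed, brake pedal) gate the braking decision. All class names, the gate width, the score cutoff and the TTC threshold are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RadarTarget:
    azimuth_deg: float
    range_m: float
    range_rate_mps: float  # negative when the target is closing


@dataclass
class CameraDetection:
    azimuth_deg: float
    label: str
    score: float  # classifier confidence in [0, 1]


@dataclass
class FusedPedestrian:
    range_m: float
    closing_speed_mps: float
    score: float


def fuse(radar: List[RadarTarget], camera: List[CameraDetection],
         gate_deg: float = 2.0, min_score: float = 0.5) -> List[FusedPedestrian]:
    """Pair each confident 'person' detection with the closest radar target in azimuth."""
    fused = []
    for det in camera:
        if det.label != "person" or det.score < min_score:
            continue
        best: Optional[RadarTarget] = None
        best_err = gate_deg
        for tgt in radar:
            err = abs(tgt.azimuth_deg - det.azimuth_deg)
            if err <= best_err:
                best, best_err = tgt, err
        if best is not None:
            fused.append(FusedPedestrian(best.range_m, -best.range_rate_mps, det.score))
    return fused


def should_brake(peds: List[FusedPedestrian], ego_speed_mps: float,
                 driver_braking: bool, ttc_threshold_s: float = 1.6,
                 min_speed_mps: float = 1.0) -> bool:
    """Use CAN-bus inputs to suppress braking when the driver is already braking or the car is nearly stopped."""
    if driver_braking or ego_speed_mps < min_speed_mps:
        return False
    return any(p.closing_speed_mps > 0 and p.range_m / p.closing_speed_mps <= ttc_threshold_s
               for p in peds)
```

A camera-only or radar-only detection produces no fused pedestrian here; requiring both sensors to agree is one way to trade some sensitivity for fewer false alarms.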

Besides claiming that no passive sensor detects pedestrians better than a thermal camera, Magney pointed to the impact of AI on thermal cameras.

He claimed, “At VSI, we have proven that applying AI to thermal image capture has the capacity to outperform a traditional RGB camera.” VSI Labs trained its neural network using the Flir ADK (automotive development kit) dataset, which includes more than 40,000 annotated thermal images, he noted. VSI also built out the AEB algorithms and then conducted numerous tests at ACM, he explained.

Magney concluded that, generally speaking, the thermal camera was better at spotting and classifying pedestrians in low light and cluttered environments. “Thermal also picked up pedestrians that were partially occluded,” he added.

Beyond that, he said, “What we like about Flir is their Automotive Development Kit as this gives the developer the ability to make their own detection algorithms. What’s more, Flir’s ADK comes with a license.”
