TinyML: Opportunities Bigger than the Challenges

Article By: Rick Merritt

Nearly 200 engineers and researchers gathered in California to discuss forming a community to cultivate deep learning in ultra-low power systems, a field they call TinyML.

SUNNYVALE, Calif. – A group of nearly 200 engineers and researchers gathered here to discuss forming a community to cultivate deep learning in ultra-low power systems, a field they call TinyML. In presentations and open discussions, they struggled to get a handle on a still-immature branch of tech’s fastest-moving area, hoping to enable a new class of systems.

“There’s no shortage of awesome ideas,” said Ian Bratt, a fellow in machine learning at Arm, kicking off a discussion.

“Four years ago, things were getting boring, and then machine learning came along with new floating-point formats and compression techniques—it’s like being young again. But there’s a big shortage of ways to use these ideas in a real system to make money,” Bratt said.

“The software ecosystem is a total Wild West. It is so fragmented, and a bit of a land grab with Amazon, Google, Facebook and others all pushing their frameworks… So how can a hardware engineer get something out that many people can use?” he asked.

An engineer from STMicroelectronics agreed.

“I just realized there are at least four compilers for AI, and the new chips won’t be used by the traditional embedded designer. So, we need to stabilize the software interfaces and invest in interoperability – a standards committee should work on common interfaces,” the STM engineer suggested.

It may be too soon for software standards, said Pete Warden, a co-chair of the TinyML group and the technical lead of Google’s TensorFlow Lite, a framework that targets mobile and embedded environments.

“We blame the researchers who are constantly changing the operations and architectures. They are still discovering things about weights, compression, formats, and quantization. The semantics keep changing, and we have to keep up with them,” Warden said.

“Over the next few years, there’s no future for accelerators that don’t run general-purpose computation to handle a new operation or activation function because two years from now it's likely people will bring different operations to the table,” he added.

A Microsoft AI researcher agreed. “We are very far from where we think we should be, and we won’t get there in a year or two. This was the reason Microsoft invested in FPGAs” to accelerate its Azure cloud services. “We need to build the right abstraction layers to enable hardware innovation…and if there was an open source hardware accelerator, it might help,” he added.

“Maybe a compliance standard is the first step, so researchers get the same experience at the edge as in the cloud,” Bratt of Arm suggested.

“We need robust functional specs for whatever level you live in. If we have them at enough levels, it will give people an entry point to other layers, and this group is the best one to tackle defining them,” said Naveen Verma, a Princeton professor whose research focuses on AI processors-in-memory.

One nirvana—smart embedded voice interfaces

The group heard a talk describing DARPA projects pursuing big AI challenges such as getting swarms of robots to collaborate on tasks and building systems that continually learn in the field. Boris Murmann, a Stanford professor and researcher, advised using existing hardware to explore the research projects and near-term opportunities.

“Once we answer some of these big questions and the software settles down we may find some killer apps and can make custom TinyML systems for them. It’s difficult to get the eggs until the chicken is here,” Murmann quipped.

If engineers create neural network models and accelerators that can run in tens of Kbytes of memory, they will spawn new killer apps, just as 4G smartphones with embedded GPS spawned services such as Uber, observed Evgeni Gousev, a senior director of engineering at Qualcomm Research who co-chaired the event.

“What’s the Palm Pilot or PDA of IoT, the precursor to the mainstream market?” asked Kurt Keutzer, a Berkeley professor and researcher.

“You can work with today’s chips on TinyML… new technologies take ten years and we are only three or four years into this area,” said Zach Shelby, a serial entrepreneur now overseeing Arm’s work with software developers on IoT.

“I’ve spent 20 years working on IoT. One big misconception we had was around seeking a killer app or platform. There are a hundred segments in embedded and no one is killer. We need great apps in many markets,” Shelby said.

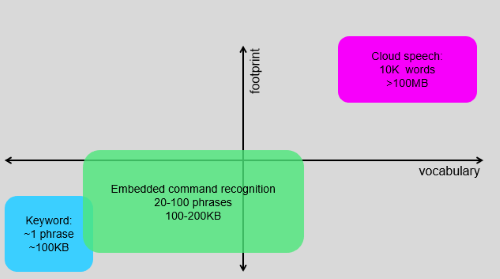

“If we can get the cost and efficiency of speech recognition delivered on a 50-cent chip with a year of battery life, it will go into billions of devices–and that’s a motivating use case,” said Warden, who worked on transcription services for Google’s Pixel phones using neural net models of about 100 Mbytes.

One of the TinyML organizers is building neural net models for just such use cases. Chris Rowen described the work of his startup, BabbleLabs, creating moderately complex models with about 20 layers that can fit into less than 100 Kbytes of memory to bring voice commands to TVs and other embedded devices.

Rowen’s software is running in NXP i.MX, Ambiq Apollo 3 and Tensilica Fusion F1 chips. Engineers can go from a new command set to a trained model in as little as two weeks, although the training itself is said to cost as much as $20,000 in GPU cloud services.

BabbleLabs aims to carve out a unique niche in voice recognition engines for embedded systems based on deep learning. (Source: BabbleLabs)

Many big challenges and one huge opportunity

Someday TinyML systems might be swallowed to detect and even repair polyps, said Microsoft researchers. Indeed, a broad generation of ultra-small and inexpensive systems is still ahead, said Dennis Sylvester, a professor and researcher from the University of Michigan, who calls it IoT2.

“We need an order of magnitude lower power consumption, too…tens of nanowatts to a microwatt is what I’m interested in,” Sylvester said, calling for TinyML systems that run on thin-film batteries.

Jeff Bier, a veteran DSP analyst and founder of the Embedded Vision Alliance, agreed that both power and cost need to come down by “multiple orders of magnitude…The good news is deep neural networks can deliver better results, but they have greater computational requirements,” he added.

Engineers will need to innovate in circuits, architectures and algorithms to meet the challenge, said Marian Verhelst, a professor and researcher at KU Leuven.

“No decision can be made in isolation…so we need to model the whole system for energy versus error rates to find optimal solutions…There’s a strong need for flexible processors that can play with parallelism and memory hierarchies,” she said.

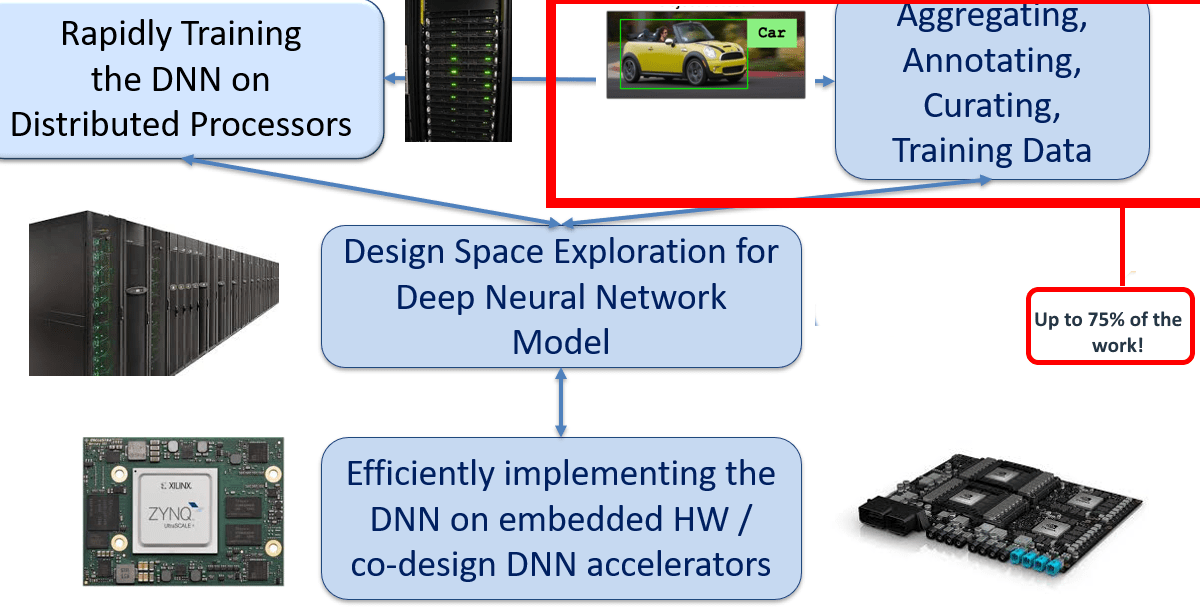

Some say finding, labeling and curating big data sets is the bulk of the problem for deep learning. (Source: Berkeley, Keutzer)

The challenges with silicon and neural net models pale beside the problems of getting and curating the large data sets deep learning requires, said Keutzer of Berkeley. He noted reports that minor changes in data sets can significantly throw off a model’s accuracy.

“We sometimes use engineers and interns to generate some data to get started, but this is not the best approach,” said Christoph Lang, a director of chip design at sensor maker Bosch, calling for data services to fill the gap.

“The open data sets available today are insufficient – the biggest thing is the data problem,” agreed a senior Qualcomm engineer.

A sales manager for one deep learning silicon startup lamented that many potential customers are interested in AI, but their interest fades when they learn it may require large, labeled data sets and data scientists.

The good news is the opportunities seem bigger than the challenges. “We have a once in a lifetime opportunity… We can make edge AI ubiquitous and its potential is hard to overstate,” said Bier.

After a two-day meeting at a Google office here, the group adjourned on an upbeat note. They agreed to hold a second summit next year and reach out broadly for more participants, including some from potential end users.
