Teraki Takes ‘Quantum’ Leap in Edge Data Processing

Article by: Junko Yoshida

Teraki has developed software technology that can resize and filter data, for more accurate object detection and machine learning.

LAS VEGAS – Big data is an essential element in connected devices. Nevertheless, most vendors are struggling to deal with the exponential growth in data volume. Two costs stand out: paying for a CPU powerful enough to process the data inside the system, and sending big data to the cloud for AI training.

One answer comes from Teraki, a startup based in Berlin, Germany. Its mission is to tackle challenges in edge data processing. Teraki applies signal processing so that embedded systems can use incoming data more efficiently, minimizing latency and maximizing algorithm accuracy.

When EE Times first met Teraki last week at CES, we were a little overwhelmed by the trifecta of “big data,” “artificial intelligence” and “quantum computing” that appears to be the foundation of Teraki’s technology.

However, the good news is that Teraki isn’t trying to turn this bewildering combination into a marketing pitch. Rather, Teraki, founded by Daniel Richart — formerly a researcher in Quantum Optics at Max Planck Institute — is focusing on the technology.

In that light, Richart explained that the idea of quantum information theory isn’t actually so far from what’s required for today’s edge computing.

Just as quantum computing needs to find a quality, stable “qubit state” out of a lot of noise plaguing atoms, IoT and electronics manufacturers must be able to “extract information — fast enough, at the quality they need,” said Richart, “for data processing.”

Richart and his team started dabbling with Teraki’s basic concept in 2014 and formally established a company in 2015.

Teraki certainly isn’t the first company to talk about edge computing. But everyone is grappling with big data, said Richart.

Teraki guns for efficient embedded processing (Source: Teraki)

Consider highly automated vehicles. If little pre-processing occurs at the sensor level, for example, carmakers could pay dearly for heavy-duty sensor fusion in a vehicle because it will require a more powerful and costly central computing unit.

Sending a lot of data to the cloud and storing it there for AI training could also cost OEMs serious money. Add to this the issue of latency, which could trigger delays and inaccuracy in object detection.

Software to reduce data

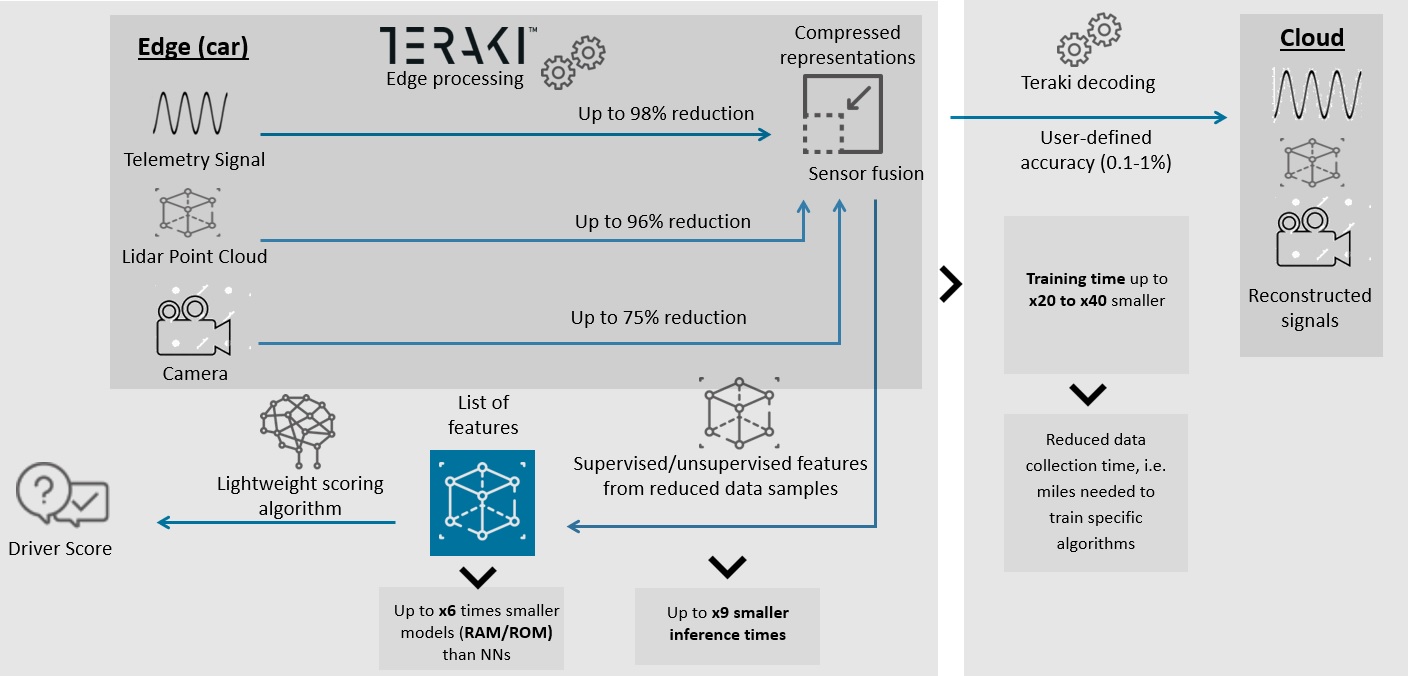

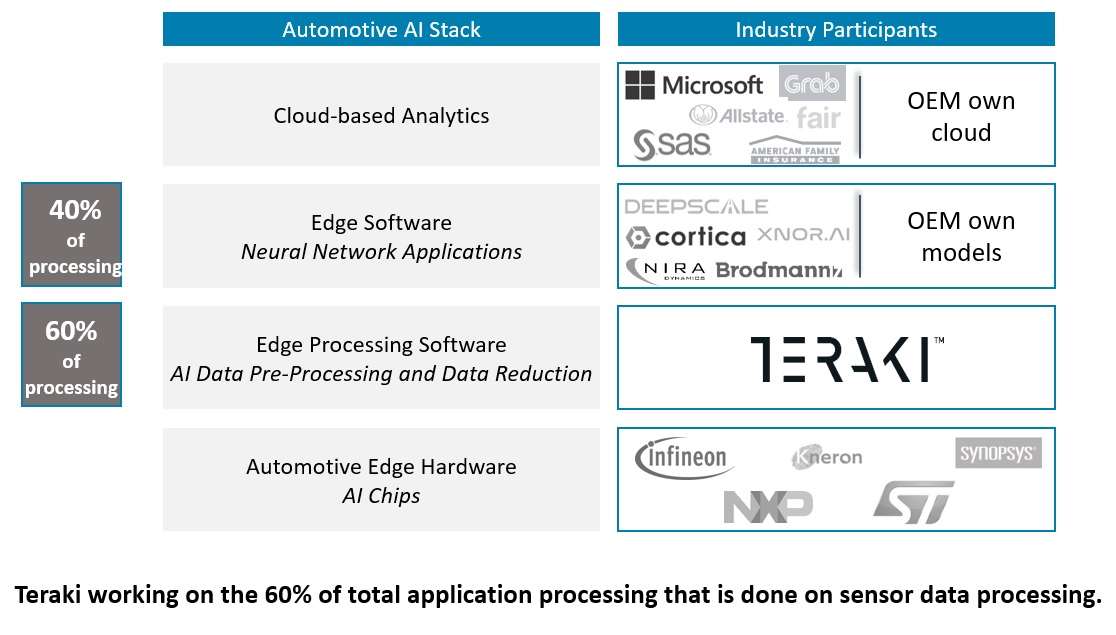

Focused on embedded edge processing, Teraki has developed software technology that can reduce data on signals from three different types of sensors: telematics, cameras and lidars/radars.

Teraki said that its software can run on a standard automotive chipset such as NXP Semiconductors’ Bluebox and Infineon’s Aurix microcontroller. Even better, “the first series contracts are underway,” according to Teraki.

Teraki’s software can reduce telematics signals by 90 to 97 percent, the company claims. Once reduced, what is the data used for? It’s good for “predictive maintenance, monitoring driver behavior or crash detection,” according to the company.
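To give a feel for how a telematics stream can shrink this much, here is a minimal sketch of a dead-band filter, a generic signal-reduction technique that forwards a sample only when it differs meaningfully from the last one sent. It is not Teraki's method, which the company has not disclosed; the function names, the threshold and the speed trace are all hypothetical.

```python
def deadband_filter(samples, threshold):
    """Keep a sample only when it differs from the last kept sample
    by more than `threshold`. Returns (index, value) pairs.
    Generic illustration, not Teraki's algorithm."""
    if not samples:
        return []
    kept = [(0, samples[0])]
    last = samples[0]
    for i, value in enumerate(samples[1:], start=1):
        if abs(value - last) > threshold:
            kept.append((i, value))
            last = value
    return kept


def reduction_percent(original_count, reduced_count):
    """Share of samples removed, as a percentage of the original count."""
    return 100.0 * (original_count - reduced_count) / original_count


# Hypothetical vehicle-speed trace (km/h): steady cruising, then a jump.
speed = [50.0] * 40 + [50.2] * 40 + [62.0] * 20
kept = deadband_filter(speed, threshold=1.0)

print(kept)
print(reduction_percent(len(speed), len(kept)))
```

How much a filter like this removes depends entirely on the signal and the chosen threshold, so the figure it prints for this toy trace says nothing about the percentages Teraki quotes.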

Teraki has also developed software that pre-processes 2D image signals from cameras. By processing video frames “prior to an existing codec such as H.264,” Teraki claims it can reduce data by up to 75 percent. That, the company says, would also enhance video-based perception. Development of this software is already complete, and OEM customers are now testing it.

The most promising of Teraki’s software innovations might be its solution for 3D point cloud data generated by lidars, time-of-flight sensors and radars. “We are engaged with many lidar companies now,” said Richart. This software, currently in demo, will be commercially available in the first quarter of this year, according to Teraki.

Where Teraki sits in the ecosystem (Source: Teraki)

What’s the secret in Teraki’s data reduction technology? The company isn’t just blindly reducing bits by compression. Pre-processing of sensory signals can’t be allowed to degrade the data quality and accuracy required for training and running machine learning models.

Richart said, “The key is in the technology that can adaptively resize and filter data, for more accurate object detection and machine learning.” He added, “It’s to know what to extract at the quality you need.”

Reducing data early in an embedded system’s sensor-processing chain leads to faster inference and smaller RAM/ROM requirements. It also substantially reduces training time.
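One way to picture "adaptively resizing" a frame is to keep a region of interest at full resolution and pass on only a coarse version of everything else. The sketch below does that with plain Python lists. It is an illustration under assumptions, not Teraki's implementation: the function names, the fixed region of interest and the 4x block averaging are all hypothetical choices.

```python
def block_average(frame, factor):
    """Downsample a 2D grid by averaging non-overlapping factor x factor blocks."""
    h, w = len(frame), len(frame[0])
    out = []
    for r in range(0, h - h % factor, factor):
        row = []
        for c in range(0, w - w % factor, factor):
            block = [frame[r + dr][c + dc]
                     for dr in range(factor) for dc in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def crop(frame, top, left, height, width):
    """Cut a rectangular region out of a 2D grid at full resolution."""
    return [row[left:left + width] for row in frame[top:top + height]]


def adaptive_resize(frame, roi, factor):
    """Return (full-resolution ROI, coarse whole frame).
    `roi` is (top, left, height, width); a real system would pick it
    from a detector, here it is simply supplied by the caller."""
    top, left, height, width = roi
    return crop(frame, top, left, height, width), block_average(frame, factor)


def count(grid):
    """Number of values in a 2D grid."""
    return sum(len(row) for row in grid)


# Hypothetical 16x16 grayscale frame with a 4x4 region of interest.
frame = [[(r * 16 + c) % 256 for c in range(16)] for r in range(16)]
detail, background = adaptive_resize(frame, roi=(4, 4, 4, 4), factor=4)

print(count(detail) + count(background), "of", count(frame), "values kept")
```

The design choice worth noting is that the detail a detector needs survives untouched inside the region of interest, while the background still provides context at a fraction of the size.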

Frame-based vs. event-based camera

But if reducing latency is so critical to safety in highly automated vehicles, why spend time in pre-processing frames from a conventional frame-based camera? We asked, “How about using an event-based camera such as those engineered by Prophesee?”

Richart, fully aware of advances in neuromorphic engineering, said, “We think we complement each other. Event-driven image sensors excel in latency domain so that it can function as an early warning sign. Meanwhile our software can be much more adaptive in extracting information from any sensors — at a latency below 10 milliseconds.”

Just last month, Teraki closed $11 million in Series A funding. The round was led by Horizons Ventures, joined by strategic investors. Among these are a leading Japanese technology company, US-based State Auto Labs Fund managed by Rev1 Ventures, Bright Success Capital and Castor Ventures. Prior investors Paladin Capital Group and innogy Ventures also participated. This brings total capital invested in Teraki to $16.3 million.
