PCI Express Builds Towards 64G Leap

Article by: Rick Merritt

PCI Express will leverage today's 56G PAM4 interconnects to deliver a 64G spec in 2021, in a speed race that is already forcing new kinds of system designs.

PCI Express (PCIe) will get a 6.0 spec in 2021 enabling data rates up to 64 gigatransfers per second (GT/s), leveraging PAM-4 modulation. The news shows copper interconnects will have a long life, albeit with an increasingly short reach.

The PCI Special Interest Group (SIG) is bringing to mainstream designers the PAM-4 capabilities that serdes developers are already running at 56G and beyond for high-end systems. At the bleeding edge, other groups already have multiple 112G specs in the works and some experts say there’s a clear line of sight to 200G copper links and beyond.

The perpetual trade-off is that the faster the link is, the shorter the distance it can travel. There are some caveats: the trade-off can be mitigated by adopting more expensive printed-circuit board materials or by adding retimer chips. Another consideration is that PAM-4 requires forward error correction (FEC) blocks that add latency.

Systems designers are already moving to cabled links inside servers and networking gear to avoid the costs of retimers and premium board materials. The SIG is still debating what latency its 6.0 spec will support, but one expert said it will need to match the latency of DRAMs measured in tens of nanoseconds.

PAM-4 and FEC are new to the PCIe specs, which up to now have relied on a more relaxed non-return-to-zero (NRZ) technique.
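The difference between the two schemes comes down to bits per symbol: NRZ uses two signal levels (one bit per symbol), while PAM-4 uses four (two bits per symbol). The short Python sketch below illustrates the arithmetic. It treats the quoted GT/s figures as raw bits per second per lane and ignores encoding and FEC overhead, which is a simplifying assumption; the function names are invented for illustration.

```python
import math


def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol when the line uses `levels` amplitude levels."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two, 2 or greater")
    return int(math.log2(levels))


def symbol_rate_gbaud(rate_gt_s: float, levels: int) -> float:
    """Symbol rate needed for a raw per-lane rate (assumed to be bits/s)."""
    return rate_gt_s / bits_per_symbol(levels)


NRZ_LEVELS = 2   # two levels, one bit per symbol
PAM4_LEVELS = 4  # four levels, two bits per symbol

print(symbol_rate_gbaud(32, NRZ_LEVELS))   # Gen5-style NRZ lane
print(symbol_rate_gbaud(64, PAM4_LEVELS))  # Gen6-style PAM-4 lane
```

Under these assumptions both lanes signal at the same symbol rate, so PAM-4 doubles the data rate without doubling the signaling frequency. The price is smaller spacing between levels, which is why error correction enters the picture.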

“It will be challenging… We’re going to squeeze everywhere — materials, connectors — nothing is free… It’s all about the PHY and analog and bit error rates, but we’re lucky our organization has a lot of smart engineers,” said Al Yanes, president of the SIG.

Gen6, as the 6.0 spec is also known, will need to be backward-compatible with all earlier PCIe specs so motherboards and adapter cards can evolve at different timeframes. Delivering a spec that lets products shift between NRZ and PAM-4 schemes presents “a tax burden,” Yanes said.

Large cloud computing providers are among the drivers for the faster speeds. The SIG finished a 32 GT/s Gen5 spec just last month that is already getting tapeouts in chips for AI accelerators, data center processors and storage systems. The move to 400G and 800G networks at big datacenters also is driving the need for fast interconnects.

“We rested for a month before we called a face-to-face with our electrical working group [on Gen6] because it’s all about the PHY these days,” Yanes said, referring to the highly analog-based physical layer block.

Dealing with shorter reaches and rising costs

While PCIe and other copper interconnects are moving ever faster, they are not moving as far. To enable support for the same distances as prior generations, engineers will have to adopt better board materials or use retimer chips — both seen as often prohibitively expensive.

In the days of PCIe 1.0, the spec sent signals as much as 20 inches over traces in mainstream FR4 boards, even passing through two connectors. Signals based on today’s high-end products using the 16-GT/s PCIe 4.0 spec can peter out before they travel a foot without going over any connectors.

As of the Gen4 spec, the SIG stopped reporting distances that its specs will support, given the diversity of possible designs. Instead, the latest specs define the height and width of an eye diagram for a good signal. They also provide rough guidance on signal loss: 28 dB for Gen4, 36 dB for Gen5, and a similar loss expected but, so far, not defined for Gen6.

“We don’t know for a given design its level of cross-talk, reflections of connectors, and materials used — there’s too many unknowns” to report a distance expectation, said a SIG board member from Keysight.
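To see why a loss budget does not translate into a single distance, consider the rough Python sketch below. Only the 28 dB and 36 dB budgets come from the figures above. The per-inch and per-connector loss values are hypothetical placeholders, not measured data for any real board material or connector, and the function name is invented for illustration.

```python
# Loss budgets quoted in the article, in dB.
LOSS_BUDGET_DB = {"Gen4": 28.0, "Gen5": 36.0}


def max_trace_inches(budget_db: float,
                     loss_db_per_inch: float,
                     connectors: int = 0,
                     loss_db_per_connector: float = 0.0) -> float:
    """Trace length that fits in the budget after subtracting connector loss.

    All loss inputs are assumptions supplied by the caller; real channels
    also lose margin to crosstalk and reflections, which are ignored here.
    """
    if loss_db_per_inch <= 0:
        raise ValueError("loss_db_per_inch must be positive")
    remaining_db = budget_db - connectors * loss_db_per_connector
    return max(remaining_db, 0.0) / loss_db_per_inch


# HYPOTHETICAL inputs: 2.0 dB/inch of trace, 1.5 dB per connector.
for gen, budget in LOSS_BUDGET_DB.items():
    bare = max_trace_inches(budget, 2.0)
    with_connectors = max_trace_inches(budget, 2.0, connectors=2,
                                       loss_db_per_connector=1.5)
    print(gen, bare, with_connectors)
```

Changing either placeholder shifts the result substantially, which is consistent with the SIG's decision to publish a loss figure and leave the distance to each design.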

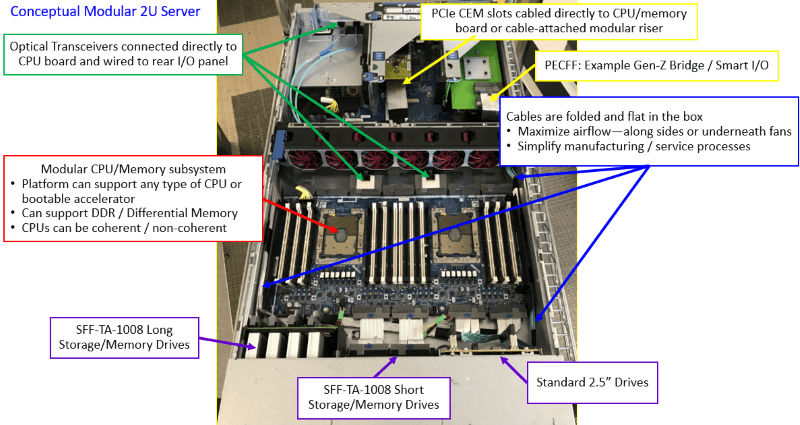

To shave costs, OEMs are increasingly turning to short cables to link components mounted on multiple small boards inside a system.

“For example, instead of designing a motherboard that runs the length of the enclosure, a system could be designed such that the compute is isolated to a very small mechanical that holds just the sockets and DIMMs, and then cables are used to run any distance,” said Michael Krause, a fellow from Hewlett-Packard Enterprise who has shared his ideas for system designs with partners. “Many platform vendors are moving or will be moving to modular mechanical designs.”

Krause believes that OEMs will need to standardize on a few small board sizes and connector types to drive volumes up and thus costs down for the new approach. Some standards groups are already defining some of the new form factors, he added.

“We haven’t had a lot of success with external PCIe cabling, but I’ve heard cabling inside the box has been used by some members — twenty years ago, cabling was seen as a bad thing,” said Yanes.

At the annual SIG event here, several IP and test vendors showed demos of Gen4 and Gen5 designs. A Synopsys engineer said that the company has 160 licensees for its Gen4 IP, including AI accelerators from startup Habana.

Also at the event, an IP team from Marvell showed a working demo of an x4 Gen5 test block that could someday be part of a controller for a solid-state drive. Intel has said that it will support Gen5 in its processors in 2021.

A Synopsys engineer said that multiple customers have its Gen5 IP in working silicon and products that have taped out generally in 16-nm and finer processes. PLDA described Gen5 IP that it is selling for two switches and a bridge, with multiple customer tapeouts expected before April.
