LED Flicker and AV Machine Vision

Article By : Junko Yoshida

OmniVision will start sampling its new 8.3 megapixel automotive image sensors, which are capable of mitigating LED flicker, in the first quarter of 2020.

A vast number of things could blind a driver on the road. When exiting a tunnel with the sun low on the horizon, the sudden transition from dark to very bright can be dazzling. A machine vision unit fitted into a highly autonomous vehicle (Level 3 or above) would struggle in the same situation. But it would also struggle in circumstances that humans handle just fine. One of those is LED flicker.

LEDs are designed to pulse on and off rapidly to control brightness and power. They flicker because they are not always on.

Celine Baron, OmniVision’s staff automotive product manager, noted during an interview with EE Times that LEDs are everywhere, ranging from headlamps and traffic lights to road signs, billboards and bus displays. Given their ubiquity, it’s hard to avoid LED flicker. It can be distracting enough to human eyes, but it could be fatal to an AV’s machine vision. Human vision can compensate for flickering. AV machine vision can’t.

Machine vision can suffer from LED flicker and from a sudden transition from dark to very bright. (Source: OmniVision)

In the first quarter of 2020, OmniVision will start sampling its new 8.3 megapixel automotive image sensors, which mitigate LED flicker while offering 140dB of high dynamic range.
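To put the 140dB figure in perspective, the short sketch below converts a dynamic range in decibels into a brightest-to-darkest signal ratio, using the 20·log10 convention commonly applied to image sensors. The function names are illustrative and do not come from OmniVision.

```python
import math


def db_to_contrast_ratio(db: float) -> float:
    """Convert dynamic range in dB to a linear ratio (20*log10 convention)."""
    return 10 ** (db / 20)


def db_to_stops(db: float) -> float:
    """Express the same dynamic range in photographic stops (doublings)."""
    return math.log2(db_to_contrast_ratio(db))


if __name__ == "__main__":
    ratio = db_to_contrast_ratio(140)
    print(f"140 dB is a ratio of about {ratio:.0e} to 1")
    print(f"That is about {db_to_stops(140):.1f} stops")
```

Under that convention, 140dB corresponds to a ratio of roughly ten million to one between the brightest and darkest signals the sensor can distinguish in one scene.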

Although OmniVision is not the first image sensor supplier to come up with LED flicker mitigation capabilities, Baron explained that the market has yet to see an image sensor that combines LED flicker mitigation with high dynamic range (HDR). “Typically, you’d have to choose one or the other.”

OmniVision claimed that conventional HDR combinations cannot guarantee the combined image is free from flicker.

Split pixel technology

One way to prevent flicker is to increase the exposure time of an image sensor so that it is long enough to capture the LED pulsing. The flip side is that a longer exposure can saturate the pixels and reduce dynamic range.
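The link between exposure time and flicker can be illustrated with a simple model. The sketch below assumes an LED driven by an ideal on/off pulse train and an exposure window that starts at a random point in the LED cycle; it then estimates how often the exposure sees no light at all. The 90Hz pulse rate and 10 percent duty cycle are illustrative assumptions, not OmniVision figures.

```python
def miss_probability(pwm_hz: float, duty: float, exposure_s: float) -> float:
    """Probability that a randomly timed exposure catches no LED light.

    Assumes an ideal square-wave LED drive: on for `duty` of each period,
    off for the rest. The exposure misses the LED only if it fits entirely
    inside one off interval.
    """
    period = 1.0 / pwm_hz
    off_time = (1.0 - duty) * period
    return max(0.0, (off_time - exposure_s) / period)


if __name__ == "__main__":
    # Hypothetical LED: 90 Hz pulse rate, on for 10% of each cycle.
    for exposure_ms in (0.1, 1.0, 5.0, 11.0):
        p = miss_probability(90.0, 0.10, exposure_ms / 1000.0)
        print(f"exposure {exposure_ms:5.1f} ms -> miss probability {p:.2f}")
```

In this model, once the exposure is at least as long as the LED’s off interval, every frame catches some LED light. A short exposure chosen to avoid saturation in a bright scene will often show the LED as dark.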

OmniVision, according to Baron, worked around the problem by developing a proprietary technology called Split Pixel.

Split Pixel, deployed in the company’s image sensor, uses one large photodiode (PD) with a short exposure time to capture the signal of the scene. In addition, the OmniVision sensor uses one small photodiode with a long exposure to capture the LED signal. Split Pixel then joins both captures into the final picture.

HALE on Chip

Earlier this year, OmniVision developed a single chip called “HALE on Chip.” HALE stands for HDR and LFM Engine, where LFM is LED flicker mitigation, and the two are combined on one chip. By leveraging what the company calls “intelligent combination,” OmniVision developed new image sensors that preserve dynamic range while capturing flickering LEDs.

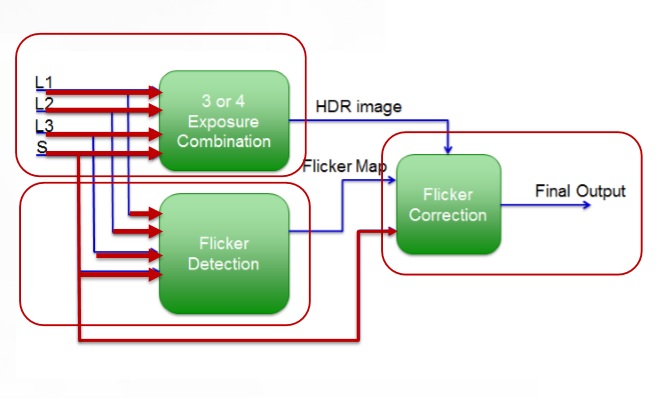

How OmniVision’s HALE on Chip works (Source: OmniVision)

Asked how the LFM + HDR combination concept works, the company explained: 1) the large PD captures (L and VS) and the small PD capture generate an HDR image via an HDR combiner; 2) the small PD captures LED flicker (with an exposure time of more than 11ms); 3) the small PD capture is used to detect flicker pixels in the large PD images and generate a flicker pixel map; 4) the flicker map and the small PD image are used to correct the flicker pixels in the combined HDR image, producing a flicker-free HDR image.
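The last two steps of that description can be sketched in a few lines. This is a toy illustration only, not OmniVision’s algorithm: it assumes both images are already normalized to the same brightness scale, and it flags a pixel as flickering when the long-exposure small PD value exceeds the combined HDR value by a hypothetical threshold ratio. The function names and the threshold are assumptions made for this example.

```python
from typing import List

Image = List[List[float]]


def build_flicker_map(hdr: Image, small_pd: Image, ratio: float = 2.0) -> List[List[bool]]:
    """Flag pixels where the long-exposure capture is much brighter than the HDR image.

    A large gap suggests the short exposures missed a pulsing LED.
    The `ratio` threshold is a hypothetical tuning parameter.
    """
    return [
        [s > ratio * h for h, s in zip(hdr_row, small_row)]
        for hdr_row, small_row in zip(hdr, small_pd)
    ]


def correct_flicker(hdr: Image, small_pd: Image, fmap: List[List[bool]]) -> Image:
    """Replace flagged pixels with the small PD value; keep the HDR value elsewhere."""
    return [
        [s if flagged else h for h, s, flagged in zip(hdr_row, small_row, map_row)]
        for hdr_row, small_row, map_row in zip(hdr, small_pd, fmap)
    ]


if __name__ == "__main__":
    # 2x2 toy images; the bottom-left pixel is an LED the short exposures missed.
    hdr = [[0.10, 0.80], [0.05, 0.40]]
    small_pd = [[0.12, 0.78], [0.90, 0.41]]
    fmap = build_flicker_map(hdr, small_pd)
    print(fmap)
    print(correct_flicker(hdr, small_pd, fmap))
```

Correcting only the flagged pixels leaves the rest of the HDR image untouched, which matches the stated goal of preserving dynamic range while removing flicker.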

3D stacking technology

OmniVision’s new 8.3 megapixel image sensor platform consists of two products. One is the OX08A, featuring what the company claims to be the automotive industry’s best HDR. The other is the pinout-compatible OX08B, which does both HDR and LFM.

The OX08B is a stacked sensor, Baron explained. Using 3D stacking technology, OmniVision added HALE on Chip, which runs the combined HDR and LFM engine algorithm, at the bottom of its CMOS image sensor.

Pierre Cambou, principal analyst at Yole Développement, called OmniVision’s use of a stacked semiconductor approach “exciting.” He said in a statement, “OmniVision’s new automotive CIS platform includes key features such as HDR and LFM, and is enabled by a stacked semiconductor approach. Introducing such [stacking] technology to its automotive lineup allows for on-chip integration that reduces BOM costs while providing a high level of performance and features in a very compact package, indeed much in sync with current market expectations.”

Additional features

OmniVision’s 8.3 megapixel image sensors feature a couple of new wrinkles. One is what the company describes as “Multiple Regions of Interest (ROIs).”

The new image sensor can select up to two regions of interest deemed most relevant to machine vision. Both the OX08A and OX08B provide two independent and configurable regions of interest, thus reducing bandwidth in autonomous driving applications. Baron acknowledged that the idea is similar to event-driven machine vision technology developed by Prophesee (Paris), but called such a neuromorphic computing-based sensor “not ready” for prime time.
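A rough calculation shows why reading out only regions of interest reduces bandwidth. The sketch below assumes a 3840 x 2160 full frame, which is consistent with 8.3 megapixels but is not stated by OmniVision, and two hypothetical, non-overlapping ROI sizes chosen purely for illustration.

```python
from typing import List, Tuple

Size = Tuple[int, int]  # (width, height) in pixels


def roi_pixel_fraction(full_frame: Size, rois: List[Size], max_rois: int = 2) -> float:
    """Fraction of full-frame pixels read out when only the ROIs are transmitted.

    Assumes the ROIs do not overlap. `max_rois` reflects the two-region
    limit described in the article.
    """
    if len(rois) > max_rois:
        raise ValueError(f"at most {max_rois} regions of interest are supported")
    full_pixels = full_frame[0] * full_frame[1]
    roi_pixels = sum(width * height for width, height in rois)
    return roi_pixels / full_pixels


if __name__ == "__main__":
    full = (3840, 2160)                # assumed full-frame resolution
    rois = [(1280, 720), (640, 480)]   # hypothetical ROI sizes
    fraction = roi_pixel_fraction(full, rois)
    print(f"ROIs carry {fraction:.1%} of the full-frame pixel data")
```

With these example numbers, the two regions together carry about 15 percent of the full-frame pixel data, at the same frame rate and bit depth.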

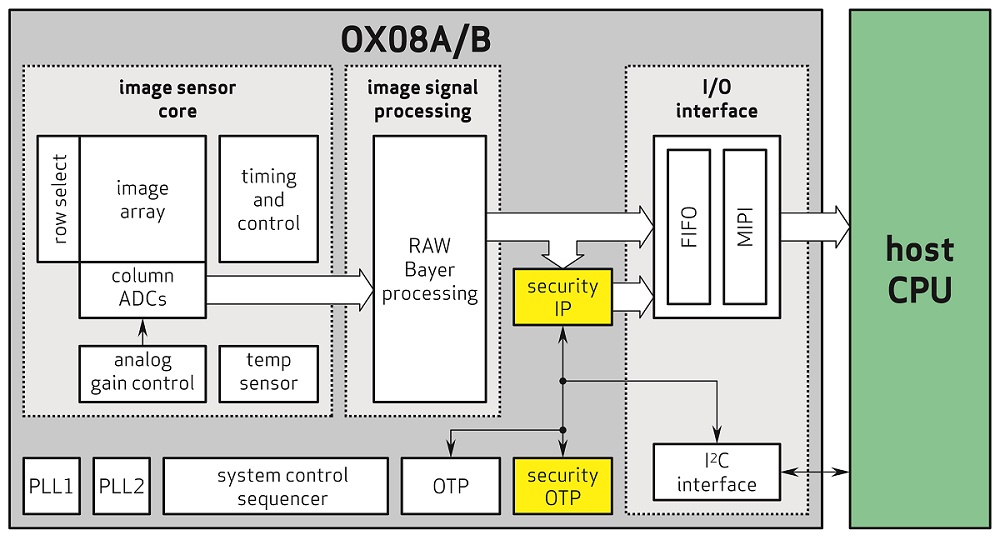

Block diagram of OmniVision OX08A and OX08B (Source: OmniVision)

Security is another addition to the new image sensors. OmniVision describes the insertion of fake images or videos into machine vision applications as a potential security threat, and the new image sensors come with security elements based on industry-standard encryption techniques. The good news, said Baron, is that the security feature consumes less than 10 percent of the sensor’s total power. Activating this feature is up to the car maker, she added. Baron explained that the automotive industry has not reached any agreement as to where best to implement security.

OmniVision positions the company’s new image sensors as “premium” elements suited to Level 3 or above highly autonomous vehicles. The company, however, would not reveal pricing.

Both the OX08A and OX08B are scheduled for sampling in the first quarter of next year and will be mass produced in 2020, the company said.
