Harvard embeds Flex Logix FPGA in deep-learning SoCs

Article By : Vivek Nanda

Start-up Flex Logix has scored a design win with Harvard researchers selecting the company's reconfigurable RTL technology for their deep learning SoC work.

A research group at Harvard has developed an SoC targeting machine learning in various applications, including data centres, mobile and IoT, using embedded reconfigurable logic from start-up Flex Logix Technologies Inc.

The research group of Professors David Brooks and Gu-Yeon Wei at Harvard’s John A. Paulson School of Engineering and Applied Sciences has already completed the chip design and tape-out, and the chip is now going into fabrication on TSMC's 16FFC process.

“Embedded FPGA is changing the way chips are designed and we recognize the power of being able to reconfigure RTL when designing our deep learning chip,” Professor Gu-Yeon Wei, Gordon McKay Professor of Electrical Engineering and Computer Science at Harvard University, said in a press statement. “An important attribute of modern deep learning research is how quickly the algorithms change and improve. With embedded FPGAs from Flex Logix we can accommodate these changes in real-time and iterate them for rapid improvement.”

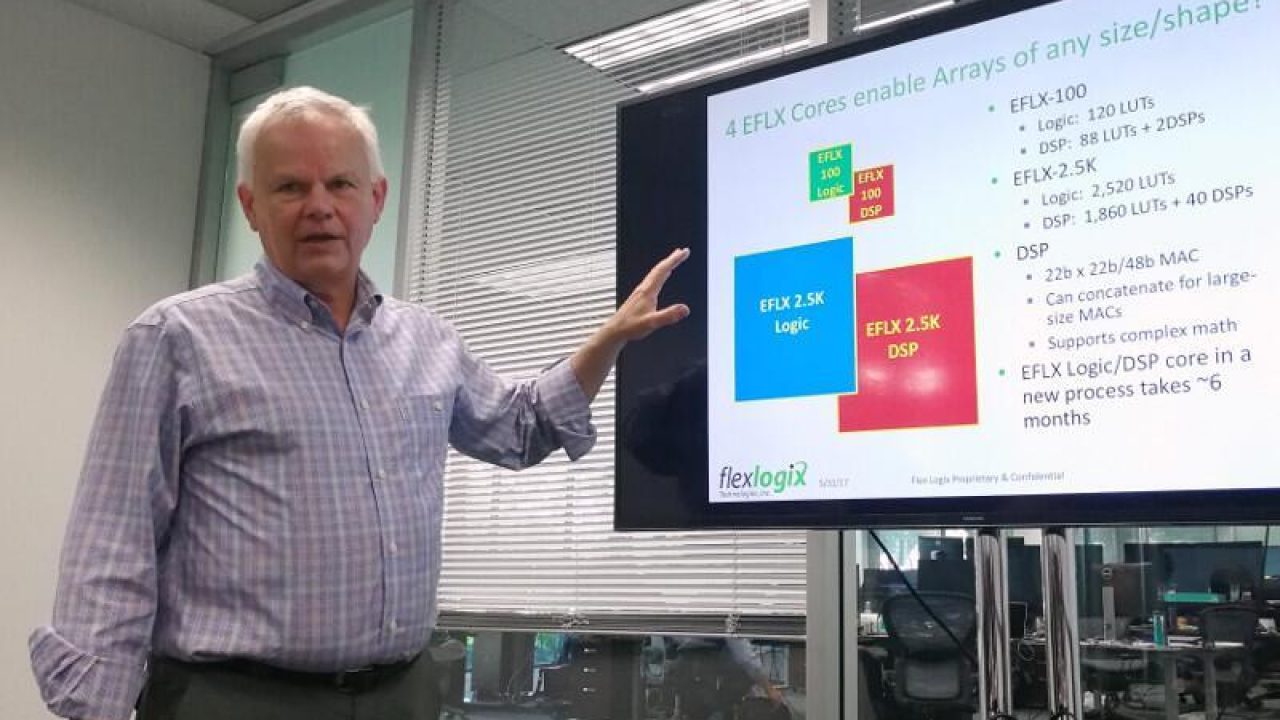

Talking to EE Times Asia, Geoff Tate, CEO and co-founder of Flex Logix (pictured above), said that a lot of [their] customers always wanted to embed FPGAs to bring flexibility into the chip level. "The reason people want to update RTL on a chip is that industry standards keep changing. If you design a switch chip today and packet standards change a year later, the switch chip has to send packets over to a processor which is 1,000 times slower and if it can't keep up with all the packets you have to replace all the chips. So the data centre people are asking for chips where you can change the protocols programmably," he explained.

"At 90nm a mask is $50,000 whereas at 40nm the mask costs close to $1 million," he said. "Using our IP it is cheaper and faster for companies to do all the variations they need than doing them as a separate mask."

He added that networking, wireless base stations and data centres, where FPGAs and Xeon processors are getting integrated, are seeing a lot of activity in this direction. "There are a lot of accelerators for things like neural networks where people are looking into integrating FPGAs," he said.

"In deep learning, the algorithms are changing rapidly. A chip accelerates the execution of the algorithms but by the time the chip comes back from the fab, the algorithms have gotten better. So, Harvard has put some embedded FPGA in their chip to have the ability to update some of the algorithms in real time so that they can accelerate the rate at which they can improve their algorithms and thereby the value of their academic chip."
