Groq Rolls Out AI Chip on the Cloud

Article By: Sally Ward-Foxton

Only the second startup to make AI accelerator silicon available in the cloud.

Groq’s tensor streaming processor (TSP) silicon is now available to accelerate customers’ AI workloads in the cloud. Cloud service provider Nimbix now offers machine learning acceleration on Groq hardware as an on-demand service for “selected customers” only.

While several startups are building AI silicon for the data center, Groq and Graphcore are now the only two whose accelerators are commercially available to customers as part of a cloud service. Graphcore previously announced that its accelerators are available through Microsoft Azure.

“Groq’s simplified processing architecture is unique, providing unprecedented, deterministic performance for compute intensive workloads, and is an exciting addition to our cloud-based AI and Deep Learning platform,” said Steve Hebert, Nimbix’s CEO.

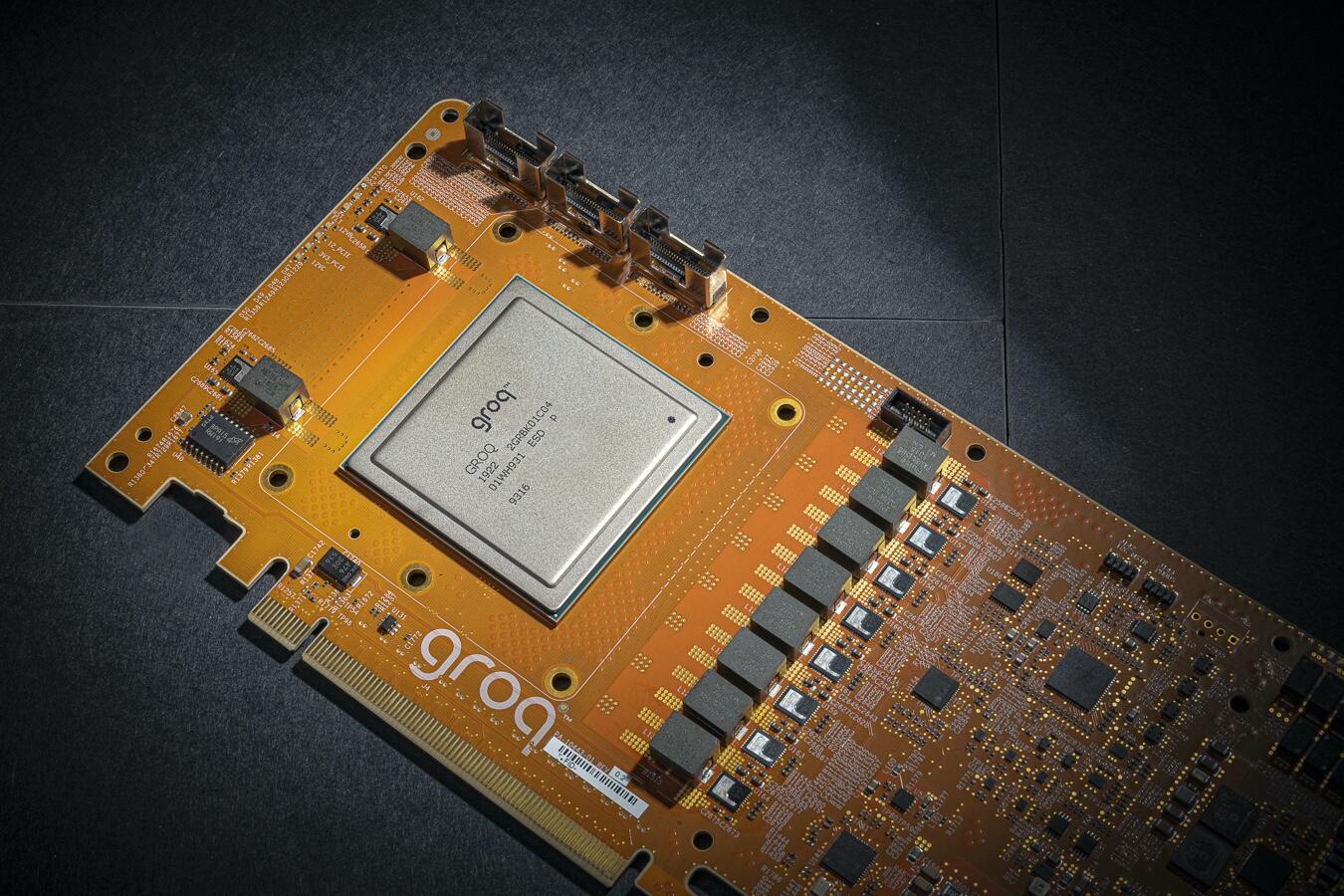

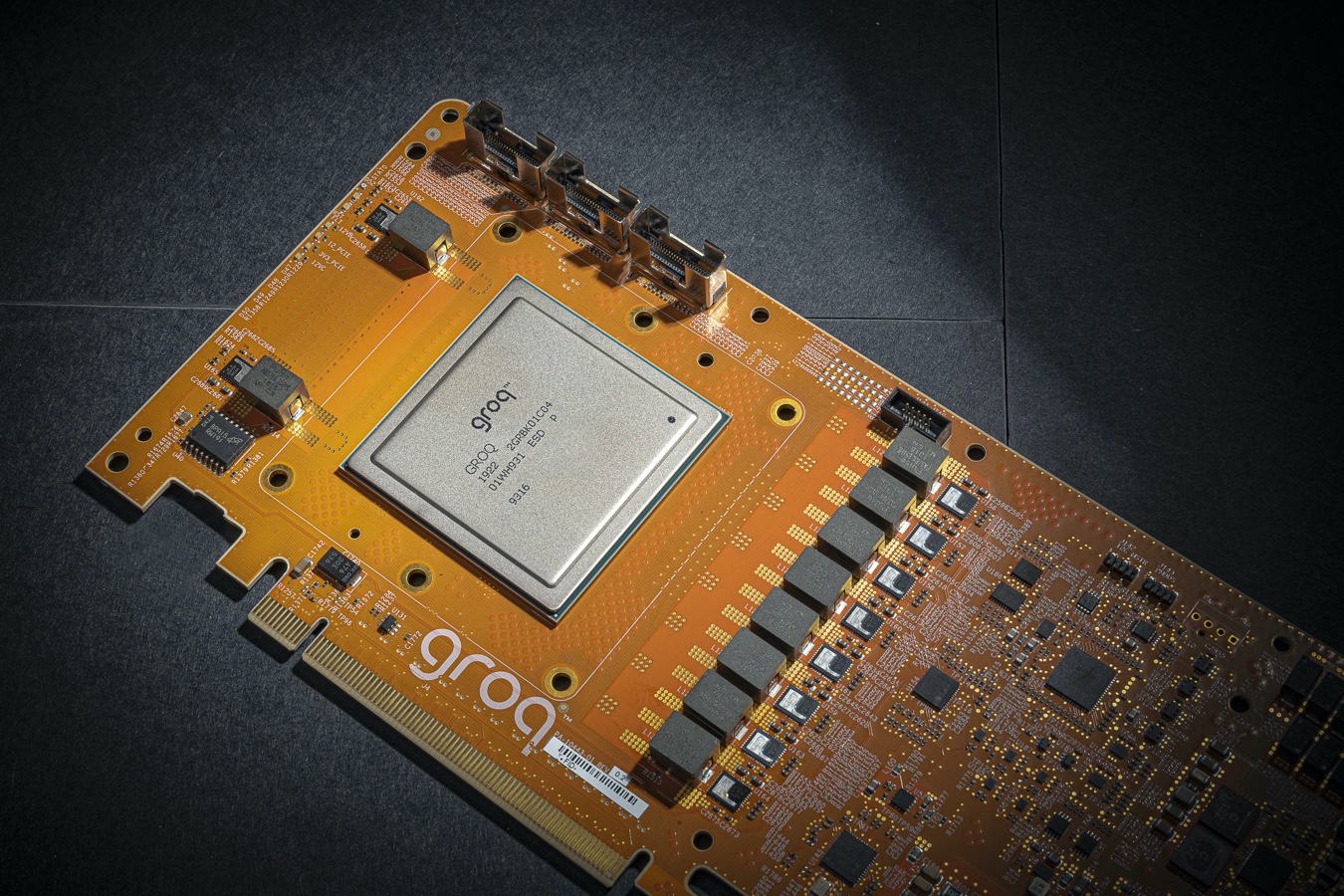

Groq is only the second AI accelerator startup to make its hardware available in the cloud (Image: Groq)

Groq’s TSP chip, launched last fall, is capable of an enormous 1,000 TOPS (1 peta-operation per second). Recent results published by the company show the chip can achieve 21,700 inferences per second on ResNet-50 v2, which according to Groq more than doubles the performance of today’s GPU-based systems. These results suggest Groq’s architecture is one of the fastest, if not the fastest, commercially available neural network processors.
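To put the two headline figures in perspective, the short Python sketch below converts them into per-inference terms. It uses only the numbers quoted above; the function names are illustrative, and the results are simple arithmetic rather than measurements. In particular, the average time per inference is the reciprocal of throughput, which is not necessarily the end-to-end latency of a single request, and the operations figure is an upper bound derived from peak TOPS, not the number of operations ResNet-50 actually uses.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the article.
# Function names are illustrative; nothing here is a Groq measurement.

PEAK_OPS_PER_SECOND = 1_000 * 10**12      # 1,000 TOPS = 1e15 operations/s
INFERENCES_PER_SECOND = 21_700            # ResNet-50 v2 figure quoted by Groq


def average_microseconds_per_inference(inferences_per_second: float) -> float:
    """Reciprocal of throughput, expressed in microseconds."""
    return 1e6 / inferences_per_second


def peak_ops_budget_per_inference(peak_ops_per_second: float,
                                  inferences_per_second: float) -> float:
    """Upper bound on operations available per inference at peak rate."""
    return peak_ops_per_second / inferences_per_second


avg_time_us = average_microseconds_per_inference(INFERENCES_PER_SECOND)
ops_budget = peak_ops_budget_per_inference(PEAK_OPS_PER_SECOND,
                                           INFERENCES_PER_SECOND)

if __name__ == "__main__":
    print(f"Average time per inference: {avg_time_us:.1f} microseconds")
    print(f"Peak operations available per inference: {ops_budget:.2e}")
```

At the quoted throughput, one inference completes roughly every 46 microseconds on average.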

“These ResNet-50 results are a validation that Groq’s unique architecture and approach to machine learning acceleration delivers substantially faster inference performance than our competitors,” said Jonathan Ross, Groq’s co-founder and CEO. “These real-world proof points, based on industry-standard benchmarks and not simulations or hardware emulation, confirm the measurable performance gains for machine learning and artificial intelligence applications made possible by Groq’s technologies.”

Groq says its architecture can achieve the massive parallelism required for deep learning acceleration without the synchronisation overhead of traditional CPU and GPU architectures. Control features have been removed from the silicon and given to the compiler instead, as part of Groq’s software-driven approach. This leads to completely predictable, deterministic operation orchestrated by the compiler, allowing performance to be fully understood at compile time.

Another key feature is that Groq’s performance advantage does not rely on batching, a common data center technique in which multiple data samples are processed at once to improve throughput. According to Groq, its architecture can reach peak performance even at batch = 1, a common requirement for inference applications that may be working on a stream of data arriving in real time. While Groq’s TSP chip offers a moderate 2.5x latency advantage over GPUs at large batch sizes, at batch = 1 the advantage is closer to 17x, the company said.
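The trade-off behind batching can be illustrated with a toy cost model, sketched below in Python. Every number and name in it is a hypothetical assumption chosen for illustration (a fixed per-batch overhead, a per-sample compute cost and a steady arrival interval); none of it describes Groq or GPU hardware. The model shows why a processor that pays a fixed cost per batch gains throughput from larger batches, while a sample arriving in a real-time stream waits longer for its batch to fill.

```python
# Toy cost model of batching. All parameters are hypothetical assumptions,
# not measurements of any real accelerator.

FIXED_OVERHEAD_MS = 2.0      # assumed cost paid once per batch
PER_SAMPLE_MS = 0.1          # assumed compute cost per sample
ARRIVAL_INTERVAL_MS = 1.0    # assumed gap between samples in the stream


def batch_time_ms(batch_size: int) -> float:
    """Time to process one batch under the assumed cost model."""
    return FIXED_OVERHEAD_MS + batch_size * PER_SAMPLE_MS


def throughput_per_second(batch_size: int) -> float:
    """Samples processed per second when batches run back to back."""
    return batch_size / batch_time_ms(batch_size) * 1000.0


def worst_case_latency_ms(batch_size: int) -> float:
    """Latency of the first sample in a batch: it waits for the remaining
    (batch_size - 1) samples to arrive, then for the whole batch to run."""
    wait_ms = (batch_size - 1) * ARRIVAL_INTERVAL_MS
    return wait_ms + batch_time_ms(batch_size)


if __name__ == "__main__":
    for size in (1, 8, 32, 128):
        print(f"batch={size:>3}  "
              f"throughput={throughput_per_second(size):8.0f}/s  "
              f"worst-case latency={worst_case_latency_ms(size):6.1f} ms")
```

Under these assumptions, throughput climbs with batch size while worst-case latency climbs with it, which is why hardware that reaches peak performance at batch = 1 matters for streaming inference.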
