El Capitan to Be Powered by AMD CPUs and GPUs

Article by: Brian Santo

With AMD, HPE/Cray increases the supercomputer's performance from 1.5 exaflops to 2 exaflops. El Capitan promises to be the world's fastest machine when switched on in 2023.

AMD scored its biggest coup yet in high-performance computing (HPC), getting both its CPUs and GPUs adopted in El Capitan, the supercomputer likely to be the world’s fastest — by far — once it’s up and running in 2023.

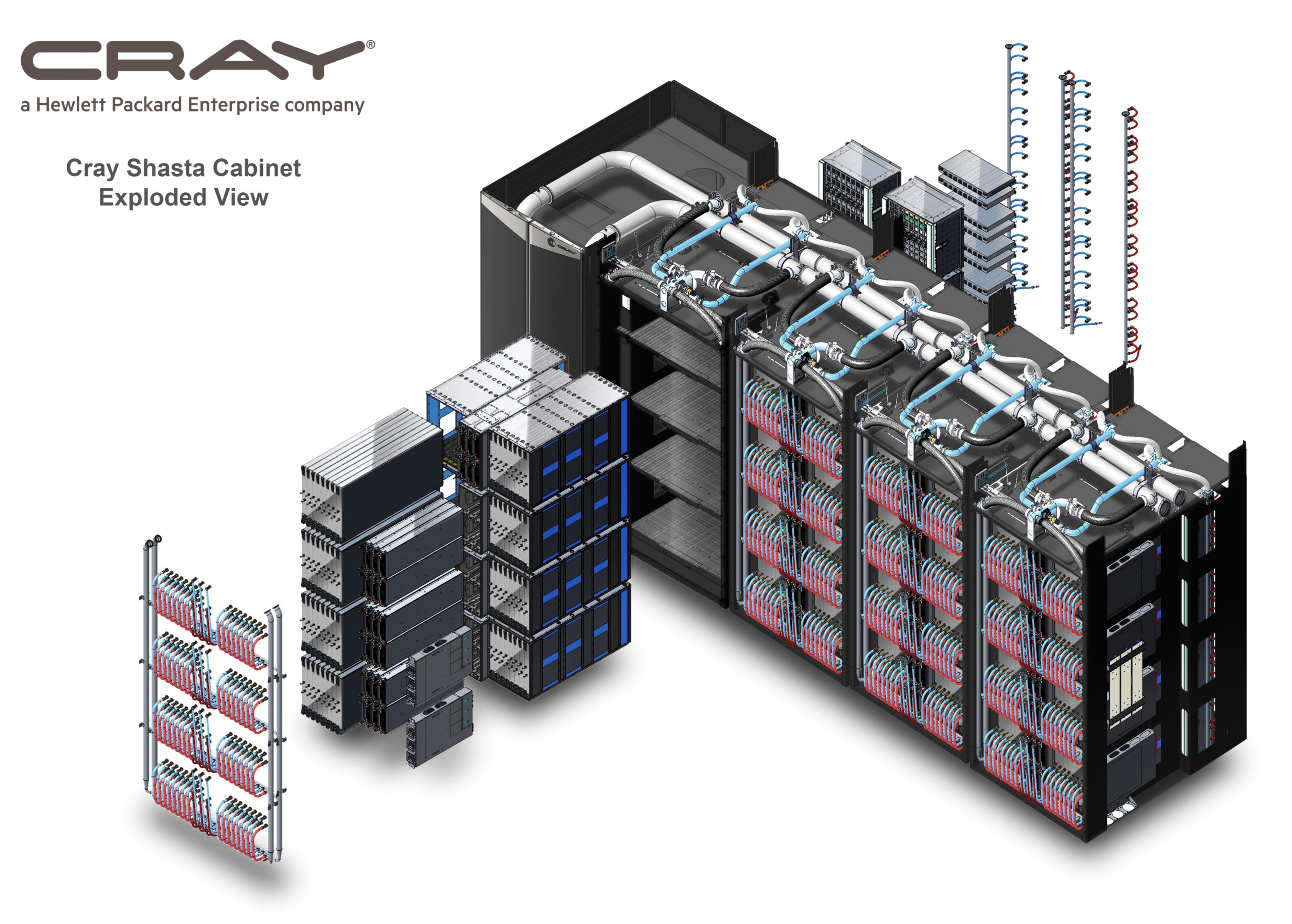

The U.S. Department of Energy’s National Nuclear Security Administration (NNSA) announced El Capitan in August of last year. The DoE awarded the $600 million supercomputer project to Cray Inc., at the time still in the process of being purchased by Hewlett Packard Enterprise. With that acquisition completed, HPE takes over the contract.

When the El Capitan project was announced, neither the DoE nor HPE/Cray had specified whose processors would be used. El Capitan was originally projected to operate at 1.5 exaflops, but AMD convinced HPE/Cray and the DoE that, with future versions of its Epyc CPUs and Radeon GPUs working in combination with Cray's Slingshot interconnect, it could help HPE/Cray deliver a system capable of 2 exaflops.

Two exaflops is about 10 times faster than today’s most powerful supercomputer and faster than the world’s top 200 existing supercomputers combined, said Steve Scott, long the lead architect at Cray and now senior vice president and chief technology officer for HPC & AI at HPE.

HPE/Cray expects to deliver El Capitan to Lawrence Livermore National Laboratory in 2023. The machine will be used by LLNL and two other national labs (Los Alamos and Sandia) primarily to model the United States’ aging stockpile of nuclear arms to ensure its safety, reliability and security.

LLNL operates what is currently the second-fastest supercomputer in the world. Called Sierra, it was built by IBM, and it integrates Power9 CPUs from IBM and Nvidia V100 GPUs.

The kind of modeling done by Sierra (and eventually by El Capitan) requires very complex simulations, and as the nuclear stockpile ages, the complexity only increases, explained Bronis R. de Supinski, the CTO of Livermore Computing at LLNL. “We need larger and larger systems to make sure we have the performance we need. El Capitan will meet that,” he continued. He added that with El Capitan, LLNL will be able to routinely do three simulations simultaneously. The lab will also have much greater statistical confidence in the results, de Supinski said.

El Capitan will use next-generation AMD Epyc processors, codenamed "Genoa," featuring AMD's Zen 4 processor core. These will be standard parts, said Forrest Norrod, senior vice president and general manager of the Datacenter and Embedded Solutions Business Group at AMD. The processor technology used in El Capitan will scale all the way down to desktop machines.

Norrod also said El Capitan processors will be based on the third generation of the company’s Infinity architecture, which is essentially an on-chip fabric network.

“The component that provides most of the computational power is next-gen Radeon Instinct, optimized for deep learning – designed with our lead customers – for machine learning and artificial intelligence,” Norrod said.

Unified memory will be available across the CPU and GPU complex. That will make El Capitan easier to program, he said, because it will relieve programmers of having to manage memory resources for the CPUs and GPUs separately. "We think that's key in unlocking performance of El Capitan," Norrod said.

Part of what LLNL wants to do with El Capitan is explore applications of artificial intelligence. De Supinski said LLNL's workloads do not rely on deep learning, but the lab is doing cognitive simulation. "We're bringing AI and other models to bear on our workloads, mostly for accelerating our simulations and improving our accuracy," he said.

Asked if the El Capitan architecture is open to adding purpose-built AI chips, Scott said that the Radeon GPUs are the de facto AI accelerators today, but the system is architected so that specialized AI accelerators could be added.

De Supinski said LLNL is evaluating specialized AI accelerators and can add nodes to El Capitan designed specifically for that purpose. "We'll see how that goes" with LLNL's current supercomputers, he said; if it goes well, the lab will engage with HPE to add those nodes to El Capitan.

AMD has been leveraging its competitive position in GPUs and its growing prowess in CPUs to muscle its way into the exclusive supercomputer processor club that includes Intel, IBM, Nvidia, and a small handful of others. The company has its Epyc "Rome" CPUs built into the Joliot-Curie Rome supercomputer operated by the French Alternative Energies and Atomic Energy Commission (known by the acronym CEA). In May of last year, AMD announced it was working with Cray to build the Frontier supercomputer, a 1.5-exaflops machine for Oak Ridge National Laboratory. At the time of its announcement, Frontier was slated to be the world's fastest supercomputer.

Comments from Norrod, de Supinski, and Scott were made during a recent online press conference.
