Details of Secretive Startup’s AI Accelerator Emerge

Article by: Sally Ward-Foxton

Mixing memory, control and compute blocks keeps data local to achieve 26 TOPS and nearly 3 TOPS/W.

Secretive Israeli AI accelerator startup Hailo has revealed some key details of its novel compute architecture, following the launch of its first chip, the Hailo-8, in May.

The company was founded in February 2017 by members of the Israel Defense Forces’ elite intelligence unit. Today, Hailo has around 60 employees at its headquarters in Tel Aviv.

Hailo went from concept to product in less than two years and has raised $21 million to date in Series A funding. The company has “at least ten” patents pending.

Performance and Power

The Hailo-8, launched at the Embedded Vision Summit, offers a peak performance of 26 TOPS with a claimed power efficiency of 2.8 TOPS/W. Power consumption was measured running ResNet-50 on low-resolution video (224 × 224 at 672 fps) at 8-bit precision with a batch size of 1. Hailo says this is an order of magnitude better than the current market-leading solution for automotive vision inference at the edge.

In an interview with EETimes, Liran Bar, Hailo’s Director of Product Marketing, stressed that the 2.8 TOPS/W figure is realistic because it is based on a real application.

“Companies are claiming all kinds of power efficiency, but they actually take the theoretical number for how many TOPS they have, and then divide it [by the power consumption],” he said. “The [Hailo-8’s] 26 TOPS is the maximum theoretical number, but this is assuming you have 100% utilisation, which is not the case [in real applications].”
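Bar’s distinction between peak and delivered throughput comes down to simple arithmetic. The sketch below is a hypothetical helper, not Hailo’s measurement method: it multiplies frame rate by an assumed operation count per frame to get delivered TOPS, compares that with the peak figure, and divides both by a power figure. The function and field names, and every number in the usage line, are illustrative placeholders rather than Hailo data.

```python
from dataclasses import dataclass


@dataclass
class EfficiencyReport:
    delivered_tops: float         # operations actually performed per second, in TOPS
    utilisation: float            # delivered throughput as a fraction of peak
    headline_tops_per_w: float    # peak TOPS divided by power (the method Bar describes)
    delivered_tops_per_w: float   # delivered TOPS divided by power on this workload


def efficiency(fps: float, ops_per_frame: float, peak_tops: float, power_w: float) -> EfficiencyReport:
    """Compare headline and delivered efficiency for one workload.

    ops_per_frame is the number of operations the network needs per input frame
    (counting a multiply-accumulate as two operations); it is an assumed input.
    """
    if fps < 0 or ops_per_frame < 0:
        raise ValueError("frame rate and operation count must be non-negative")
    if peak_tops <= 0 or power_w <= 0:
        raise ValueError("peak throughput and power must be positive")
    delivered_tops = fps * ops_per_frame / 1e12  # ops/s -> tera-ops/s
    return EfficiencyReport(
        delivered_tops=delivered_tops,
        utilisation=delivered_tops / peak_tops,
        headline_tops_per_w=peak_tops / power_w,
        delivered_tops_per_w=delivered_tops / power_w,
    )


# Placeholder numbers chosen for easy arithmetic; they are not Hailo measurements.
report = efficiency(fps=100, ops_per_frame=1e10, peak_tops=10, power_w=5)
print(report.utilisation, report.headline_tops_per_w, report.delivered_tops_per_w)  # 0.1 2.0 0.2
```

In this toy case only 10% of the peak is used, so the headline efficiency is ten times the delivered one. The gap grows as utilisation falls, which is why Bar stresses how a TOPS/W number was measured.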

Results for object detection using MobileNet-SSD on 720p video, and for semantic segmentation using FCN-16 on 1080p video, were just as impressive.

“Running the [semantic segmentation] network on HD resolution footage, unlike other solutions that require downscaling of the input sensor resolution in order to meet real-time requirements, means we can identify objects from further away, and means OEMs can leverage the expensive input sensors they are using,” Bar said.

A demonstration of semantic segmentation using the Hailo-8 (Video: Hailo)

Compute Architecture

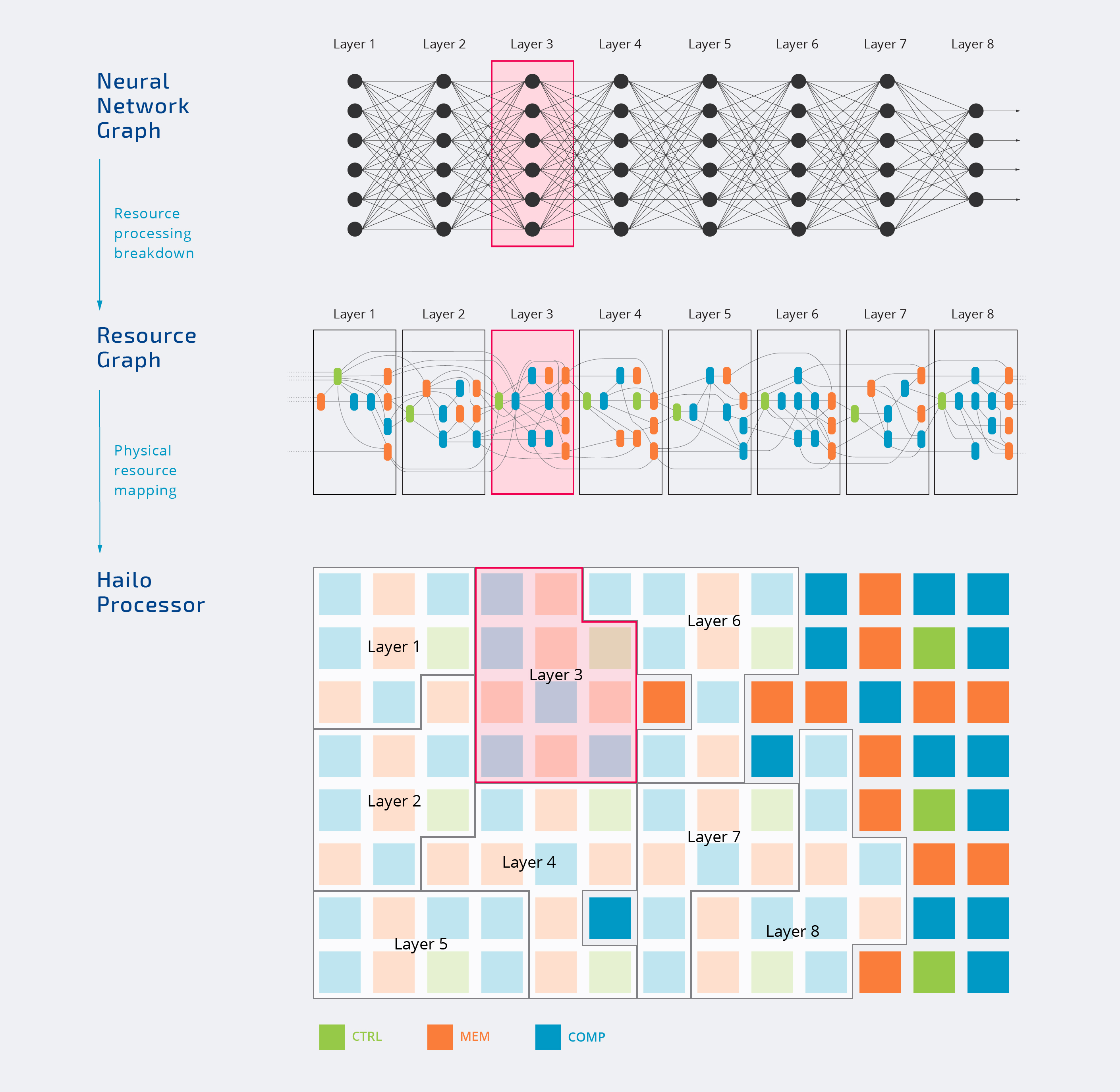

The major difference between Hailo’s architecture and those of CPUs and GPUs is the lack of external memory. Instead, computing resources (memory, control and compute blocks) are distributed throughout the chip. A great deal of power is saved simply by not having to send data on and off the chip.

Neural networks are made up of layers, each comprising a number of nodes. At each node, input values are multiplied by weight values and summed, and the result is passed to the next layer. One layer may need a slightly different mix of computing resources from the next.
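The arithmetic at each node can be sketched in a few lines of plain Python. This is a minimal illustration, assuming fully connected layers and a ReLU activation (the article mentions neither); the function names and toy weights are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output node


def dense_layer(inputs: Vector, weights: Matrix) -> Vector:
    """Each output node multiplies every input by its own weight and sums the products."""
    outputs = []
    for node_weights in weights:
        if len(node_weights) != len(inputs):
            raise ValueError("each weight row must match the input length")
        total = sum(x * w for x, w in zip(inputs, node_weights))
        outputs.append(max(0.0, total))  # ReLU activation: an assumption, not from the article
    return outputs


def run_network(inputs: Vector, layers: List[Matrix]) -> Vector:
    """Feed each layer's outputs into the next layer."""
    activations = inputs
    for weights in layers:
        activations = dense_layer(activations, weights)
    return activations


# Toy network: 3 inputs -> 2 hidden nodes -> 1 output (illustrative weights).
layers = [
    [[0.5, -1.0, 2.0], [1.0, 1.0, 1.0]],
    [[1.0, -0.5]],
]
print(run_network([1.0, 2.0, 3.0], layers))  # [1.5]
```

Every layer repeats the same multiply-and-sum pattern, but layers differ in how many inputs, weights and outputs they handle, which is why their resource needs differ.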

Hailo’s software analyses the requirements of each layer in the network and allocates relevant resources. Memory, control and compute blocks allocated to each layer are mapped onto the chip as close to each other as possible, and subsequent layers are fitted in close by. The idea is to minimise the distance the data has to travel.
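The article does not say how Hailo’s allocator works. As a rough illustration of the stated goal (keep consecutive layers close together so data travels a short distance), the sketch below uses a hypothetical model: the chip is a grid of identical cells, each layer needs some number of cells, and a greedy pass gives each layer the free cells nearest to the previous layer. The grid model, the per-layer cell demands and all function names are assumptions.

```python
from typing import List, Tuple

Cell = Tuple[int, int]
Point = Tuple[float, float]


def manhattan(a: Point, b: Point) -> float:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def centroid(cells: List[Cell]) -> Point:
    return (sum(x for x, _ in cells) / len(cells), sum(y for _, y in cells) / len(cells))


def place_layers(demands: List[int], width: int, height: int) -> List[List[Cell]]:
    """Greedily give each layer the free cells nearest to the previous layer.

    demands[i] is the number of grid cells (each a hypothetical bundle of
    memory, control and compute) that layer i needs.
    """
    if any(d <= 0 for d in demands):
        raise ValueError("every layer needs at least one cell")
    free = {(x, y) for x in range(width) for y in range(height)}
    if sum(demands) > len(free):
        raise ValueError("network does not fit on the chip")
    anchor: Point = (0.0, 0.0)  # the first layer starts in a corner
    placement = []
    for demand in demands:
        # Nearest free cells first; ties broken by coordinates so the result is deterministic.
        nearest = sorted(free, key=lambda c, a=anchor: (manhattan(c, a), c))[:demand]
        free.difference_update(nearest)
        placement.append(nearest)
        anchor = centroid(nearest)  # the next layer is pulled towards this one
    return placement


def data_travel(placement: List[List[Cell]]) -> float:
    """Total distance data moves between consecutive layers, centroid to centroid."""
    centres = [centroid(cells) for cells in placement]
    return sum(manhattan(a, b) for a, b in zip(centres, centres[1:]))


placement = place_layers([3, 2, 4], width=4, height=4)
for i, cells in enumerate(placement):
    print(f"layer {i}: {sorted(cells)}")
print("data travel:", data_travel(placement))
```

A greedy pass like this is easy to follow but not optimal; placement of this kind is generally a hard optimisation problem, and a real tool would also weigh each layer’s memory and compute needs separately rather than treating cells as interchangeable.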

“The innovation comes from the architecture itself,” Bar said. “We are not doing any tiling, we are not doing any compression, we are not doing any sparsity, none of the techniques that traditional computation architectures need to do to overcome the bandwidth issue.”

“There is no hardware-defined pipeline, so we are very flexible and can adopt other neural networks, the hardware supports them all, but we need to bridge the gap with complementary software,” he added.

Software and hardware have been closely co-developed over the last two years. A software stack, including proprietary quantisation schemes, supports TensorFlow, with ONNX support coming in Q4.
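Hailo’s quantisation schemes are proprietary and are not described here. To show what quantisation means in general, the sketch below uses a generic, widely used alternative, not Hailo’s scheme: symmetric per-tensor 8-bit quantisation, which maps floating-point weights onto integers in [-127, 127] using a single scale factor. The function names and sample weights are illustrative.

```python
from typing import List, Tuple


def quantise_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantisation: map [-max|v|, +max|v|] onto [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(v / scale))) for v in values]  # round, then clamp to int8 range
    return q, scale


def dequantise(q: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point values from the 8-bit integers."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantise_int8(weights)
print(q)  # [50, -127, 1, 100]
print(dequantise(q, scale))
```

Each restored value is within half a scale step of the original. Schemes used in practice often refine this with per-channel scales or calibration data, and that kind of refinement is where vendors’ own quantisation work typically differs.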

Driver Assistance

The Hailo-8 is designed for neural network acceleration in edge devices, with a particular focus on the automotive sector, targeting advanced driver assistance systems (ADAS) and autonomous driving applications. It is in the process of being certified for ASIL-B at the chip level (and ASIL-D at the system level) and is AEC-Q100 qualified.

The Hailo-8 also suits applications that require the latency and data privacy provided by performing AI inference locally on the edge device, rather than in the cloud.

“Automotive is our strategic market and most of our investors came from the automotive market,” said Bar. “We already have engagement with automotive Tier-1s and OEMs, but this will take a while to generate revenue.”

So far, Hailo sees automotive customers looking beyond raw compute when choosing AI accelerators, with optimisation gaining importance.

Because it acts as a co-processor, the Hailo-8 can be designed in alongside SoCs that automotive Tier-1s had already begun developing before the requirement for deep learning came along.

“For time to market, [customers] can connect the Hailo-8 as a co-processor, keeping all the development they did on the SoC, but offload the neural network tasks. This is a big advantage from their perspective,” Bar said.

How does Hailo fancy its chances against the dozens of other AI edge accelerator startups out there?

“There are many innovative technologies out there that still need to be proven,” Bar said. “There are ideas about FPGAs, analogue devices, memory-based architectures, but eventually, the challenge is to make it as a product and to put the real chip on the table. When we look around and see the available [competitor] chips, there are not actually many out there that can be integrated today.”

Mass production of the Hailo-8 will begin in Q1 2020.
