Data is King for AI Sound Recognition

Article By : Sally Ward-Foxton

Robust, high-quality training data makes models more efficient — so efficient they can run on simple, low-power MCUs.

CAMBRIDGE, UK — As I tentatively squeeze the trigger, the gun fires, but the sound is not quite what I expect…

I’m standing in a semi-anechoic chamber in the audio lab of AI sound recognition company Audio Analytic, and far from the classic gunshot noise, the sound is more akin to a loud pop. In my amateur assessment, it’s more like what I imagine a balloon bursting sounds like. Except that audio expert Chris Mitchell, CEO of Audio Analytic, tells me that in audio terms a gunshot is actually nothing like the sound of a balloon bursting. I stand corrected.

Firing the gun again outside the anechoic chamber, in the warehouse that houses the lab, produces something more like the classic gunshot sound one might hear in movies. The purpose of this demonstration is to illustrate the difference between the sound that actually comes from the gun, and the sound that we more commonly associate with gunshots, which is made up of reflections and echoes of the sound from its environment.

Separating these two things is absolutely crucial if we want to make an AI that can reliably be used to detect gunshots, since we want it to respond to the sound the gun actually makes, rather than any sounds that are the product of the environment, Mitchell explains.

Audio Analytic’s laboratory in the outskirts of Cambridge also has numerous sirens, doorbells and dozens upon dozens of smoke alarms from around the world (much harder to acquire than the gun, Mitchell tells me, because of the radioactive material in the sensors). Sounds from these devices are carefully recorded, along with events like dogs barking and windows breaking, and used to train the company’s world-leading AI models for sound recognition.

These machine learning models are used to give machines a sense of hearing, allowing them to sense context based on sounds happening in the environment. This might mean a security system that listens for smoke alarms, glass breaking or gunshots, but there are a variety of applications for consumer devices that can analyse the audio scene they are in and use this context to either take action, or subtly tweak their audio output in response.

The key to training an AI model to accurately recognize sounds, Mitchell says, is data. Collection of high-quality data, and labeling that data properly, is of paramount importance to creating efficient models that can achieve accurate sound recognition, even with limited amounts of compute power.

TinyML Models

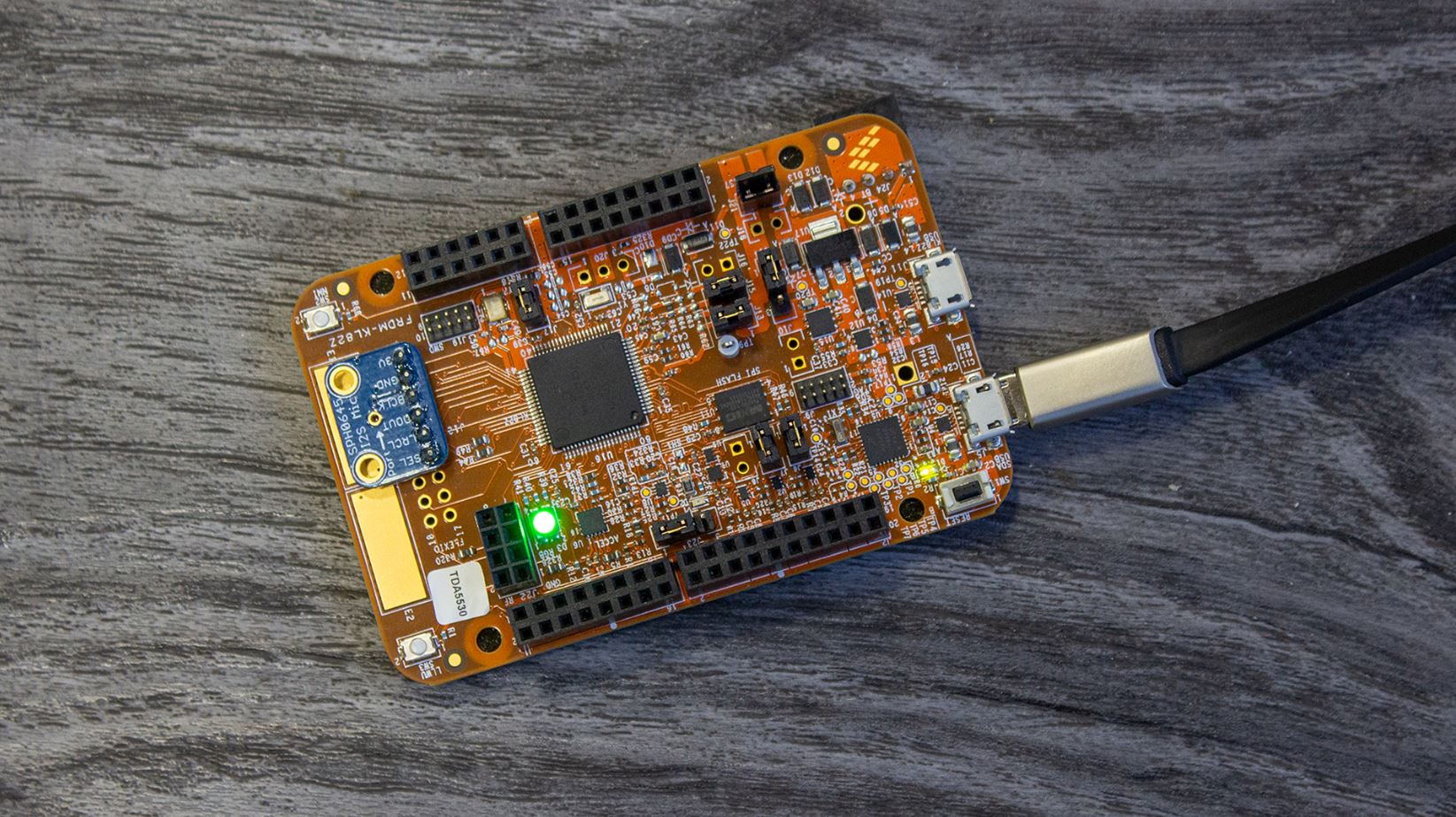

Back at Audio Analytic’s offices in central Cambridge, VP technology Dominic Binks demonstrates one of the company’s latest achievements — AI recognition of a particular sound using a model that fits on an Arm Cortex-M0+ device. In this case, the model predicts when a baby is crying; it runs on an NXP Kinetis KL82.

“With this particular processor, the best way I can describe it is it has literally nothing that helps you do machine learning,” said Binks. The KL82 in fact contains a reasonable amount of Flash (128 kB) and RAM (96 kB), but no DSP or other specialized processing IP. It consumes only a few tens of milliwatts.

Fitting the company’s ai3 software library onto the microcontroller was not an easy task. Binks said that removing functionality aimed at larger systems, such as the ability to record and play back sounds, and things like debug tools, has shrunk down the software considerably. The ‘baby cry’ detection model is also one of the smallest the company has.

Binks also described how the team wrote operations in assembly language to handle 32-bit overflows (the product of multiplying two INT32 numbers, which would otherwise result in a 64-bit number). This reduced the time taken to process each sample of audio to within the required 16 ms window (sampling at 16 kHz and taking 256 samples at a time means 16 milliseconds of audio needs to be processed at time).

“By doing this bit of assembly [code], we got down to typically 11 to 12 milliseconds run time, which gives us some head room in case we get a bit more work to do,” he said, emphasizing audio’s particular requirement for processing bandwidth rather than pure horsepower to keep up with real-time streaming of input data.

Labelled Data

Sound recognition, as opposed to speech recognition, is traditionally rather a complex task for AI. How does Audio Analytic manage to fit their model onto such a tiny device?

“It’s not so hard for us because [our model is] quite small to start with,” said Binks.

Audio Analytic’s sound recognition model, AuditoryNET, is pretty special, in large part down to the high-quality labelled data which is used to train it. Labels are essential: they tell the model which parts of the sound are pertinent, so that the baby cry model doesn’t end up creating features based on the baby’s breath sounds in between cries, for example, rather than the cry sound itself.

“Labels help the machine pick up on only the pertinent parts that correspond to that particular sound,” said Binks. “If you present it with more [than the necessary] data, there is more variability to deal with. What we’ve generally found is that the more precisely we label the data and the more data we give machine learning models [in training], the smaller they get. There’s obviously a limit, but generally speaking, because the models generalize more the more data they see, they get better at identifying what the really salient characteristics of that sound are.”

One way to visualize this concept is via Audio Analytic’s sound map, Alexandria. This diagram is a 2D representation of the 15 million sounds in the company’s audio dataset across all 700 label types in multi-dimensional space. It includes everything from emergency sirens and alarms to vocal noises such as laughs, coughs and sneezes. Classifying a particular sound, Mitchell explains, means drawing some kind of circle around the relevant points in the map.

“The more complicated and disjointed that data is and the more noise it has, the more complex shapes you have to draw, and the more prone to error the system will be. [The model] also ends up being bigger, because you’re adding more parameters to describe a bigger thing,” Mitchell explains. “You want to have a tradeoff between the smallest, most compact, crisp representation of the underlying variability of the sounds you’re capturing — no more, and no less. If 20 percent of your labels are off by 50 milliseconds, then you’ve included a whole bunch of things in the model you shouldn’t have done. So it learned a bunch of unnecessary parameters and from a memory point of view or a resource point of view, it’s doing wasteful work.”

Unlike for speech and language processing models, where large amounts of open-source training data exists, for sound there was no such dataset. Capturing and labeling this training data required a significant investment from Audio Analytic and it therefore represents a significant part of the company’s intellectual property.

The model, AuditoryNET, was also created from scratch by Audio Analytic.

Sound models are fundamentally different from widely available speech and language processing models. Compared to analyzing speech, the physical process by which the sound is produced varies much more. General sounds are also much more unbounded than speech; any sounds can follow any other sequence of sounds. So the pattern recognition problems and resultant machine learning models look quite different to their speech counterparts.

Real-world applications

The implications of embedding sound recognition onto small microcontrollers opens up many possibilities; what was a complex computationally-intense task is now a realistic prospect for smartphones, consumer electronics, earbuds, wearables, appliances, or any product with a microcontroller. Smartphones often have an M4-class processor running wake-word detection which removes that function from the application processor — it’s easy to imagine something similar, but for recognizing a wide variety of sounds, running on an inexpensive M0+ coprocessor, efficient enough for always-on operation.

One of the company’s previous demos, running on an Ambiq Micro processor could run for several years on a pair of AA batteries. This demo used an Ambiq Micro SPOT (subthreshold power optimized technology) processor, based on an ultra-low power implementation of a Cortex-M4 core, plus a Vesper piezoelectric MEMS microphone, which uses no power, even when always listening. This demo proved the ultra-low-power microphone and processor combination could respond quickly enough to detect impulsive sounds such as glass breaking.

Mitchell imagines a world where smart home systems might reduce the sound on a user’s television when a baby cry is detected and instead play live audio from the child’s room, perhaps even inserting a picture-in-picture view from a baby monitor camera system. But there are more subtle use cases too. As well as recognizing specific sounds, ai3 can classify audio environments into different scenes, and then use this information to adjust audio outcomes from consumer devices such as headphones. For example, adjusting EQ settings or boosting active noise cancellation on headphones as a person moves from one scene, perhaps a train station, to a completely different audio environment on a quiet train. This can be combined with reactions to specific sounds, such as activating transparency modes when emergency alarms or sirens are heard.

These types of applications are perfectly feasible today, with the right combination of machine learning model, training data and hardware, of course. Looking towards the future, we might imagine even more complex audio applications for AI, way beyond basic voice control, running on resource-constrained hardware as silicon vendors continue to increase the capabilities of their devices, and tailor them to the needs of machine learning. The overall effect will be to give machines a sense of hearing, enabling them to judge context for themselves more successfully using sounds, and ultimately, become smarter.

Subscribe to Newsletter

Test Qr code text s ss