AV: Are Disengagements the Wrong Metric for Testing?

Article by: Junko Yoshida

Did the autonomous vehicle industry learn the right lesson from the first AV test fatality a year ago?

It's been more than a year since the first fatal accident caused by a self-driving test vehicle in Arizona cast a shadow over this heavily hyped technology. In the fast-moving tech world, the death of Elaine Herzberg, struck down by an Uber autonomous test vehicle, seems like old news, faded from most people’s memory.

But for safety expert Phil Koopman, associate professor at Carnegie Mellon University and co-founder of Edge Case Research, this tragedy has triggered new research, leading him to question whether the companies testing these vehicles are designing an effective safety test platform.

Referring to the post-accident media frenzy and an outbreak of Monday-morning quarterbacking on social media, Koopman specifically asks, “Did we really learn the right lesson?”

At the SAE WCX (World Congress Experience) this week, Koopman presented a paper entitled “Safety Argument Considerations for Public Road Testing of Autonomous Vehicles,” co-authored with Beth Osyk. The paper explores “the factors that affect whether public on-road AV testing will be sufficiently safe.” His goal, according to Koopman, is to provide materials that serve as “a solid starting point for ensuring the safety of on-road test programs for autonomous vehicles.”

That doesn't help

While a lot of ink has been spilled analyzing what went wrong with Uber’s autonomous vehicle, Koopman pinpointed several responses that he deems “not being helpful to improve safety of autonomous vehicle (AV) testing.”

They include:

- The argument that delaying AV deployment is irresponsible and possibly fatal (per the insistence that “deploying AVs before they're perfect will eventually save more lives”)

- Deciding which human was at fault (“Was it the victim at fault or the Uber’s safety driver?”)

- Finding out why autonomy failed

Speaking of the third point, Koopman said, “We all know autonomous vehicles today are not mature. That’s why we are testing. Nobody should be surprised about autonomy failures.”

Building a safety case

While “the proper ratio of simulation, closed course testing, and road testing is a matter of debate,” Koopman acknowledged that “at some point any autonomous vehicle will have to undergo some form of road testing.” Conceding this inevitability, Koopman argues that the AV industry should focus on “how to minimize the chances of putting other road users at risk.”

In his paper, he emphasizes the need for AV test operators to build a safety case, which he describes as “a structured written argument supported by evidence.” Example elements of such an argument include:

- Timely supervisor responses

- Adequate supervisor mitigation

- An appropriate autonomy failure profile

Koopman said that it is critical for AV companies to collect data on the on-road performance of autonomous test vehicles, for several reasons. These include the limits of human alertness (from 15 to 30 minutes) and “supervisor dropout,” the tendency of safety observers to get bored because unsafe events hardly ever happen.

The bigger question about this data collection, however, is: Which data is the right data?

Myth of ‘disengagements’

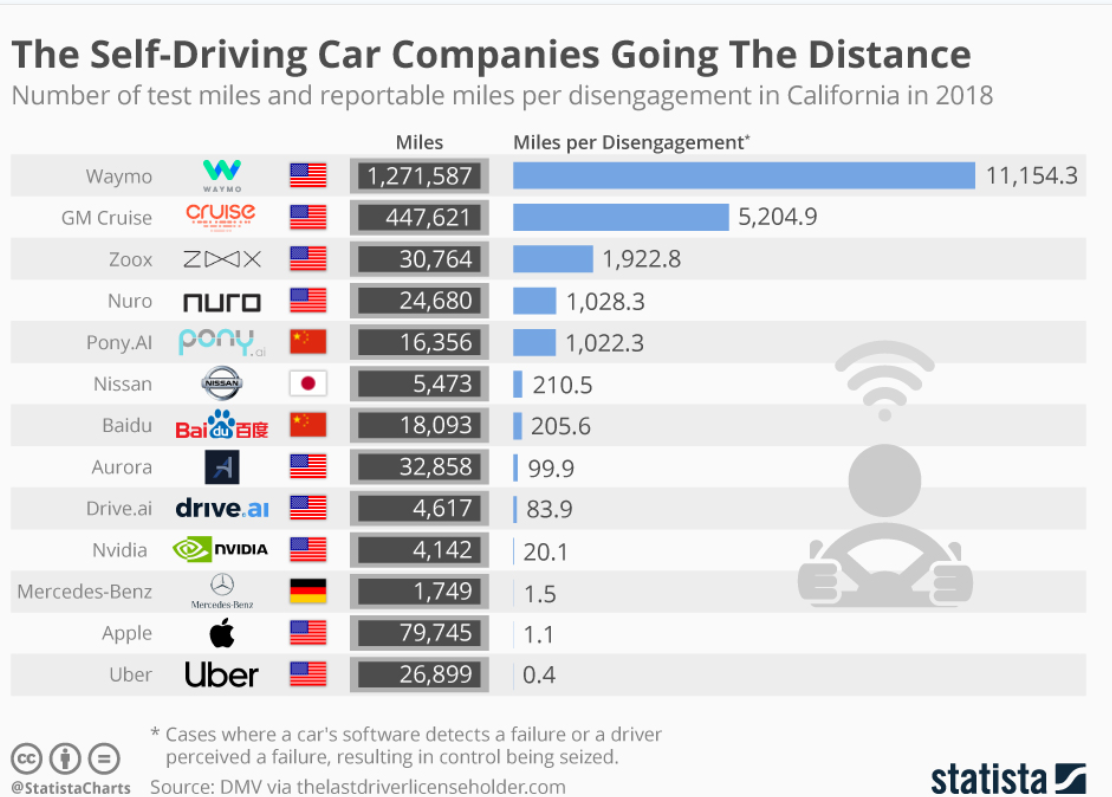

By law, those actively testing self-driving cars on public roads in California are required to disclose the number of miles driven and the frequency with which human drivers were forced to take control, a moment of crisis known as a “disengagement.”

The DMV defines disengagements as “deactivation of the autonomous mode when a failure of the autonomous technology is detected or when the safe operation of the vehicle requires that the autonomous vehicle test driver disengage the autonomous mode and take immediate manual control of the vehicle.”

Koopman flatly states, “Disengagements is the wrong metric for safe testing,” because it tends to subtly encourage test operators to minimize their interventions, which could lead to unsafe testing.
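The incentive is easier to see in the arithmetic behind the headline figure. The sketch below is illustrative only: the function name `miles_per_disengagement` and all of the numbers are hypothetical and are not taken from any DMV report or from Koopman's paper.

```python
def miles_per_disengagement(miles: float, disengagements: int) -> float:
    """Return miles driven per reported disengagement.

    Returns infinity when no disengagements were reported.
    """
    if miles < 0 or disengagements < 0:
        raise ValueError("miles and disengagements must be non-negative")
    if disengagements == 0:
        return float("inf")
    return miles / disengagements


# Hypothetical test programs covering the same distance on the same routes.
# Program A's supervisors take over whenever they are in doubt.
program_a = miles_per_disengagement(10_000, 50)
# Program B's supervisors are discouraged from intervening.
program_b = miles_per_disengagement(10_000, 10)

print(f"Program A: {program_a:.0f} miles per disengagement")
print(f"Program B: {program_b:.0f} miles per disengagement")
```

With these made-up inputs, Program B scores five times better (1,000 versus 200 miles per disengagement), yet the figure says nothing about whether the interventions it skipped were false alarms or near misses.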

The reality today is a dearth of available data generated by autonomous vehicle testing. As Koopman wrote, “Current metrics published for autonomous vehicle testing largely deal with the logistics of testing, such as number of vehicles deployed, and miles driven. The most widely reported statistical data is so-called disengagement reports, which are not a sufficient basis for establishing safety.”

Today, disengagement data is often cited by the media and self-promoting AV companies as a metric to judge the maturity of AVs in what is typically seen as an autonomy horse race.

This approach is misleading because, in any serious effort to build a safer AV, disengagement data must be a metric for improving the technology, not for touting victory in a safety contest, Koopman told EE Times. “Early on in testing, the more disengagements you have, the better off you are, because they can identify design defects.”

Disengagements, whether a full-blown crisis or just a blip, can point to either a machine mistake or a human oversight, Koopman said. In other words, you want to treat every incident, mishap and near miss as a failure in the test-program safety process. “It is crucial to identify and fix the root cause of all safety problems beyond addressing any superficial symptoms,” Koopman wrote in the paper.

*Miles Per Disengagement (Source: Statista)*

Human factor

Finally, it’s important to take the human factor into serious consideration.

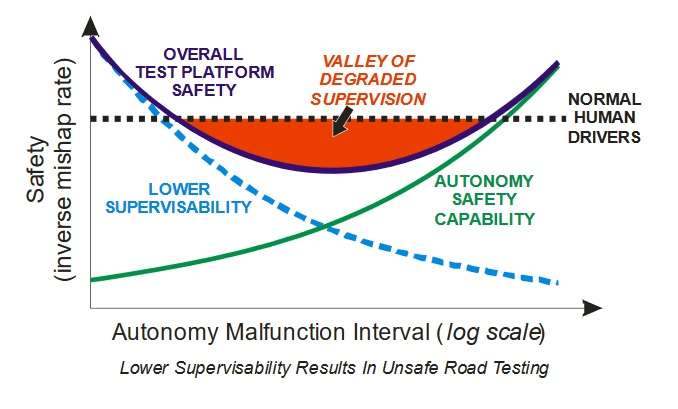

Consider an AV test drive along a familiar route in consistent driving conditions, Koopman suggested.

Over time, the driver, attempting to minimize false-alarm disengagements, will learn the general behavior of the vehicle and intervene only when something unusual occurs or there is clear, imminent danger. As normal as this might be as human behavior, there is risk involved, Koopman noted. “The supervisor might not have an accurate model of what is really going on inside the autonomy system.” The human in the equation cannot diagnose “latent faults” in the system “that just haven’t been activated yet.” A blip that doesn’t blossom into a crisis this time can go unnoticed.

*Valley of Degraded Supervision (Source: Phil Koopman)*

In short, let’s face it, humans can’t supervise autonomy very well.

Certainly, it is unrealistic to assume that even well-trained supervisors will be completely engaged 100 percent of the time simply because they have been instructed to remain alert. “A credible safety argument must allow for and mitigate the possibility of supervisors becoming disengaged, distracted, or even potentially falling asleep during testing sessions,” Koopman noted. Imagine the disaster that could follow if autonomy is designed to leave no margin for error.

Looking back on the Uber accident, the questions the public should be posing to AV companies are not about how soon Level 4 AVs will reach the commercial market. The public should instead demand to know how strong, or weak, a safety case these tireless AV promoters have been able to make for their on-road testing.
