An FPGA array of many sizes

Article By : Vivek Nanda

Start-up Flex Logix is offering the ability to embed hardened logic and DSP cores in arrays that are available in nearly 50 different sizes.

« Previously: Harvard embeds Flex Logix FPGA in deep-learning SoCs

Flex Logix Technologies Inc. provides an EFLX-2.5K Logic IP core and EFLX-2.5K DSP IP core, which are building blocks for nearly 50 different-sized arrays. A licensing company would mix-and-match the logic and DSP cores depending on their need.

The EFLX-2.5K Logic IP core has 2,520 LUTs, 632 inputs and 632 output and is a complete embedded FPGA. The EFLX-2.5K core can be tiled to make larger arrays as required. The EFLX2.5K DSP core is interchangeable in EFLX arrays with the Logic IP core: the EFLX-2.5K DSP core has 40 MACs (pre-adder, 22-bit multiplier and 48-bit accumulator), which are pipelineable; the number of LUTs is 1,880.

The TSMC 16FFC/FF+ implementation offers multiple enhancements: 6-input LUTs for more logic and fewer stages leading to faster clock rates; an enhanced interconnect for more performance especially for large arrays; multiple DFT features for increased fault coverage, faster test time and increased reliability.

Figure 1: Flex Logix's array comprises logic blocks of 100 or 2.5K sizes. Logic and DSP blocks can be mixed in arrays of various sizes as well as shape. (Source: Flex Logix presentation to EE Times Asia.)

EFLX is available in two core sizes (-100 and -2.5K) today on multiple mainstream foundry processes: TSMC40ULP, TSMC28HPM/HPC and TSMC16FF+/FFC. EFLX can also be ported to any proprietary CMOS process for organisations with their own fabs.

"The FPGA that we provide is a hard IP core that drops inside a chip. We support TSMC 40nm, 28nm and 16nm and we can supply any size FPGA that the customer wants, said Geoff Tate, CEO and co-founder of Flex Logix.

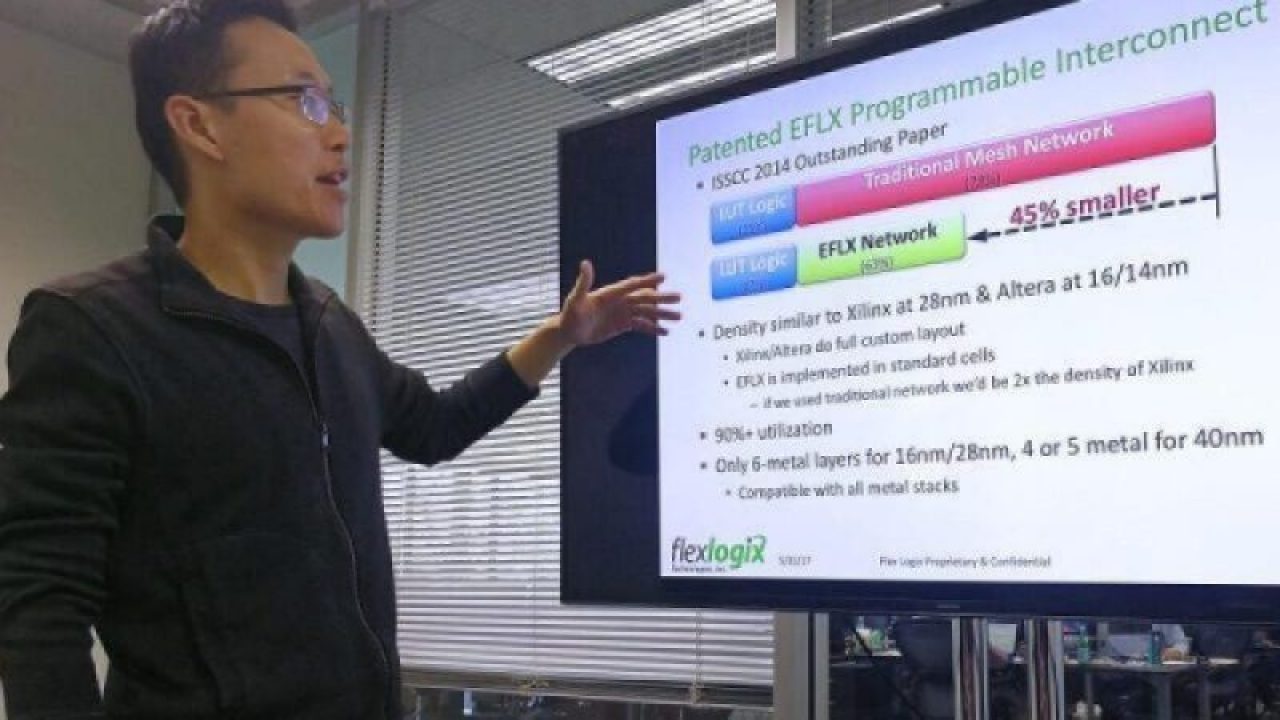

Breakthrough interconnect technology

Flex Logix utilises a new breakthrough interconnect architecture: less than half the silicon area of traditional mesh interconnect, fewer metal layers, higher utilisation and higher performance, the company said. In a traditional FPGA architecture, interconnects dominate. Talking to EE Times Asia, Cheng Wang, Senior VP Engineering (pictured above explaining us their interconnect architecture), said, "About 75% to 80% are switches that connect the logic—these logic [blocks] are like look-up tables [LUT] and so on. If you look into the interconnect architecture of Xilinx or Altera, it is generally a mesh-based interconnect. A mesh network has its advantages. It's very big [in] performance and it's area is reasonable for a smaller scale networks.

"When Xilinx and Altera started, they were in 64 LUTs and 128 LUTs. Now you are in the millions of LUT range. Over the years, they have optimised their mesh networks but a mesh network, in my opinion, is not designed for something that's got millions of nodes in it. When you have millions of nodes to connect, you have to go with something that's more hierarchical. Think about a radix butterfly.

"One of the fundamental benefits of a hierarchical network is for N nodes, where each node can be a look-up table, you need of the order of N x logN number of switches. If you are looking at a mesh network, to get the same amount of throughput you are going to need N²," he said. To take a more detailed look, see Cheng's PhD paper here.

Figure 2: The end-result of using a hierarchical network is that Flex Logix saves on space. (Source: Flex Logix presentation to EE Times Asia.)

Cheng added that over the years people have improved their mesh networks and the interconnect complexity is not N² (otherwise you couldn't implement any of today's FPGAs). "But if you were to optimise a mesh network versus optimising a hierarchical network, you will still see that this [hierarchical] will result in a much smaller area," he explained. He added that a hierarchical network, however, has a throughput limitation and when you map your design on to an FPGA you would generally get a lower performance versus putting it on a mesh-style FPGA.

Figure 3: Cheng moves RAM closer to the logic blocks.. (Source: Flex Logix presentation to EE Times Asia.)

"A lot of the research I have done was to make a hierarchical network on an FPGA ara efficient but without the performance penalty," said Cheng. "That's how we can get performance close to a Xilinx, Altera FPGA without having as much area." According to him, when you are integrating an FPGA into your silicon, the area of the IP is important. Cheng said his approach also saves on the number of nets as well, which reduces the amount of routing needed.

Subscribe to Newsletter

Test Qr code text s ss