AI Industry in Dire Need of Debiasing Tools

Article By : Ann R. Thryft

The issue of fairness didn't really come up until AI systems started being deployed, which, despite a long history of development, began only recently.

There are plenty of guidelines and best practices for defining AI fairness and addressing bias, as we describe in a companion article, Who Should Define 'AI Fairness'? There are few tools, however, that design engineers can use to detect and correct bias in algorithms or datasets. Such tools are lacking both for engineers developing their own products and for customers who want to optimize third-party AI systems.

Only a few technology firms have announced open-source debiasing tools for developers, but some university researchers have created various types of debiasing tools, as we report in another companion article, Q&A: AI Researchers Answer 5 Questions. In addition, some major financial management firms like KPMG and CapGemini are designing their own comprehensive, enterprise-scale AI management solutions for their clients. These frameworks and tool suites sometimes include tools for engineers.

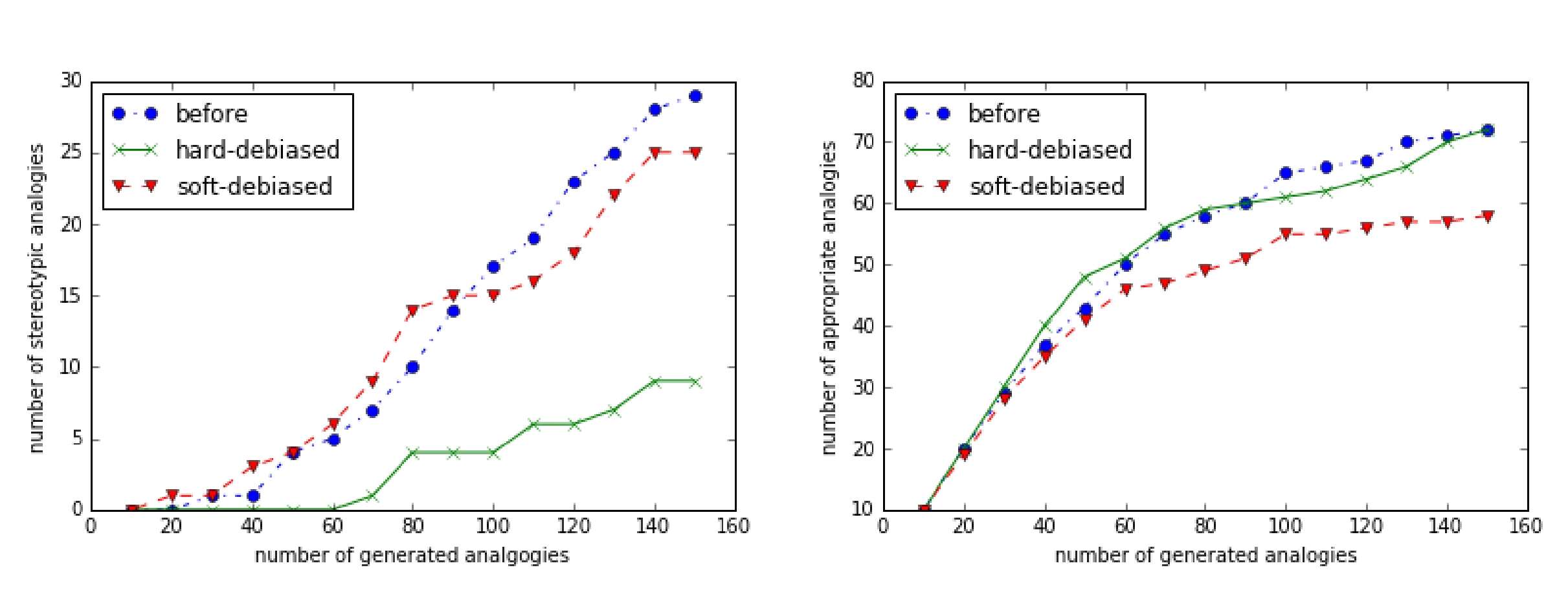

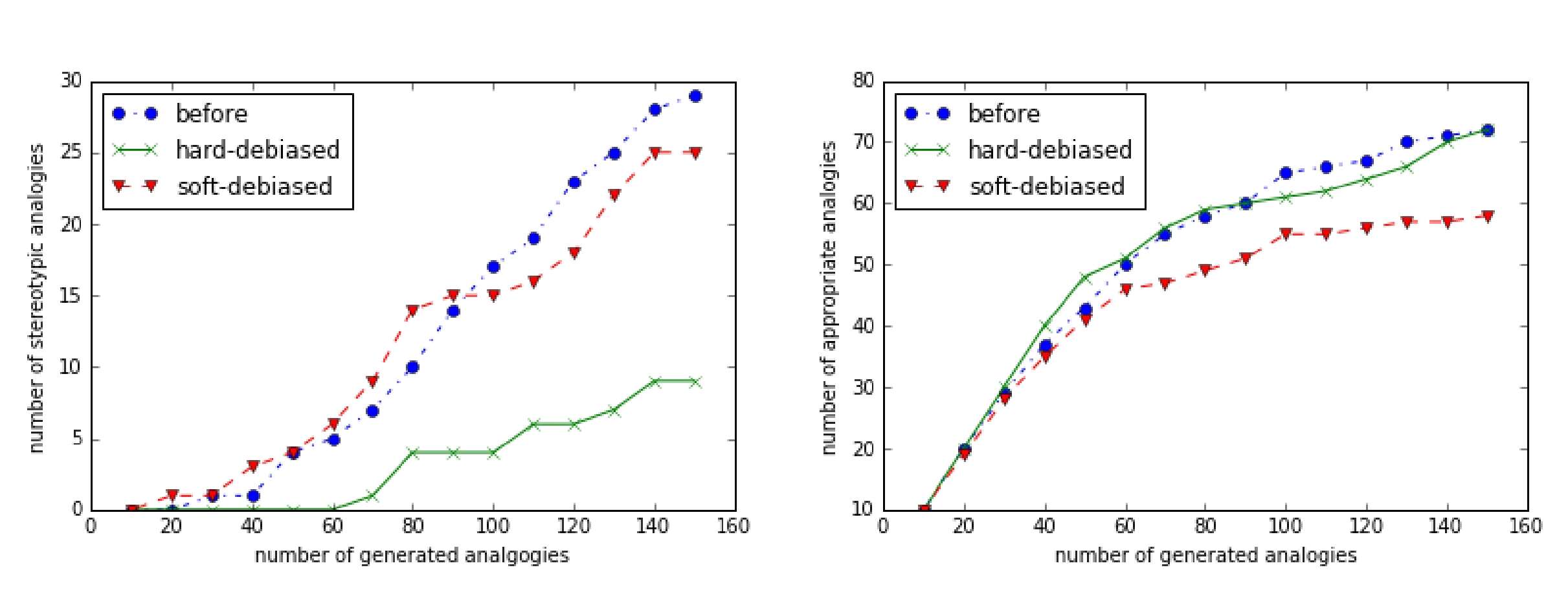

To reduce bias in word embeddings, a debiasing algorithm is used to remove gender associations in the embeddings of gender-neutral words. Shown here are the numbers of stereotypical (left) and appropriate (right) analogies generated by word embeddings before and after debiasing. The algorithms were also evaluated to ensure they preserved the desirable properties of the original embedding while reducing both direct and indirect gender biases.
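One way to picture "removing gender associations" is as a projection: estimate a gender direction from a definitional pair such as "he" and "she," then subtract each gender-neutral word's component along that direction. The sketch below assumes this projection-style "neutralize" step, which the caption does not actually specify; the three-dimensional vectors and the helper names are invented for illustration.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]

def neutralize(word_vec, direction):
    """Remove the component of word_vec that lies along the unit vector `direction`."""
    scale = dot(word_vec, direction)
    return [w - scale * d for w, d in zip(word_vec, direction)]

# Toy 3-dimensional embeddings, invented for illustration only.
embeddings = {
    "he": [1.0, 0.2, 0.1],
    "she": [-1.0, 0.2, 0.1],
    "engineer": [0.4, 0.9, 0.3],
}

# Assumed gender direction: the normalized difference of one definitional pair.
gender_direction = normalize(
    [a - b for a, b in zip(embeddings["he"], embeddings["she"])]
)

before = dot(embeddings["engineer"], gender_direction)
debiased = neutralize(embeddings["engineer"], gender_direction)
after = dot(debiased, gender_direction)

print("gender component before:", before)
print("gender component after:", after)
```

Real embeddings have hundreds of dimensions, and a gender direction is usually estimated from many word pairs rather than one, but the projection step itself works the same way.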

Some tools are appearing in specific industries that have either experienced high-profile machine-learning-gone-bad horror stories or are at risk for them. These include financial services such as credit scoring, credit risk, and lending (as we detail in another article, Reducing Bias in AI Models for Credit and Loan Decisions), as well as hiring. There are also debiasing and auditing services available (see the "Methodologies, Frameworks…" box at the bottom of this page).

The first rudimentary AIs date back to the 1950s. One might have expected the issue of fairness to have come up before now, but questions of fairness tend not to get raised until someone is treated unfairly, and even with all that history, the deployment of AI systems is still new. "So now we're learning things we didn't even envision before," said Aleksandra Mojsilović, IBM fellow, head of AI Foundations and co-director of IBM Science for Social Good. "We're in a fact-based learning period."

Defining what 'AI' is can also be problematic: the line between AI on one hand and data science or data mining on the other is blurred, because much of the latter has been repackaged and advertised as AI.

Implementing AI fairness and figuring out how that applies to the product you're developing is complex: it requires being in the mind of both the practitioner and the user, said Mojsilović. Developers need to understand what fairness means, keep it in mind, and imagine how their product might impact users positively or negatively.

That underlines why diversity is so important. "Suppose you're building a product and its model. You must check the data for balances and imbalances," she said. "Or, say you find out the data was collected inappropriately for the problem you're trying to solve. You may need to check the model to make sure it's fair and well balanced. Then, you put the algorithm into production and maybe the model sees users it did not see during its training phase. You have to check fairness then, too. Checking fairness and mitigation has to happen throughout the lifecycle of the model."

Fairness checking and mitigation tools will be both commercial and open-source, said Mojsilović. "Both are equally important, and they will play equally going forward." Eventually, such tools will be used in more sophisticated systems, so some will be vertical, such as industry-specific or user group-specific. Hybrid open-source/commercial tool systems will also be used.

New technology is often used first in industries where laws and regulations have the greatest implications. So it's not surprising to see many fairness and mitigation tools being developed in finance, where many decisions made by humans and AIs are heavily scrutinized, said Mojsilović. These tools will also benefit the legal professions and credit scoring, other areas where the cost of errors is large.

Methodologies, Frameworks and Services for Debiasing AI

Some financial management firms have designed their own AI management frameworks and tool suites for their clients that sometimes include tools for engineers. We also list some suggested methodologies that are more specific than guidelines, as well as debiasing and audit services.

Methodologies

Enterprise-scale AI management frameworks

- CapGemini – Perform AI solutions portfolio for manufacturing and other sectors addresses ethics concerns, and includes an AI Engineering module for delivering trusted AI solutions in production and at scale.

- KPMG – AI in Control framework includes a Technology management component. “Guardians of Trust” white paper discusses AI fairness and ethics in context of the wider issues of corporate-wide trust and governance in analytics.

- Accenture – AI Fairness Tool lets clients assess algorithm models and underlying data to correct for bias in both in-house developed AI and third-party solutions. “Teach and Test Tool” helps clients validate and test their AI systems, including model debugging, so they act responsibly.

Debiasing and Algorithmic Auditing Services

- O’Neil Risk Consulting & Algorithmic Auditing – Headed by Cathy O’Neil.

- Alegion – Prepares training data by converting data into large-scale training datasets for enterprise-level AI and machine learning, and validates models, via a human-assisted training data platform, to eliminate bias.

Shopping for Tools

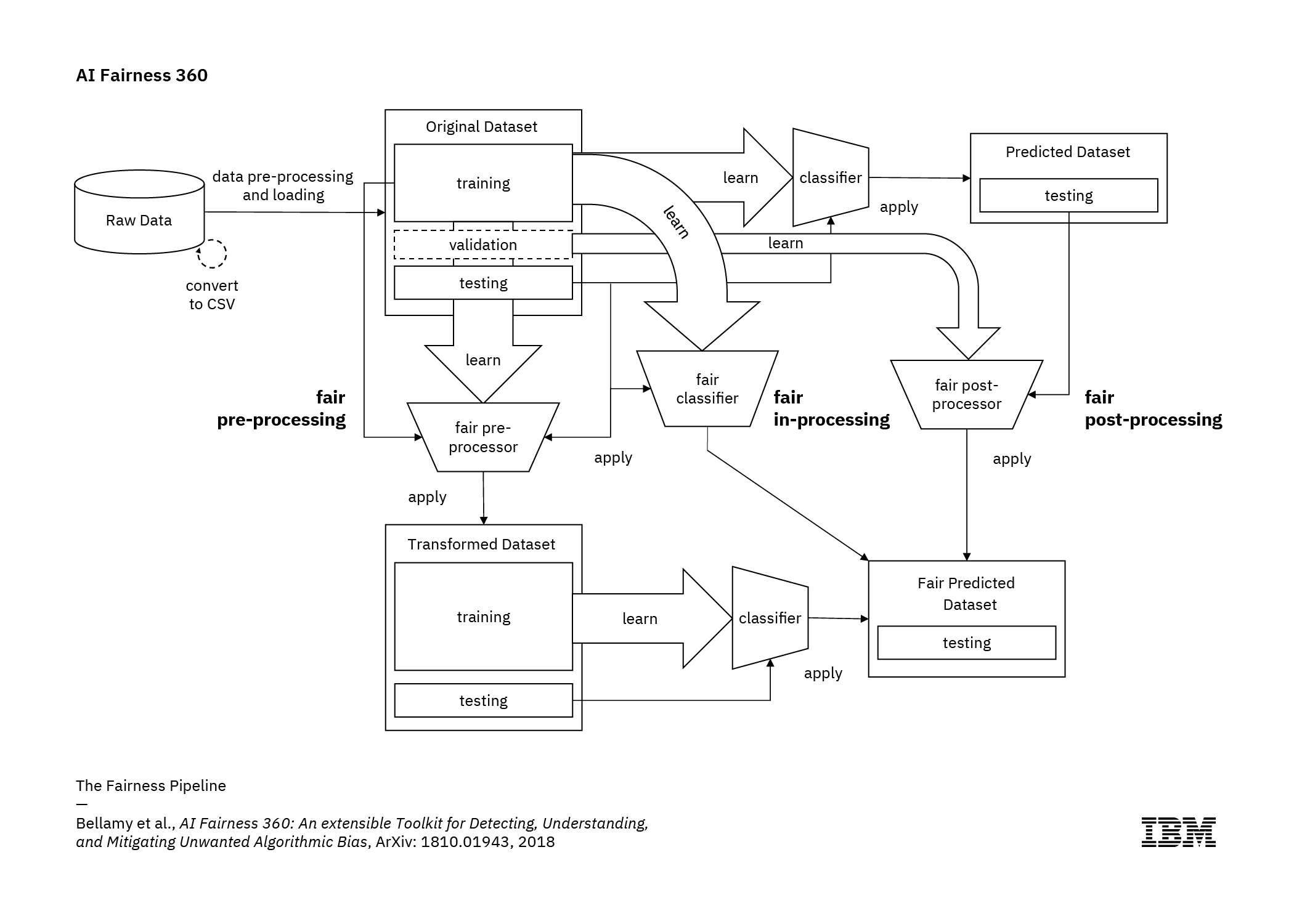

The most comprehensive set of developer tools right now is probably IBM’s AI Fairness 360 Open Source Toolkit – which can be used with various training data sets – and its accompanying technology.

"All these additional things like fairness checking are an enormous burden on programmers, data scientists, and developers," said Mojsilović. "So, it's one thing to know what trust means, and another to develop automated tools that can put these components into practice in a tool-friendly way."

The company has implemented these same algorithms in its own production systems so clients can use them, as well. Mojsilović noted that the problems are complex, and there will have to be an evolution of trust and trust tools in the industry.

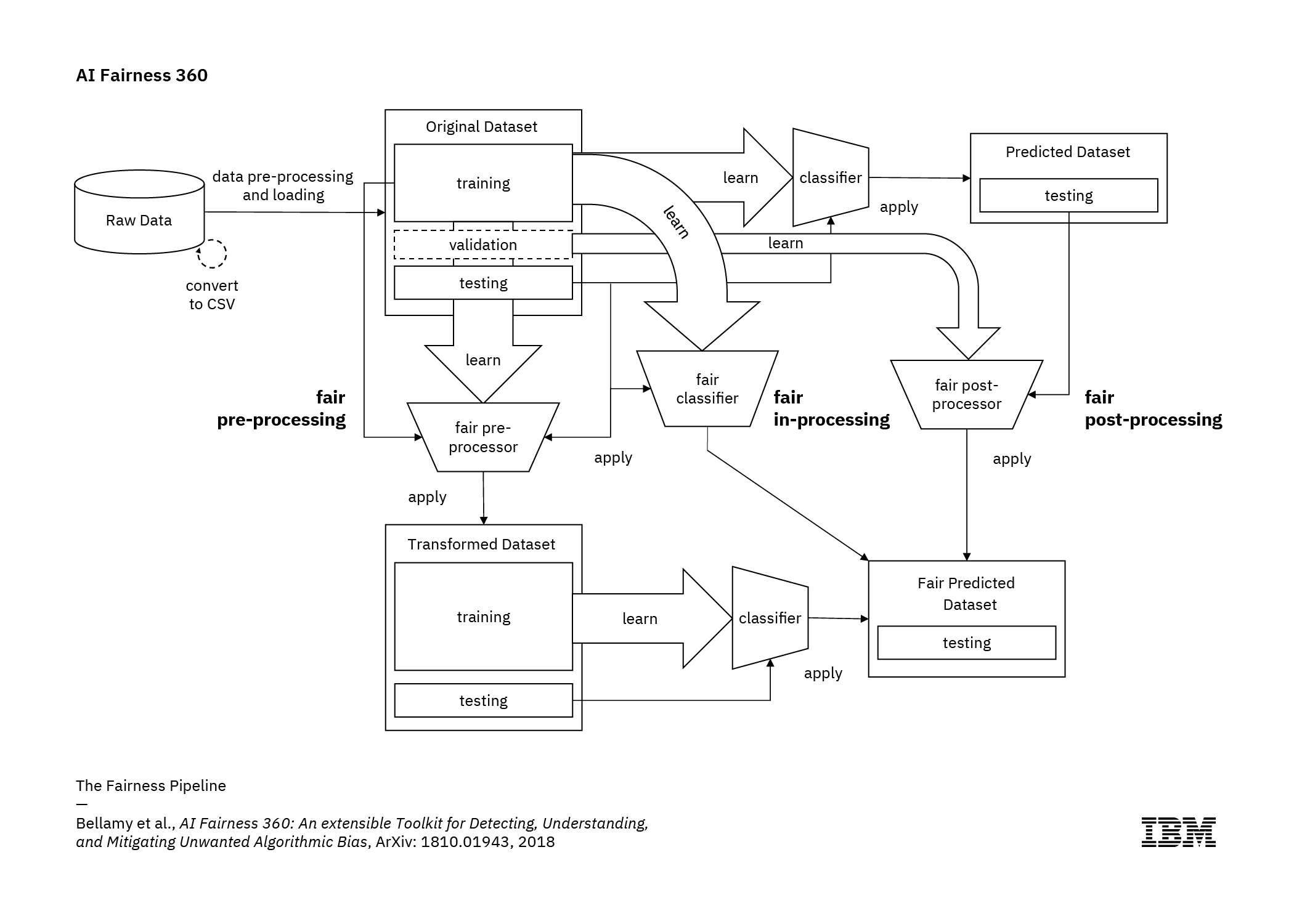

An example instantiation of this generic pipeline consists of loading data into a dataset object, transforming it into a fairer dataset using a fair pre-processing algorithm, learning a classifier from this transformed dataset, and obtaining predictions from this classifier. Metrics can be calculated on the original, transformed, and predicted datasets as well as between the transformed and predicted datasets.
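The first half of that pipeline can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not IBM's code: it swaps in reweighing, a well-known pre-processing technique that assigns each record a weight so that group membership and outcome become statistically independent, and it measures statistical parity difference before and after. The toy records and all function names are invented for this example, and the classifier step is omitted; in practice, the weights would be handed to a learner that accepts per-sample weights.

```python
from collections import Counter

# Each record is (group, label): group 1 = privileged, 0 = unprivileged;
# label 1 = favorable outcome. Toy data invented for illustration.
records = [(1, 1)] * 6 + [(1, 0)] * 4 + [(0, 1)] * 3 + [(0, 0)] * 7

def favorable_rate(records, weights, group):
    """Weighted share of favorable outcomes within one group."""
    total = sum(w for (g, _), w in zip(records, weights) if g == group)
    favorable = sum(
        w for (g, y), w in zip(records, weights) if g == group and y == 1
    )
    return favorable / total

def statistical_parity_difference(records, weights):
    """Unprivileged favorable rate minus privileged favorable rate (0 = parity)."""
    return favorable_rate(records, weights, 0) - favorable_rate(records, weights, 1)

def reweigh(records):
    """Weight each record by P(group) * P(label) / P(group, label)."""
    n = len(records)
    group_counts = Counter(g for g, _ in records)
    label_counts = Counter(y for _, y in records)
    pair_counts = Counter(records)
    return [
        group_counts[g] * label_counts[y] / (n * pair_counts[(g, y)])
        for g, y in records
    ]

# Metric on the original dataset (every record weighted equally).
uniform = [1.0] * len(records)
spd_original = statistical_parity_difference(records, uniform)

# Metric on the transformed (reweighed) dataset.
weights = reweigh(records)
spd_transformed = statistical_parity_difference(records, weights)

print("statistical parity difference, original:", spd_original)
print("statistical parity difference, reweighed:", spd_transformed)
```

Because the records themselves are unchanged and only their weights differ, this kind of transformation leaves the features and labels intact for whatever classifier comes next.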

It's no secret that eliminating bias can mean different things mathematically, and the process can also affect accuracy. One of the most comprehensive articulations of these problems is given by Michael Kearns, University of Pennsylvania professor of management and technology, computer and information science department. In his talk, Fair Algorithms for Machine Learning, given at the Plenary Session of the 2017 ACM Conference on Economics and Computation, Kearns discusses various ways of approaching the math of algorithmic fairness and how it interacts with accuracy.
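A small worked example makes the tension concrete. The sketch below uses two definitions that are common in the fairness literature, statistical parity (equal selection rates across groups) and equal opportunity (equal true-positive rates across groups); these are not necessarily the formulations Kearns uses. The two groups, their differing base rates, and all function names are invented for illustration.

```python
# Each row is (group, true_label, predicted_label). Toy data, invented.

def rate(values):
    """Fraction of 1s in a list of 0/1 values."""
    return sum(values) / len(values)

def selection_rate(rows, group):
    """Share of a group that receives the favorable prediction."""
    return rate([p for g, _, p in rows if g == group])

def true_positive_rate(rows, group):
    """Share of a group's truly qualified members who are predicted favorable."""
    return rate([p for g, y, p in rows if g == group and y == 1])

def accuracy(rows):
    """Share of all predictions that match the true label."""
    return rate([1 if y == p else 0 for _, y, p in rows])

def parity_gap(rows):
    """Statistical parity gap: difference in selection rates (A minus B)."""
    return selection_rate(rows, "A") - selection_rate(rows, "B")

def opportunity_gap(rows):
    """Equal opportunity gap: difference in true-positive rates (A minus B)."""
    return true_positive_rate(rows, "A") - true_positive_rate(rows, "B")

# Group A: 6 of 10 qualified. Group B: 3 of 10 qualified.
# A perfectly accurate classifier predicts every label correctly.
perfect = (
    [("A", 1, 1)] * 6 + [("A", 0, 0)] * 4
    + [("B", 1, 1)] * 3 + [("B", 0, 0)] * 7
)

# Force equal selection rates by rejecting three qualified members of group A.
parity_forced = (
    [("A", 1, 1)] * 3 + [("A", 1, 0)] * 3 + [("A", 0, 0)] * 4
    + [("B", 1, 1)] * 3 + [("B", 0, 0)] * 7
)

for name, rows in [("perfect", perfect), ("parity-forced", parity_forced)]:
    print(name, parity_gap(rows), opportunity_gap(rows), accuracy(rows))
```

With different base rates in the two groups, the perfectly accurate classifier satisfies equal opportunity but not statistical parity. Forcing parity in this particular way closes the selection-rate gap but opens a true-positive-rate gap and lowers accuracy, which is the kind of trade-off the paragraph above describes.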

Controlling AI Fairness at the Enterprise Scale

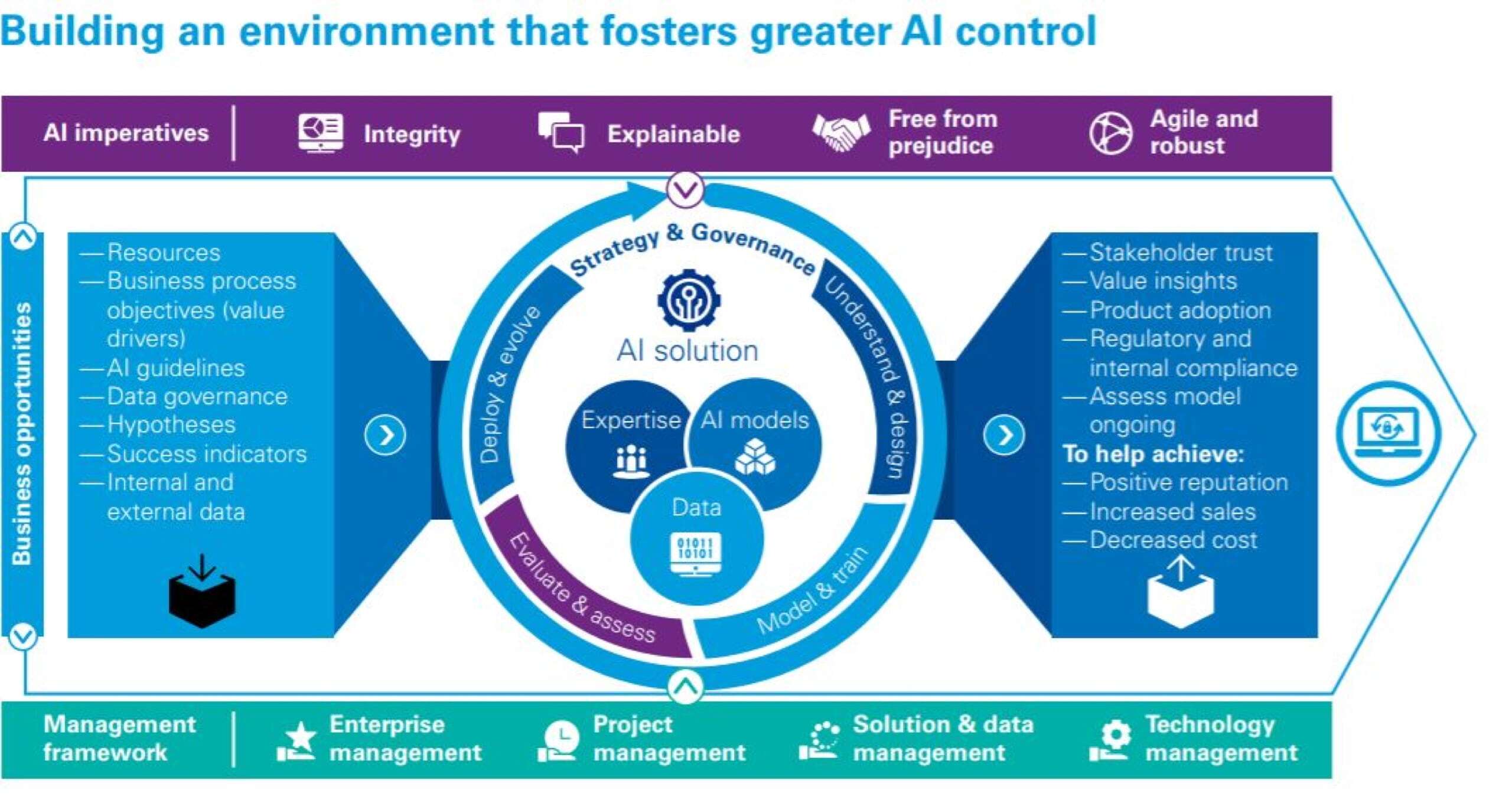

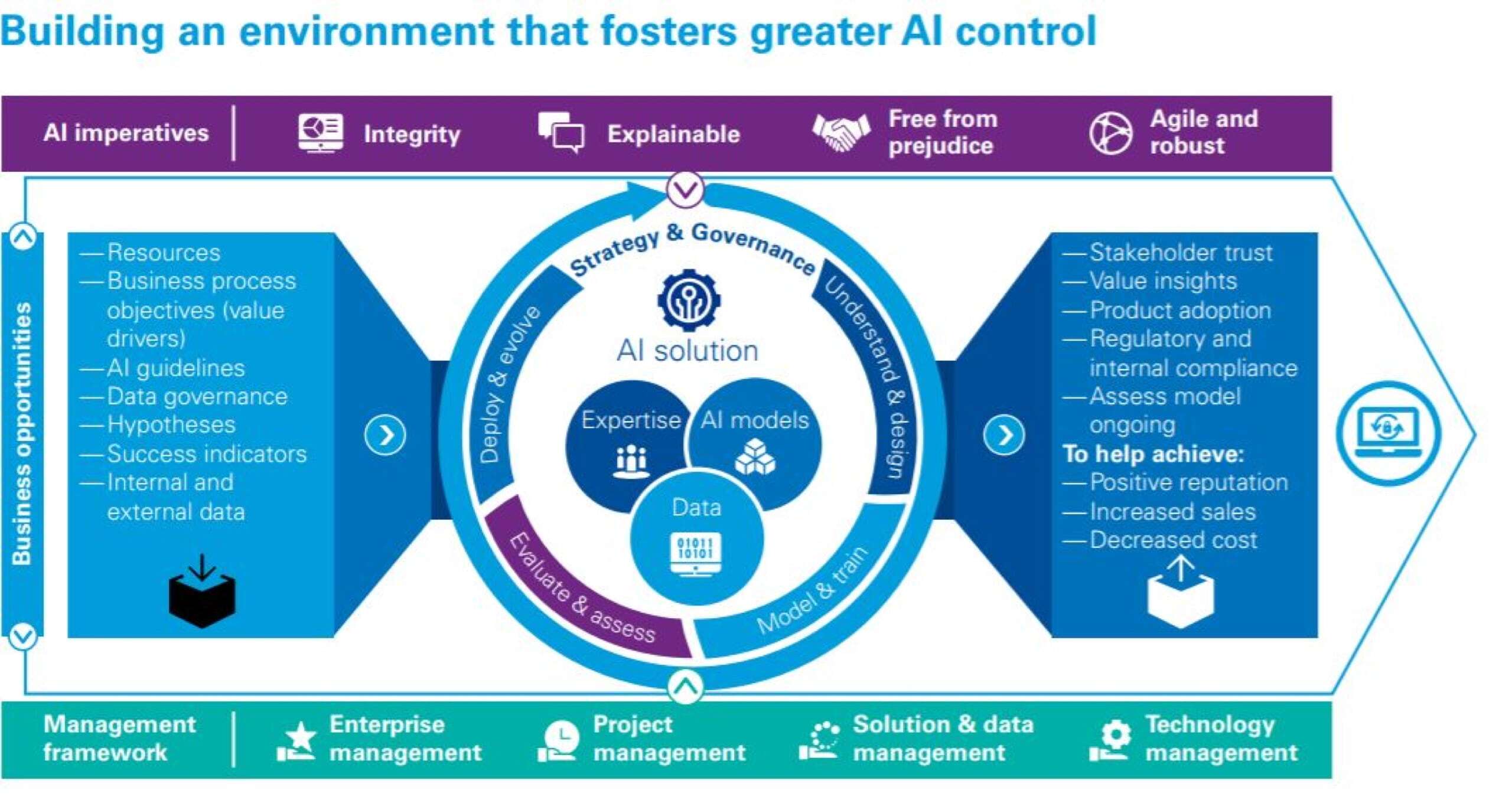

In February, KPMG launched its AI in Control framework to help businesses responsibly manage AI technologies they develop, and provide continuous control of evolving AI applications. The set of methods, tools, and recommended controls is designed to help organizations realize value from AI technologies while achieving objectives like algorithm integrity, explainability, fairness and agility.

Taking a look at what the firm did to construct this comprehensive, thoroughly thought-out, enterprise-scale solution is enlightening; managing and controlling AI technology and its application to ensure fairness and other aspects of trustworthy AI is not a simple operation. This perspective also gives a larger context for what developers’ tools are trying to accomplish, and the landscape within which they must work.

Since there's no standard today for auditing algorithms, the governance of AI can be compared in some respects to the governance used for financial reporting. But autonomy adds a new variable. "How do you make sure your decision-making isn't being impacted by an autonomous algorithm, or at least if it is being impacted, do you know how and why?" said Sander Klous, global lead of KPMG's AI in Control, a partner with KPMG in the Netherlands, and professor of big data ecosystems at the University of Amsterdam. "A similar approach can be used for independent parties to verify that the organization that created the algorithm is trusted."

While AI governance is key, users can't approach AI applications with only traditional methods for governance or control testing, said Martin Sokalski, KPMG Global Emerging Technology Risk network leader and a principal with KPMG in the US. "Instead, there must also be a mechanism for using tooling to govern, evaluate, and monitor AI."

To build their solution, KPMG used the three-lines-of-defense framework well known in financial audits and risk management, said Klous. Data engineers and data scientists develop algorithms at the operational level. Before an algorithm goes live, certain controls must be in place, which requires peer review. A layer above this is risk management for running the algorithm. A third layer verifies that all of the controls have been designed and implemented properly and reviews what effects they have on the operation.

Over the last year, KPMG identified about 150 controls that can be expected in such a framework, according to Klous. Not all of them are needed for every situation or every algorithm, so users make a map of the process specifying what controls and procedures are needed for each situation and algorithm. These procedures can be quite different from financial statement audits.

"For example, before the algorithm goes live, it goes through an A/B test, a type of control you wouldn't have in auditing financial statements," Klous said. "Given the highly agile nature of algorithm development, there's also a need for all kinds of technology you can use to identify risks, such as continuous monitoring."

AI in Control works both with algorithms deployed within an engineer's company, which the engineer may have developed, and with algorithms the engineer develops for products sold to outside customers, said Sokalski. The threshold for the required level of controls will vary by the individual AI use case, since not every AI is created equal. "We would expect to see a different controls profile applied to a predictive maintenance solution than for medical, lending, or recruiting AI-enabled applications," he said.

The Tools in the Toolbox

Several existing and emerging tools in the market help supplement and automate parts of the AI in Control delivery methodology and framework. "Our toolbox consists of a variety of tools – some commercial, some open source, some internally developed – that help achieve responsible adoption of AI," said Sokalski. "We use these tools to evaluate, visualize, and solve for, 'by design', the inherent risks of AI, including integrity, explainability, fairness, and resilience."

One commercial tool in that toolbox is IBM Watson OpenScale, which lets users evaluate AI models for bias and explainability across a broad landscape of AI platforms and providers.

Klous emphasized that several solutions do exist to deal with the challenges of fairness and bias assessments. Once these methods have been defined, they can be embedded in controls and continuously monitored with tools. "This is a necessary step needed in the case of AI due to the dynamic nature of algorithms, but it is not the hardest part," he said. "In all of these solutions the hard part is still done by human beings."

The position that needs to be taken with AI is that it won't always be perfect, and there will still be mistakes. "So the question is, how do we build a system with controls so we can identify and monitor it when something goes wrong?" said Klous. This would be a better way to manage expectations. Organizations often define AI too narrowly, and such narrow definitions can be problematic when identifying risks. "In my opinion, the impact of an algorithm failure is a more important characteristic than the underlying technology," he said.

Tools for Debiasing AI

There aren’t a lot of debiasing and auditing tools yet for developers to use. Here’s a list of what we’ve found from industry and academia, in addition to some industry-specific tools.

Tools

Google:

IBM:

- AI Fairness 360 – Open source toolkit for AI fairness curates advanced algorithms for detecting and eliminating different kinds of bias that can corrupt AI systems.

- Diversity in Faces – Dataset of annotations for 1M face images for advancing the study of fairness and accuracy in facial recognition technology.

- Watson OpenScale – IBM built bias detection technology into Watson OpenScale so clients can perform bias checking and mitigation in real time when AI is making its decisions.

- FactSheets for AI – These are like nutrition labels for AI, providing key details like how the model was trained, what performance levels were achieved, whether or not the model was biased, or other important metrics.

Massachusetts Institute of Technology Computer Science and Artificial Intelligence Lab (CSAIL):

- Amini, Alexander et al. “Uncovering and Mitigating Algorithmic Bias through Learned Latent Structure.” AAAI/ACM Conference on AI Ethics and Society. 2019. – This paper describes a novel, tunable debiasing algorithm that adjusts the respective sampling probabilities of individual data points during training. The approach reduces hidden biases in training data and can be scaled to large datasets. A concrete algorithm for debiasing and an open source implementation of the model are provided.

Stanford Human-Centered Artificial Intelligence Institute:

Industry-specific tools

- ZestFinance – ZAML Fair tool to reduce bias in AI-powered credit scoring models is part of the company’s main ZAML platform, based on game theory.

- Pymetrics – Cloud-based human resources assessment tools help employers find candidates who best fit their needs, while reducing gender and racial biases. The company has open-sourced its Audit-AI algorithm bias detection tool.
