ADAS: Key Trends on ‘Perception’

Article by: Junko Yoshida

The automotive industry is still on the hunt for "robust perception" that works under any conditions, including night, fog, rain, snow and black ice. The view from AutoSens 2019.

The upshot of AutoSens 2019, held here last week, was that there’s no shortage of technology innovation, as tech developers, Tier 1s and OEMs are still on the hunt for “robust perception” that can work under any road conditions, including night, fog, rain, snow, black ice, oil and others.

Although the automotive industry has yet to find a single silver bullet, numerous companies pitched their new perception technologies and product concepts.

At this year’s AutoSens in Brussels, assisted driving (ADAS), rather than autonomous vehicles (AV), was in sharper focus.

Clearly the engineering community is no longer in denial. Many acknowledge that a big gap exists between what’s possible today and the eventual launch of commercial artificial intelligence-driven autonomous vehicles — with no human drivers in the loop.

Just to be clear, nobody is saying self-driving cars are impossible. However, Phil Magney, founder and principal at VSI Labs, predicted that “Level 4 [autonomous vehicles] will be rolled out within a highly restricted operational design domain, [and built on] very comprehensive and thorough safety case.” By “a highly restricted ODD,” Magney said, “I mean specific road, specific lane, specific operation hours, specific weather conditions, specific times of day, specific pick-up and drop-off points, etc.”
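To make concrete how narrow such an ODD is, the restrictions Magney lists can be written down as an explicit gate that a trip must pass in full. The Python sketch below is only an illustration: the class name, field names and all values (road, lane, hours, weather, stops) are hypothetical and do not come from VSI Labs or any deployed system.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical ODD description; every value used below is made up."""
    allowed_roads: frozenset
    allowed_lanes: frozenset
    start: time
    end: time
    allowed_weather: frozenset
    stops: frozenset

    def permits(self, road, lane, now, weather, pickup, dropoff):
        """Return True only if every restriction is satisfied at once."""
        return (
            road in self.allowed_roads
            and lane in self.allowed_lanes
            and self.start <= now <= self.end
            and weather in self.allowed_weather
            and pickup in self.stops
            and dropoff in self.stops
        )


# Illustrative ODD for an imaginary daytime shuttle on a single loop road.
shuttle_odd = OperationalDesignDomain(
    allowed_roads=frozenset({"campus_loop"}),
    allowed_lanes=frozenset({"right"}),
    start=time(9, 0),
    end=time(17, 0),
    allowed_weather=frozenset({"clear", "overcast"}),
    stops=frozenset({"gate_a", "gate_b"}),
)
```

A single failed condition, such as snow or a request outside operating hours, is enough to put the trip outside the domain.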

Asked if the AI-driven car will ever attain “the common sense understanding” (knowing that it is actually driving and understanding its context), Bart Selman, a computer science professor at Cornell University who specializes in AI, said at the conference’s closing panel: “It will be at least 10 years away…, it could be 20 to 30 years away.”

Meanwhile, among those eager to build ADAS and highly automated cars, the name of the game is how best to make vehicles see.

The very foundation for every highly automated vehicle is “perception” — knowing where objects are, noted Phil Koopman, CTO of Edge Case Research and professor at Carnegie Mellon University. Where AVs are weak, compared to human drivers, is “prediction” — understanding the context and predicting where the object it perceived might go next, he explained.

Moving smarts to the edge

Adding more smarts to the edge was a new trend emerging at the conference. Many vendors are adding more intelligence at the sensory node, by fusing different sensory data (RGB camera + NIR; RGB + SWIR; RGB + lidar; RGB + radar) right on the edge.

However, opinions among industry players appear split on how to accomplish that. There are those who promote sensor fusion on the edge, while others, such as Waymo, prefer a central fusion of raw sensory data on a central processing unit.

With the Euro NCAP mandate for the Driver Monitoring System (DMS) as a primary safety standard by 2020, a host of new monitoring systems also showed up at AutoSens. These include systems that monitor not only drivers, but passengers and other objects inside a vehicle.

A case in point was the unveiling of On Semiconductor’s new RGB-IR image sensor combined with Ambarella’s advanced RGB-IR video processing SoC and Eyeris’ in-vehicle scene understanding AI software.

NIR vs SWIR

The need to see in the dark, whether inside or outside a vehicle, points to the use of infrared (IR).

While On Semiconductor’s RGB-IR image sensor uses NIR (near-infrared) technology, Trieye, which also came to the show, went a step further by showing off a SWIR (short-wave infrared) camera.

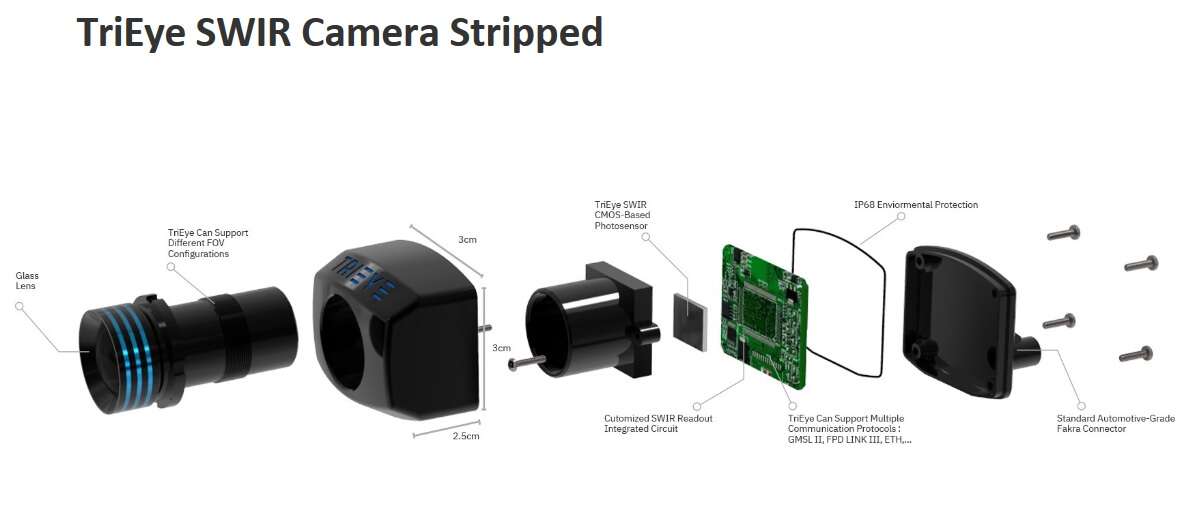

What is short-wave infrared (SWIR)? (Source: Trieye)

SWIR’s benefits include its ability to see objects under any weather/lighting conditions. More important, SWIR can also identify a road hazard — such as black ice — in advance, since SWIR can detect a unique spectral response defined by the chemical and physical characteristics of each material.

The use of SWIR cameras, however, has been limited to military, science and aerospace applications, due to the extremely high cost of the indium gallium arsenide (InGaAs) used to build them. Trieye claims it has found a way to design SWIR by using CMOS process technology. “That’s the breakthrough we made. Just like semiconductors, we are using CMOS for the high-volume manufacturing of SWIR cameras from Day 1,” said Avi Bakal, CEO and co-founder of Trieye. Compared to an InGaAs sensor that costs more than $8,000, Bakal said that a Trieye camera will be offered “at tens of dollars.”

SWIR Camera Stripped (Source: Trieye)

Lack of annotated data

One of the biggest challenges for AI is the shortage of training data. More specifically, “annotated training data,” said Magney. “The inference model is only as good as the data and the way in which the data is collected. Of course, the training data needs to be labeled with metadata and this is very time consuming.”

AutoSens featured lively discussion about the GAN (generative adversarial networks) method. According to Magney, in GAN, two neural networks compete to create new data. Given a training set, this technique reportedly learns to generate new data with the same statistics as the training set.
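The competition between the two networks is usually written as a two-player minimax game. The formulation below is the standard one from the GAN literature, not something presented at AutoSens: a generator G maps random noise z to synthetic samples, and a discriminator D outputs the probability that a sample came from the real training data.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

D is trained to tell real samples from generated ones, while G is trained to fool D. At the ideal equilibrium the distribution of generated samples matches the training distribution, which is the "same statistics" property described above.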

Drive.ai, for example, is using deep learning to enhance automation for annotating data, to accelerate the tedious process of data labeling.

In a lecture at AutoSens, Koopman also touched on the arduous challenges in annotating data accurately. He suspects that much data remains unlabeled because only a handful of big companies can afford to do it right.

Indeed, AI algorithm startups attending the show acknowledged the pain that comes with paying third parties for annotating data.

GAN is one way. But Edge Case Research proposes another way to speed the development of safer perception software without labeling all that data. The company recently announced a tool called Hologram, which offers an AI perception stress-test and risk-analysis system. According to Koopman, by running petabytes of data twice rather than labeling it, Hologram can provide a heads-up on where things look “fishy,” and on areas where “you’d better go back and look again” by either collecting more data or doing more training.
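The article does not say how Hologram works internally beyond running the data twice. One generic way to find suspicious frames without labels is to run a detector on each frame and again on a slightly altered copy, then flag frames where the two outputs disagree. The Python sketch below illustrates only that generic idea. The toy detector, the brightness offset and the agreement threshold are all assumptions made for the example, and none of it represents Edge Case Research's implementation.

```python
def toy_detector(frame, threshold=0.5):
    """Stand-in for a real perception model: indices of 'bright' pixels."""
    return {i for i, value in enumerate(frame) if value > threshold}


def darken(frame, offset=0.05):
    """Hypothetical perturbation: lower every pixel by a small fixed offset."""
    return [max(0.0, value - offset) for value in frame]


def flag_unstable_frames(frames, detector, perturb=darken, min_agreement=0.8):
    """Run the detector twice per frame (original and perturbed) and return
    the indices of frames whose two detection sets overlap too little."""
    flagged = []
    for index, frame in enumerate(frames):
        original = detector(frame)
        altered = detector(perturb(frame))
        union = original | altered
        # Jaccard overlap; two empty detection sets count as full agreement.
        agreement = len(original & altered) / len(union) if union else 1.0
        if agreement < min_agreement:
            flagged.append(index)
    return flagged


frames = [
    [0.9, 0.1, 0.8, 0.2],      # values far from the 0.5 decision threshold
    [0.51, 0.49, 0.52, 0.48],  # borderline values: a small change flips them
]
suspicious = flag_unstable_frames(frames, toy_detector)
```

No ground-truth labels are needed: the second frame is flagged purely because the detector changes its answer under a small input change, which marks it as a candidate for more data collection or training.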

Another issue that surfaced at the conference was what happens to an annotated data set if a car OEM replaces the camera and sensor used for data training.

David Tokic, vice president of marketing and strategic partnerships at Algolux, told EE Times that automotive engineers working on ADAS and AVs are concerned about two things: 1) Robust perception under all conditions and 2) Accurate and scalable vision models.

Camera systems deployed in ADAS or AVs today vary widely. Their parameters differ depending on their lenses (different lenses provide different fields of view), sensors and image signal processing. A technology company picks one camera system, collects a large data set, annotates it and trains on it in order to build an accurate neural network model tuned to that system.

But what happens when an OEM replaces the camera originally used for training data? This change could affect perception accuracy because the neural network model — tuned to the original camera — is now coping with a new set of raw data.

Does this require an OEM to train its data set all over again?

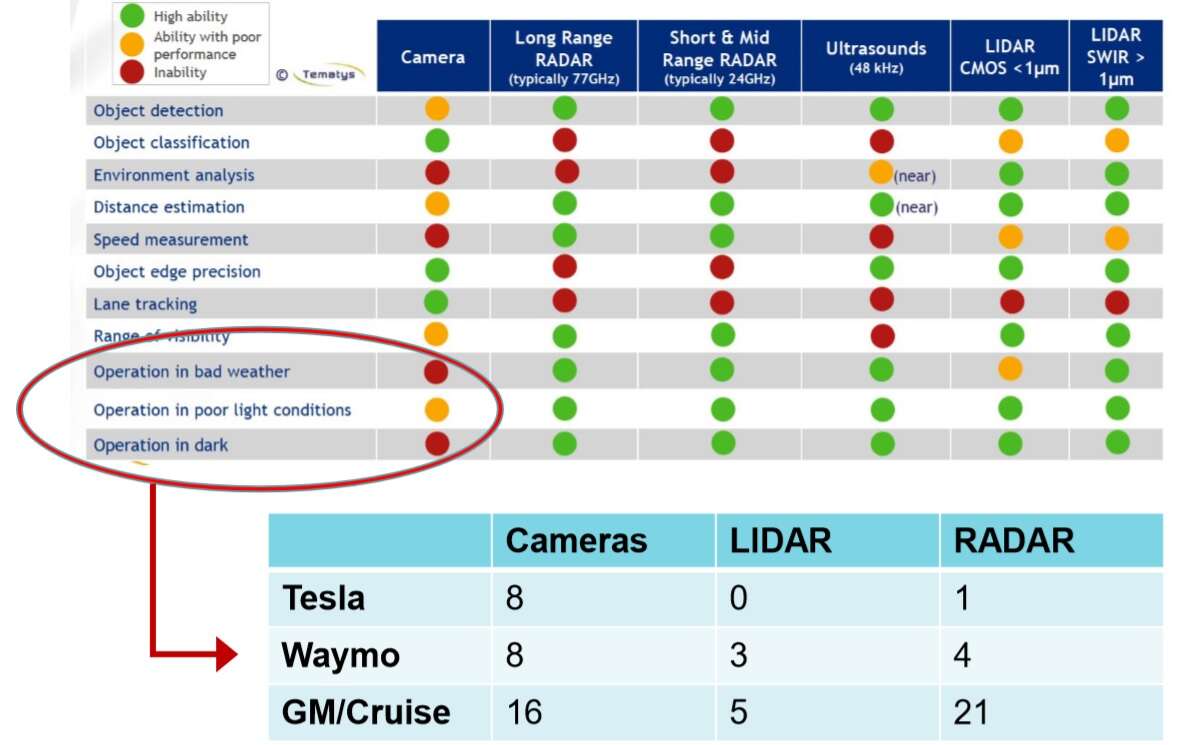

Tesla, Waymo, GM/Cruise use a host of cameras in their AVs (Source: Algolux)

Asked about the swap-ability of image sensors, VSI Labs’ Magney said, “I don’t think this is an option unless the specs are the same.” He noted, “For example at VSI, we trained our own Neural Network for the FLIR thermal camera and those training images were collected with the same specs as the camera that we deployed on. We did swap sensors later, but the specs were the same.”

Algolux, however, claims a new methodology to translate such previously created data sets “in a matter of days.” Tokic said the company’s Atlas Camera Optimization Suite achieves this by knowing “priors” — characteristics of a camera and a sensor — and applying them to detection layers. “Our mission here is to democratize a choice of cameras” for OEMs, said Tokic.

AI hardware

A host of new AI processor startups were born over the last few years, creating an AI momentum that has prompted some to declare the arrival of a hardware renaissance. Many AI chip startups have described ADAS and AVs as their target markets.

Targeting this newly emerging AI accelerator market, Ceva, for one, unveiled at the AutoSens conference the company’s new AI core and “Invite API.”

Curiously, though, a new generation of feature-rich vehicles hasn’t exactly launched the deployment of fresh AI chips yet — beyond those designed by Nvidia and Intel/Mobileye, and “full self-driving (FSD) computer” chips developed by Tesla for its internal use.

On Semiconductor’s RGB + IR camera announcement at AutoSens, on the other hand, revealed that the On Semi/Eyeris team has picked Ambarella’s SoC as its AI processor for in-vehicle monitoring tasks.

Acknowledging that Ambarella is not generally known as an AI accelerator outfit (it is, rather, a traditional video compression and computer vision chip company), Modar Alaoui, CEO of Eyeris, said, “We couldn’t find any AI chips that can support 10 neural networks, consume less than 5 watts and capture 30 frames per second video by using up to six cameras, all looking inside a vehicle” to run Eyeris’ AI in-vehicle monitoring algorithms. But Ambarella’s CV2AQ SoC fit the bill, he said, beating all the other much-hyped accelerators.
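A quick back-of-the-envelope calculation shows why that combination is demanding. The Python sketch below uses only the figures Alaoui quoted and adds one worst-case assumption that is not from the article: every network runs on every frame from every camera. It also ignores whatever power the SoC spends on tasks other than inference, so the result is a rough ceiling rather than a measured number.

```python
CAMERAS = 6                # "up to six cameras"
FRAMES_PER_SECOND = 30     # per camera
NETWORKS = 10              # neural networks to support
POWER_BUDGET_WATTS = 5.0   # "less than 5 watts", taken as the upper bound

# Total video frames arriving per second across all cameras.
frames_per_second_total = CAMERAS * FRAMES_PER_SECOND

# Assumption (not from the article): every network runs on every frame.
inferences_per_second = frames_per_second_total * NETWORKS

# Energy available per inference, in millijoules, if the whole power
# budget went to inference.
energy_per_inference_mj = POWER_BUDGET_WATTS / inferences_per_second * 1000

print(frames_per_second_total, inferences_per_second,
      round(energy_per_inference_mj, 2))
```

Under those assumptions the chip handles 180 frames and up to 1,800 network inferences per second, leaving under 3 millijoules per inference.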

Alaoui is hopeful, however, that his company’s AI software will be additionally ported to three other hardware platforms by the Consumer Electronics Show in Las Vegas next January.

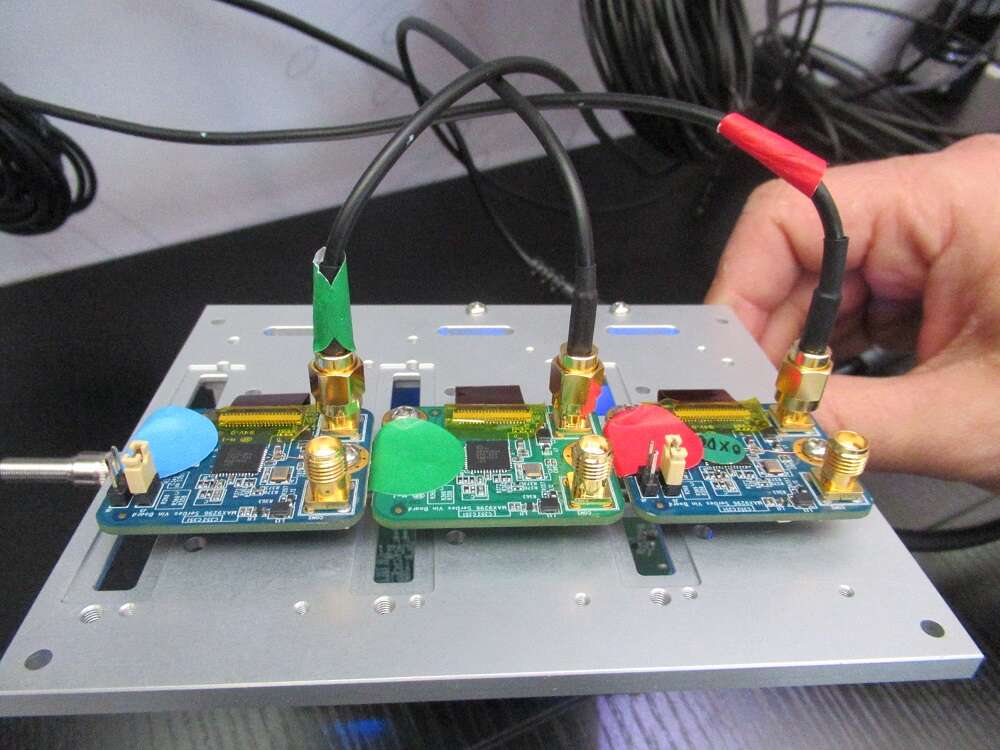

On Semi, Ambarella and Eyeris demonstrate a new in-cabin monitoring system using three RGB-IR cameras. (Photo: EE Times)

Meanwhile, On Semi stressed that driver and occupant monitoring applications require “the ability to capture images in variable lighting from direct sunlight to pitch black conditions.” It claimed that with its good NIR response, “the RGB-IR CMOS image sensor technology provides full HD 1080p output using a 3.0 µm backside illuminated (BSI) and three-exposure HDR.” Sensitive to both RGB and IR light, the sensors can capture color images in daylight and monochrome IR images with NIR illumination.

Going beyond driver monitoring systems (DMS)

Alaoui boasted that Eyeris’ AI software can perform complex body and facial analytics, passenger activity monitoring and object detection. Beyond driver monitoring, “We look at everything inside a car, including surfaces [of seats] and steering wheels,” he added, stressing that the startup has gone above and beyond what Seeing Machines already accomplished.

Laurent Emmerich, director, European customer solutions at Seeing Machines, however, begged to differ. “Going beyond monitoring a driver and covering more inside a vehicle is a natural evolution,” he said. “We, too, are on track.”

Compared to startups, Seeing Machines’ edge lies in “our solid foundation in computer vision with AI expertise built over the last 20 years,” he added. Currently, the company’s driver monitoring system is adopted by “six carmakers and designed into nine programs.”

Further, Seeing Machines noted that it has also developed its own hardware, the Fovio driver monitoring chip. Asked if the chip can also serve future in-vehicle monitoring systems, Emmerich explained that the IP of its chip will be applied to a configurable hardware platform.

Redundancy

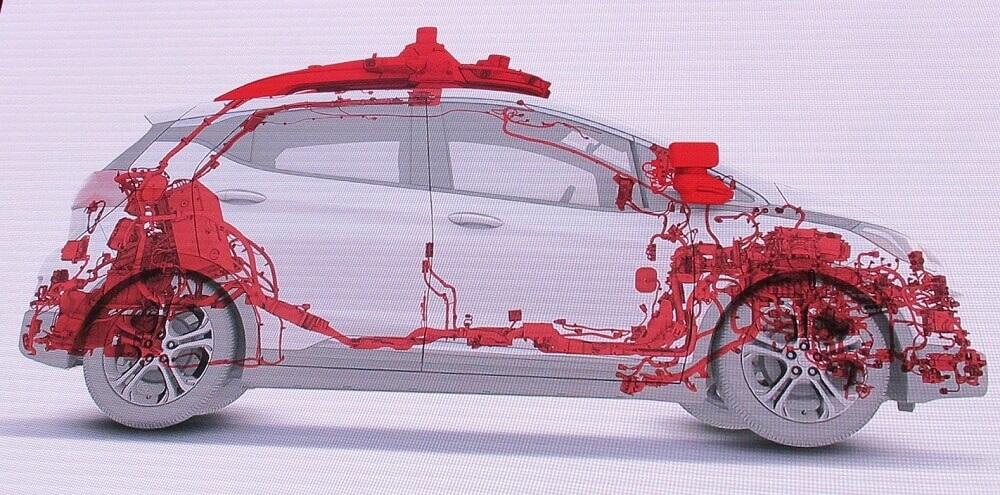

Combining additional sensors of different modalities and installing them in a vehicle is necessary not only for improving perception but also adding much needed redundancy for safety.

Outsight, a startup co-founded by Cedric Hutchings, a former Withings CEO, was at AutoSens pitching a new highly integrated box consisting of several sensors. He explained that Outsight’s sensor fusion box was designed to “offer perception with comprehension, and localization with understanding of the full environment, including snow, ice and oil on the road.” He added, “We can even classify materials on the road by using active hyperspectral sensing.”

When asked whose sensors go inside Outsight’s box, he declined to comment. “We are not announcing our key partners at this time, as we are still tuning the right specs and right applications.”

EE Times’ subsequent discussion with Trieye revealed that Outsight will be using Trieye’s SWIR camera. Outsight is promoting its sensor fusion box, scheduled for sampling in the first quarter of 2020, to tier ones and OEMs as an added standalone system that offers “uncorrelated data” for safety and “true redundancy,” Hutchings explained.

The box uses “no machine learning,” offering instead deterministic results to make it “certifiable.”

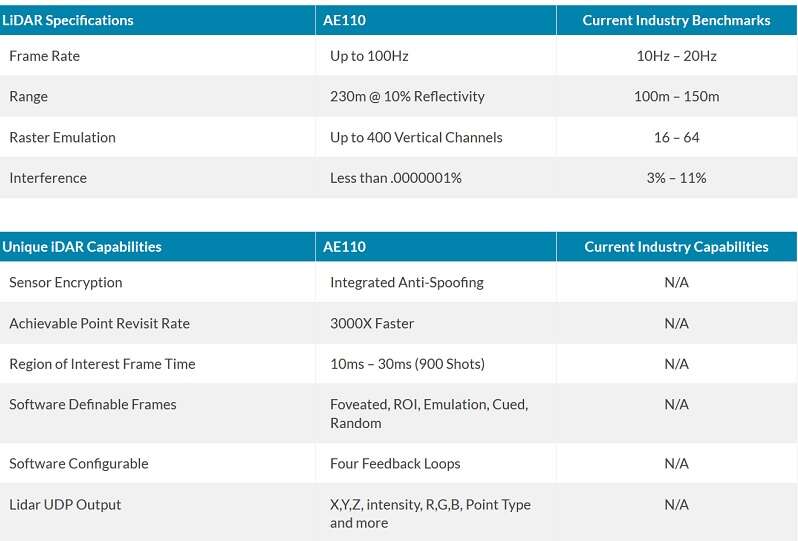

AEye also pitched its iDAR, a solid-state MEMS lidar fused with an HD camera, for the ADAS/AV market. By combining two sensors and embedding AI, the system, operating in real time, can “address certain corner cases,” said Aravind Ratnam, AEye’s vice president of product management.

The iDAR system is designed to combine 2D camera “pixels” (RGB) and 3D lidar’s data “voxels” (XYZ) to provide a new real-time sensor data type that delivers more accurate, longer range and more intelligent information faster to an AV’s path-planning system, the company explained.
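AEye did not describe its fusion algorithm in detail. A common textbook way to pair 3D points with 2D pixels is to project each lidar point into the image with a pinhole camera model and attach the color found there. The Python sketch below shows that generic approach only, not AEye's method. It assumes the points are already expressed in the camera frame (x right, y down, z forward), and the focal lengths and principal point are invented values.

```python
def project_point(point_xyz, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to integer pixel coordinates
    using a pinhole model. Returns None for points behind the camera."""
    x, y, z = point_xyz
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return int(round(u)), int(round(v))


def fuse(points_xyz, image, fx, fy, cx, cy):
    """Attach the RGB value under each projected lidar point. Returns a list
    of (x, y, z, r, g, b) tuples for points that land inside the image."""
    height, width = len(image), len(image[0])
    fused = []
    for point in points_xyz:
        pixel = project_point(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if 0 <= u < width and 0 <= v < height:
            r, g, b = image[v][u]
            fused.append((*point, r, g, b))
    return fused


# Toy 4x4 image: all black except one red pixel at column 2, row 1.
image = [[(0, 0, 0) for _ in range(4)] for _ in range(4)]
image[1][2] = (255, 0, 0)

points = [
    (0.0, -1.0, 10.0),  # in front of the camera, lands on the red pixel
    (0.0, 0.0, -5.0),   # behind the camera, dropped
    (50.0, 0.0, 10.0),  # projects outside the image, dropped
]
fused = fuse(points, image, fx=10.0, fy=10.0, cx=2.0, cy=2.0)
```

Each surviving entry carries both position (XYZ) and appearance (RGB), the kind of combined record that a downstream path planner could consume as a single data type.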

AEye’s AE110 Product Features Compared with Industry Benchmarks and Capabilities (Source: AEye)

In his presentation, Ratnam said AEye studied a variety of use cases. “We looked at 300 scenarios, picked 56 applicable cases and narrowed them down to 20 scenarios” where the fusion of camera, lidar and AI makes sense.

Ratnam showed a scene in which a small child, out of nowhere, chases a ball into the street – right in front of a vehicle. The camera-lidar fusion at the edge works much faster, reducing the vehicle’s reaction time. He noted, “Our iDAR platform can provide very fast calculated velocity.”

Asked about the advantage of sensor fusion at the edge, a Waymo engineer at the conference told EE Times that he is uncertain if it would make a substantial difference. He asked, “Is it a difference of microseconds? I am not sure.”

AEye is confident of the added value its iDAR can offer to tier ones. By closely working with Hella and LG as key partners, AEye’s Ratnam stressed, “We have been able to drive down the cost of our iDAR. We are now offering a 3D lidar at ADAS prices.”

AEye expects to finish its automotive-grade combined RGB and lidar system, embedded with AI, in the next three to six months. The price will be “less than $1,000,” said Ratnam.

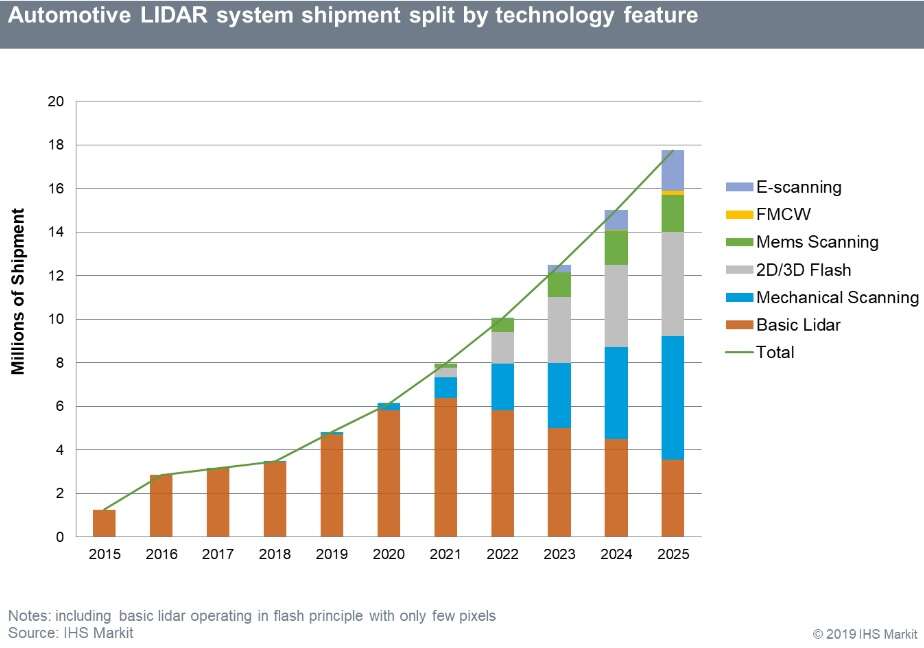

Automotive Lidar System Shipment (Source: IHS Markit)

Dexin Chen, senior analyst, Automotive Semiconductors & Sensors at IHS Markit, told the conference audience that lidar suppliers have been “over-marketing, promising too much.” Going forward, he noted, physics (an advantage brought by lidars) “can talk but commercialization will decide.” Much needed, he said, are “standardization, alliance and partnerships, supply chain management and AI partners.”
