Vehicular Sensor Mix: What’s Really Needed?

Article By: Junko Yoshida, EE Times

Lidar, radar, iDAR, cameras... What's the way forward for autonomous vehicles?

PARIS — Today, there’s no shortage of questions for executives and engineers at tech and auto companies grappling with the technology and business roadmap of automated vehicles (AVs). Three big unanswered questions, however, stand out.

Egil Juliussen, director of research for Infotainment and advanced driver-assistance systems (ADAS) for automotive at IHS Markit, laid out the following as the “unanswered questions” that will dog the auto industry in 2019:

- Do we really need lidars?

- Are tech/auto companies really ready to collaborate in pursuit of “network effect” for advancements of driving software?

- Will the industry solve the L2 to L3 handover problems?

Industry observers certainly see a new round of AV partnerships percolating among tech companies, tier ones and car OEMs. And several companies are trying out new technologies, such as ADAM, on the L2 to L3 handover quandary.

Speaking of that unimaginable dilemma for human drivers when machines suddenly give control back to them, “expect the resurgence of interest in driver monitoring systems among tier ones and OEMs at the 2019 Consumer Electronics Show in Las Vegas next month,” Colin Barnden, Semicast Research lead analyst, told us.

But, will ADAS cars and robocars really need lidars? Juliussen told us, “We are beginning to hear this a lot.” The issue follows the emergence of digital imaging radars “that can do a lot more than they used to,” he explained.

AEye to fuse camera/lidar data

Against this backdrop, a startup called AEye, based in Pleasanton, Calif., announced last week its first commercial product, “iDAR,” a solid-state lidar fused with an HD camera, for the ADAS/AV market.

The idea of autonomous vehicles without lidar has been floating around the tech community for almost a year. The proposition is tantalizing because many car OEMs regard lidars as too costly, and they agree that the lidar technology landscape is far from settled.

Although nobody is saying that a “lidar-free future” is imminent, many imaging radar technology developers discuss it as one of their potential goals. Lars Reger, NXP Semiconductors’ CTO, for example, told us in November that the company hopes to prove it’s possible.

AEye, however, moves into the is-lidar-necessary debate from another angle. The startup believes that car OEMs are reluctant to use current-generation lidars because their solutions today depend on an array of independent sensors that collectively produce a tremendous amount of data. “This requires lengthy processing time and massive computing power to collect and assemble data sets by aligning, analyzing, correcting, down sampling, and translating them into actionable information that can be used to safely guide the vehicle,” explained AEye.

But what if AEye used artificial intelligence to selectively collect only the data that matters to an AV’s path planning, instead of assigning every pixel the same priority? This starting point inspired AEye to develop iDAR, Stephen Lambright, AEye’s vice president of marketing, explained to EE Times.

Indeed, AEye’s iDAR is “deeply rooted in the technologies originally developed for the defense industry,” according to Lambright. The startup’s CEO, Luis Dussan, previously worked on designing surveillance, reconnaissance, and defense systems for fighter jets. He formed AEye “to deliver military-grade performance in autonomous cars.”

Driving AEye’s iDAR development were “three principles that shaped the perception systems on military aircraft Dussan learned,” according to Lambright: 1) never miss anything; 2) understand that objects are not created equal and require different attention; and 3) do everything in real time.

In short, the goal of iDAR was to develop a sensor fusion system with “no need to waste computing cycles,” said Aravind Ratnam, AEye’s vice president of products. Building blocks of iDAR include a 1550nm solid-state MEMS lidar, a low-light HD camera and embedded AI. The system is designed to “combine” 2D camera “pixels” (RGB) and 3D lidar data “voxels” (XYZ) to provide “a new real-time sensor data type” that delivers more accurate, longer-range and more intelligent information faster to an AV’s path-planning system, according to Ratnam.

Notably, what AEye’s iDAR offers is not post-scan fusion of a separate camera and lidar system. By developing an intelligent artificial perception system that physically fuses a solid-state lidar with a hi-res camera, AEye explains that its iDAR “creates a new data type called dynamic vixels.” By capturing x, y, z, r, g, b data, AEye says that dynamic vixels “biomimic” the data structure of the human visual cortex.
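To make the x, y, z, r, g, b record concrete, here is a minimal Python sketch of what a fused sample could look like. It is an illustration only: the `Vixel` class, the `project` and `fuse` helpers, and the pinhole-camera parameters are hypothetical names chosen for this example, and the software projection shown here is a stand-in for the shape of the data, not AEye's undisclosed hardware-level fusion.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]   # lidar point in the camera frame (x right, y down, z forward)
Pixel = Tuple[int, int, int]         # camera color as (r, g, b)


@dataclass(frozen=True)
class Vixel:
    """Hypothetical fused sample: one lidar point plus the color of the pixel it lands on."""
    x: float
    y: float
    z: float
    r: int
    g: int
    b: int


def project(point: Point, focal_px: float, cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection of a 3D point to integer (col, row); None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    col = int(round(focal_px * x / z + cx))
    row = int(round(focal_px * y / z + cy))
    return col, row


def fuse(points: Sequence[Point], image: Sequence[Sequence[Pixel]],
         focal_px: float, cx: float, cy: float) -> List[Vixel]:
    """Attach a camera color to every lidar point that projects inside the image."""
    height, width = len(image), len(image[0])
    fused = []
    for p in points:
        uv = project(p, focal_px, cx, cy)
        if uv is None:
            continue  # point is behind the camera
        col, row = uv
        if 0 <= col < width and 0 <= row < height:
            r, g, b = image[row][col]
            fused.append(Vixel(p[0], p[1], p[2], r, g, b))
    return fused
```

Each resulting record carries both position and color, which is the property the article attributes to dynamic vixels; how AEye produces such records in hardware is not described in the source.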

‘Combiner’ SoC

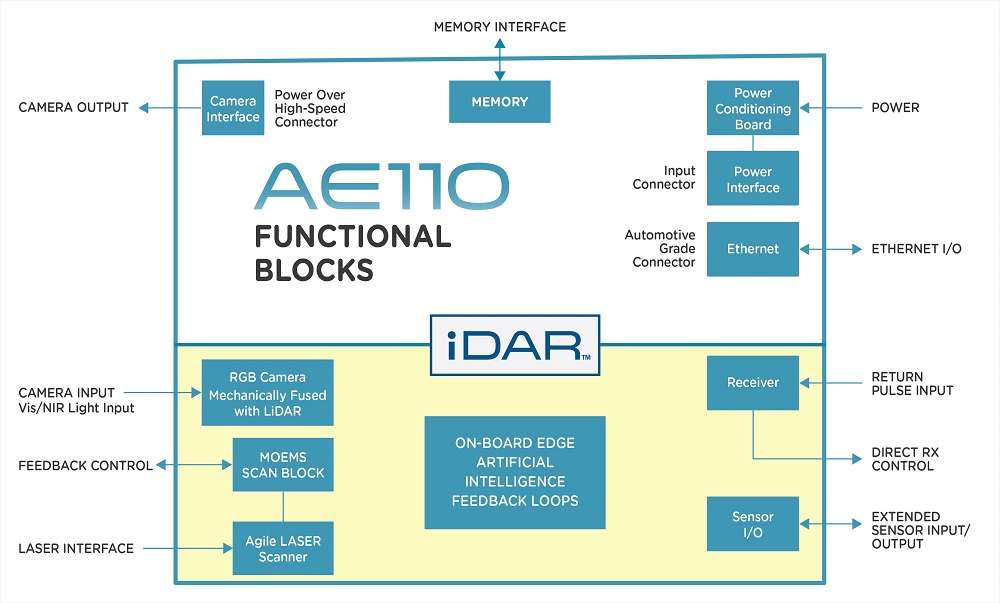

The new iDAR system called AE110, announced last week, is a fourth-generation prototype. Included in the system, according to Ratnam, is a “combiner” SoC based on Xilinx’s Zynq SoC. Zynq integrates an ARM-based processor with an FPGA. It’s designed to enable key analytics and hardware acceleration while integrating CPU, DSP, ASSP, and mixed signal functionality on one device. In 2019, AEye is planning to design its own ASIC for the combiner SoC, he added.

AEye’s iDAR AE110 block diagram (Source: AEye)

‘Vision + radar’ or ‘vision + lidar’?

AEye is promoting its combined vision/lidar sensor system while a few developers of high-precision mmWave radar chips advocate vision/radar solutions.

Mike Demler, senior analyst at the Linley Group, called AEye’s camera-lidar fusion “an interesting approach.” Acknowledging that AEye’s implementation “may have some unique features,” Demler cautioned that AEye “isn’t the only company doing that.” He noted that Continental also sells a camera-lidar combo unit. Presumably, though, Continental is combining data from two separate sensors after pre-processing.

As Demler sees it, the advantage of AEye’s approach would be “in the sensor-fusion software.” In essence, “Treating the camera/lidar image sensors as an integrated unit could speed identification of regions of interest, as they claim,” he noted. “But beyond that, all the strengths/weaknesses of the two sensors still applies.”

Demler noted that AEye is using a MEMS lidar, but doesn’t appear to disclose its spatial resolution. That could be a weakness compared to a scanning lidar like Velodyne’s, he speculated. “The camera sensor has highest resolution, but it can’t handle extremely bright or dark scenes, and it’s still limited by dirt and precipitation that can block the lens. So you can’t rely on that for your spatial resolution. Likewise, the lidar doesn’t function as well in precipitation as radar, so you can’t rely on that for object detection, and most lidars don’t measure velocity.”

Asked about AEye, Phil Magney, VSI Labs founder, disclosed that his firm was hired to validate lidar performance for distance and scan rate.

Magney stressed, “The iDAR sensor is unique in that it couples a camera with a lidar and fuses the data before the combined values are ingested by the central computer.” In his opinion, “this is really edge fusion as the device is fusing the raw data with the camera data before any classification occurs.” Magney added, “We also know that the device has the capacity to drill down to a subject of interest meaning that [it] would not need to process an entire point cloud scene.”

Magney acknowledged that AEye’s iDAR device has “the potential to better classify because you have the fused camera data to work with.” He noted, “iDAR is developing classification algorithms that apply to the fused data set.”

AEye’s so-called dynamic vixels create content that is, in theory, “much richer than either cameras or lidars can produce by themselves,” said Magney. But he cautioned that “basically every pixel has a point and every point has a pixel but keep in mind the resolution of the camera is much higher than the lidar so your ratio of pixels to points is not one to one.”
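Magney's point about the pixel-to-point ratio can be made concrete with simple arithmetic. The sketch below is illustrative only: the function name is hypothetical, and the resolution and point count in the usage note are assumed figures, not AEye specifications.

```python
def pixels_per_point(image_width: int, image_height: int, lidar_points_per_frame: int) -> float:
    """Average number of camera pixels available for each lidar point in one frame."""
    if lidar_points_per_frame <= 0:
        raise ValueError("lidar_points_per_frame must be positive")
    return (image_width * image_height) / lidar_points_per_frame
```

For instance, an assumed 1920 x 1080 camera frame paired with an assumed 20,000 lidar points per frame gives `pixels_per_point(1920, 1080, 20000)`, or roughly 104 pixels for every point, which is why the mapping between the two cannot be one to one.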

Magney acknowledged, “When comparing iDAR to radar it is possible to eliminate the need for radar, because lidar and radar are both ranging instruments.”

He noted, “If you could have enough confidence in the lidar’s ability to give you proper depth perception, and can track the velocity of targets, then this is possible. It should be mentioned, iDAR has twice the scan rate (100 Hz) compared to most commercial lidar products, another advantage of their device.”
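The practical meaning of a 100 Hz scan rate is easier to see as a refresh interval and a distance traveled between scans. The following Python sketch works through that arithmetic; the function names and the 30 m/s vehicle speed used in the usage note are assumptions for illustration, not figures from AEye or VSI.

```python
def scan_period_s(scan_rate_hz: float) -> float:
    """Time between consecutive scans, in seconds."""
    if scan_rate_hz <= 0:
        raise ValueError("scan_rate_hz must be positive")
    return 1.0 / scan_rate_hz


def travel_between_scans_m(speed_mps: float, scan_rate_hz: float) -> float:
    """Distance a vehicle covers between two consecutive scans, in meters."""
    return speed_mps * scan_period_s(scan_rate_hz)
```

At 100 Hz the scene is refreshed every 10 milliseconds, so a vehicle moving at an assumed 30 m/s (about 108 km/h) covers about 0.3 meters between scans. Halving the scan rate doubles that distance.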

On the other hand, because more ADAS-featured cars are poised to roll out well before fully autonomous vehicles, radar appears to hold the advantage over lidars (or iDAR) in the ADAS market.

“Radar is going to work better in inclement weather and is thus best suited for ADAS, where you need safety systems running even when the conditions do not suit autonomous driving,” noted Magney. “But radar by itself is still limited in terms of what it can classify. This is determined by the firmware in the radar device. We understand that radar is getting better at classification and the companies are purporting some richer capabilities. There are some radar startups that have some pretty impressive claims.”

VSI validated the test and methodology in a recent performance test of the AEye iDAR sensor. Magney said the firm validated that the lidar signals were able to detect a truck on the road at a distance of 1 kilometer. VSI also confirmed the scan rate of 100 Hz, Magney said.

“We did not validate that this sensor will lead to better performance or safety, but we did validate that it had enough intelligence to identify an object at 1,000 meters,” he added.

Asked about radars vs lidars, Demler summed it up. For high-level autonomous vehicles, no company at this point is claiming lidar isn’t necessary. He said, “Sure, you can build a self-driving car without it, but that doesn’t mean it functions as well under all conditions or is as safe as a camera/lidar/radar system.”

In Demler’s opinion, AEye’s iDAR doesn’t replace radar. And TI’s mmWave imaging radar doesn’t replace lidar, Demler explained. “Most AV developers are using all three, and in fact they are using other sensors as well,” Demler said. “Ultrasonic sensors have their place, as do infrared sensors.”

He said: “Safety and redundancy demand backups, and multiple sensor types are required because no one type works best under all conditions.”

Who will make iDARs?

Last week, AEye also disclosed the second close of its Series B financing, which will take the company’s total funding beyond $60 million.

AEye said that its Series B investors include automotive OEMs, tier ones and tier twos, as well as strategic investors Hella Ventures, Subaru-SBI Innovation Fund, LG Electronics and SK Hynix.

AEye’s Lambright pointed out the significance of Hella Ventures and LG Electronics joining the Series B round. AEye is counting on tier one partners like these to bring up the volume of iDAR production and lower the unit cost. Lambright estimated the initial cost of iDAR in 2021 will be lower than $1,000 per unit.

— Junko Yoshida, Global Co-Editor-In-Chief, AspenCore Media, Chief International Correspondent, EE Times
