Pros and Cons with 7nm Processors

Article By : Rick Merritt

AMD's 7nm presentation raised hopes, but software issues and production costs are still worries

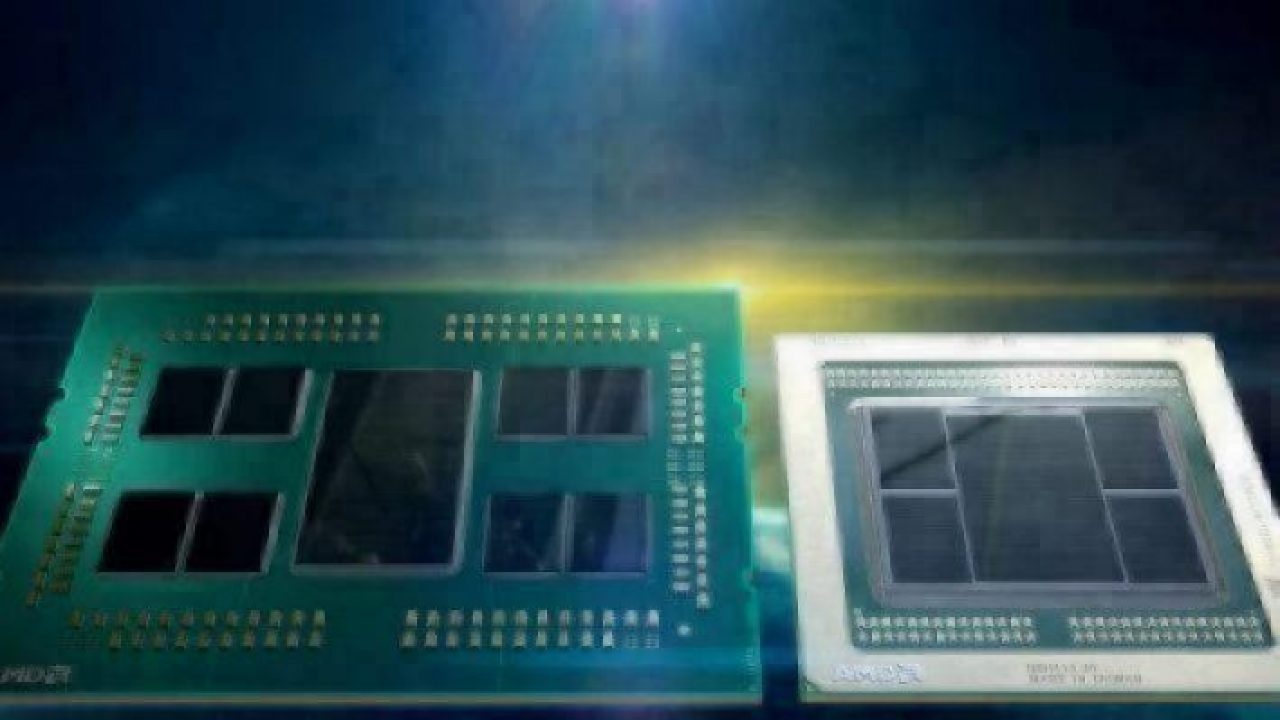

SAN JOSE, Calif. — AMD’s announcement of a 7-nm Epyc x86 CPU and Vega GPU generated a wave of hope that the chips will lower rising costs of high-end processors. The news also provided an example of the diminishing returns of leading-edge process technology and raised concerns about the quality of open-source code for accelerators.

In a Twitter conversation tagging our story on the news, a scientific researcher in Germany lamented that the $10,000+ price tags on high-end Nvidia V100s make them “nothing we can order easily with our funding guidelines.”

A researcher in England called the prices, which can top $15,000 for buyers in Europe, “not sustainable,” pointing to Intel and Nvidia gross margins in excess of 63%.

“I think Nvidia is making great products, but … this problem can only be solved by increased competition in both the CPU and GPU markets,” he said. “Please step forward, AMD, Cavium/Marvell, Fujitsu, Ampere … our science is suffering due to the current situation.”

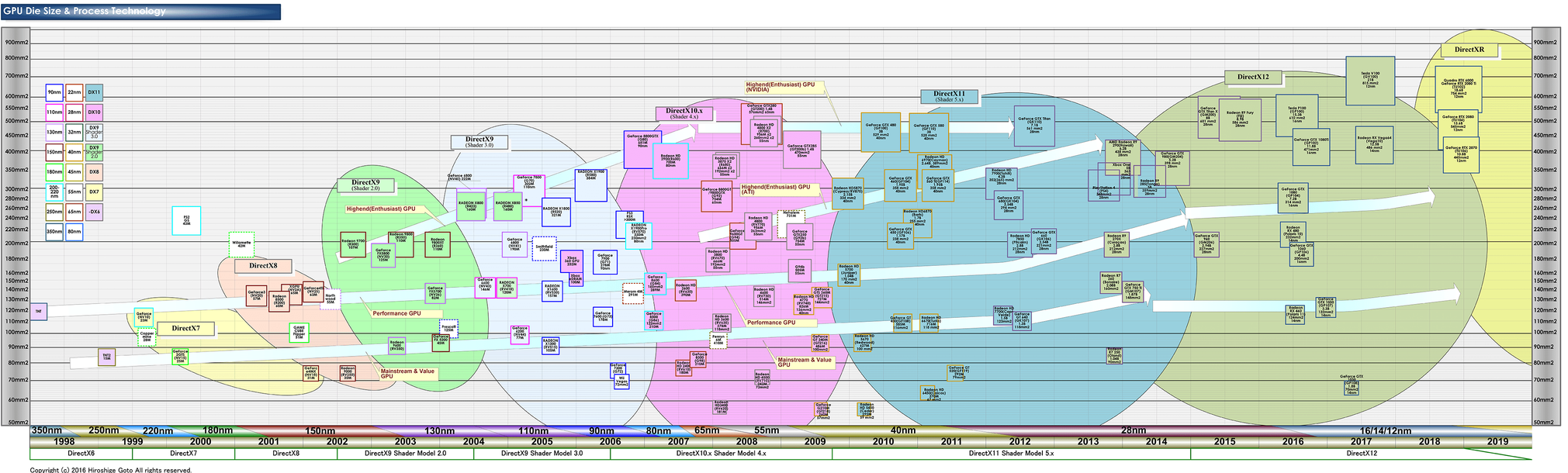

Another person on the discussion thread posted a chart (above) showing the growing die sizes of Nvidia GPUs as the company pursued higher-performance parts.

First-generation Nvidia general-purpose graphics processors cost about $1,500, and “we all worried at how expensive they were compared to consumer GPUs,” said one researcher, bemoaning a 10× rise in costs. (Source: Hiroshige Goto)

AMD has clearly tried to shave costs with its 7-nm Epyc x86 CPU by implementing its memory controller and I/O in a 14-nm die. But how much price competition comes with the new AMD parts remains to be seen. If the 7-nm products are more successful than anticipated, AMD could find itself constrained by the number of wafers that it can get from TSMC, now its sole 7-nm provider.

Just before the parts debuted, Intel announced that it was focusing all of its capacity on high-end devices, claiming that it could not otherwise meet a resurgence in PC demand. However, overall demand is not actually rising; rather, Intel may have been trying to tempt AMD to go after low-end sockets, said analyst Mario Morales of International Data Corp.

With the exception of a modest uptick in enterprise demand, the PC market is not growing. In fact, the market in China for desktops and notebooks — which represents the largest slice of the overall PC market — is declining slightly, said Morales.

Researchers welcome the potential for new AMD parts to spark price competition. But they also complained on Twitter about the state of software for general-purpose graphics processors (GP-GPUs). Most of Nvidia’s code is still proprietary, and the open-source code from both Nvidia and AMD is subpar, they said.

Poor-quality code has been the “Achilles’ heel of the AMD GPU compute software stack from the beginning,” said one researcher.

“I am very happy that the AMD Radeon open-source Linux driver actually includes all compute components (in contrast to Nvidia),” said another researcher. “But then buggy open-source software still inhibits wide adoption.”

“Please make CUDA an open ecosystem where other parties can provide input into future features or implement their own runtime for the CUDA API,” said a researcher in England, referring to Nvidia’s GP-GPU software.

“If everyone implements CUDA, they have to implement the CUDA performance model, and that blocks innovation, which is a bad thing in acceleration,” said another. “We need an ecosystem that is more adaptive to all the innovations from other processor companies.”

“I’ll add the need for documentation [for] Nvidia-specific OpenCL extensions, too … and hire some people to handle bug tracker issues about it,” said yet another.

“The situation won’t improve until customers require good-quality implementations of open parallel programming standards … and it’s our science that suffers,” said a researcher in the U.S.

A handful of representatives from AMD, Intel, and Nvidia jumped into the fray, defending their efforts.

“OpenMP 5.0 is now coming out, which is also super exciting for GPU computing,” said one vendor. “I know AMD’s Greg Rodgers and Nvidia’s Jeff Larkin put a lot of hard work into this along with rest of OpenMP working group to make this happen.”

On the hardware side, AMD’s Epyc and Vega are among the first reality checks on the 7-nm node.

TSMC said in March 2017 that its process would offer up to 35% speed gains or 60% lower power compared to its 16FF+ node. However, AMD is only claiming that its chips will sport 25% speed gains or 50% less power compared to its 14-nm products.

“TSMC may have been measuring a basic device like a ring oscillator — our claims are for a real product,” said Mark Papermaster in an interview the day that the 7-nm chips were revealed.

“Moore’s Law is slowing down, semiconductor nodes are more expensive, and we’re not getting the frequency lift we used to get,” he said in a talk during the launch, calling the 7-nm migration “a rough lift that added masks, more resistance, and parasitics.”

Looking ahead, a 7-nm-plus node using extreme ultraviolet lithography (EUV) will “primarily leverage efficiency with some modest device performance opportunities,” he said in the interview.

For AMD, the use of a mix of 7-nm and 14-nm die on a standard organic package was a move similar to Samsung going to 3D NAND. It gave the company breathing room on costs with an approach that others are likely to adopt.

There’s no doubt that leading-edge nodes are presenting historic challenges.

Intel is now equipping a 7-nm fab with EUV in Chandler, Arizona, but no production is expected until at least late next year. Even Intel’s current 14-nm yields are “above threshold but vary widely by product,” said Jim McGregor, principal of Tirias Research.

— Rick Merritt, Silicon Valley Bureau Chief, EE Times
