AMD Makes 7nm Splash

Article By: Rick Merritt

Set to bring new, shrunk Epyc CPUs and Vega GPUs to market ahead of Intel and Nvidia

SAN FRANCISCO — Advanced Micro Devices launched its first 7-nm CPU and GPU, both aimed at the lucrative data center market. It showed working chips that delivered performance comparable to Intel’s 14-nm Xeon and Nvidia’s 12-nm Volta.

AMD has yet to reveal many details about the new chips and their performance. However, analysts are generally bullish that the company can extend the significant comeback it began when it launched its first Zen-based chips on a 14-nm process in late 2016.

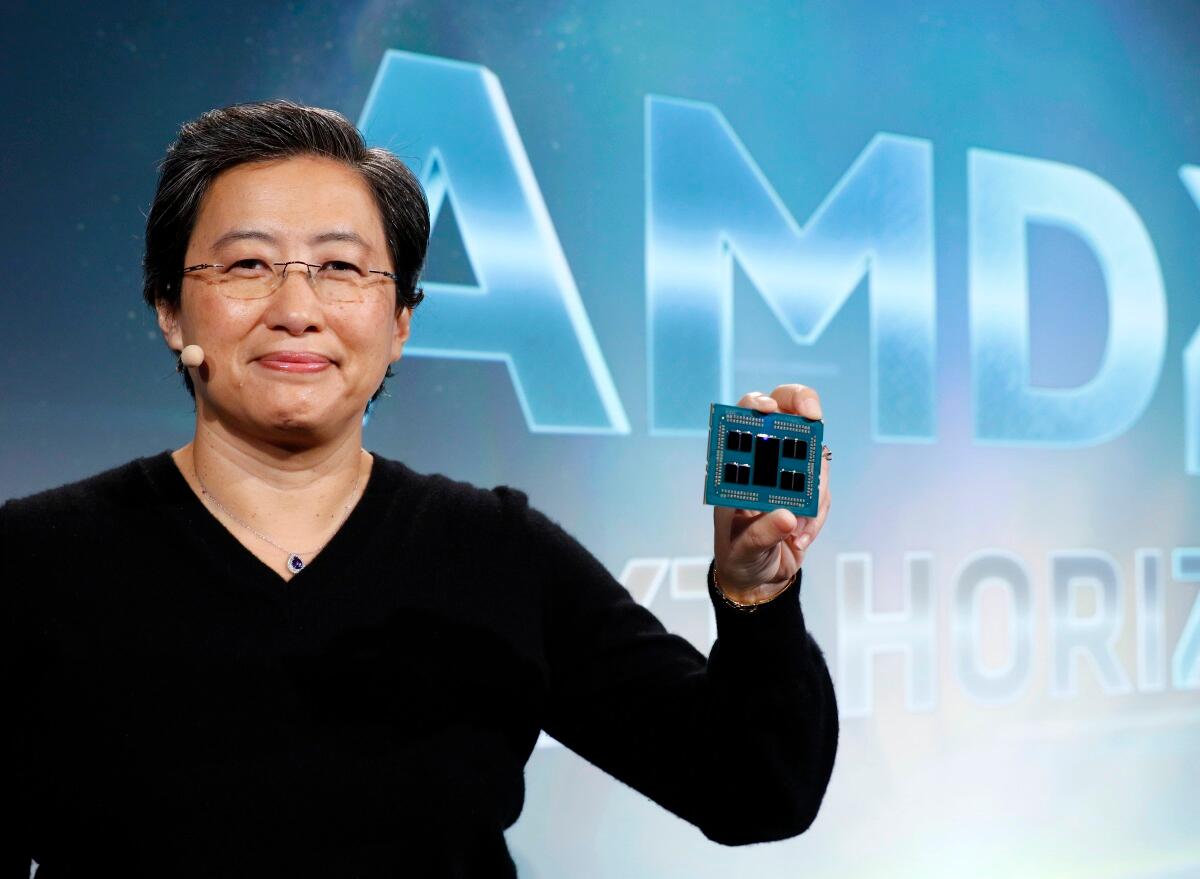

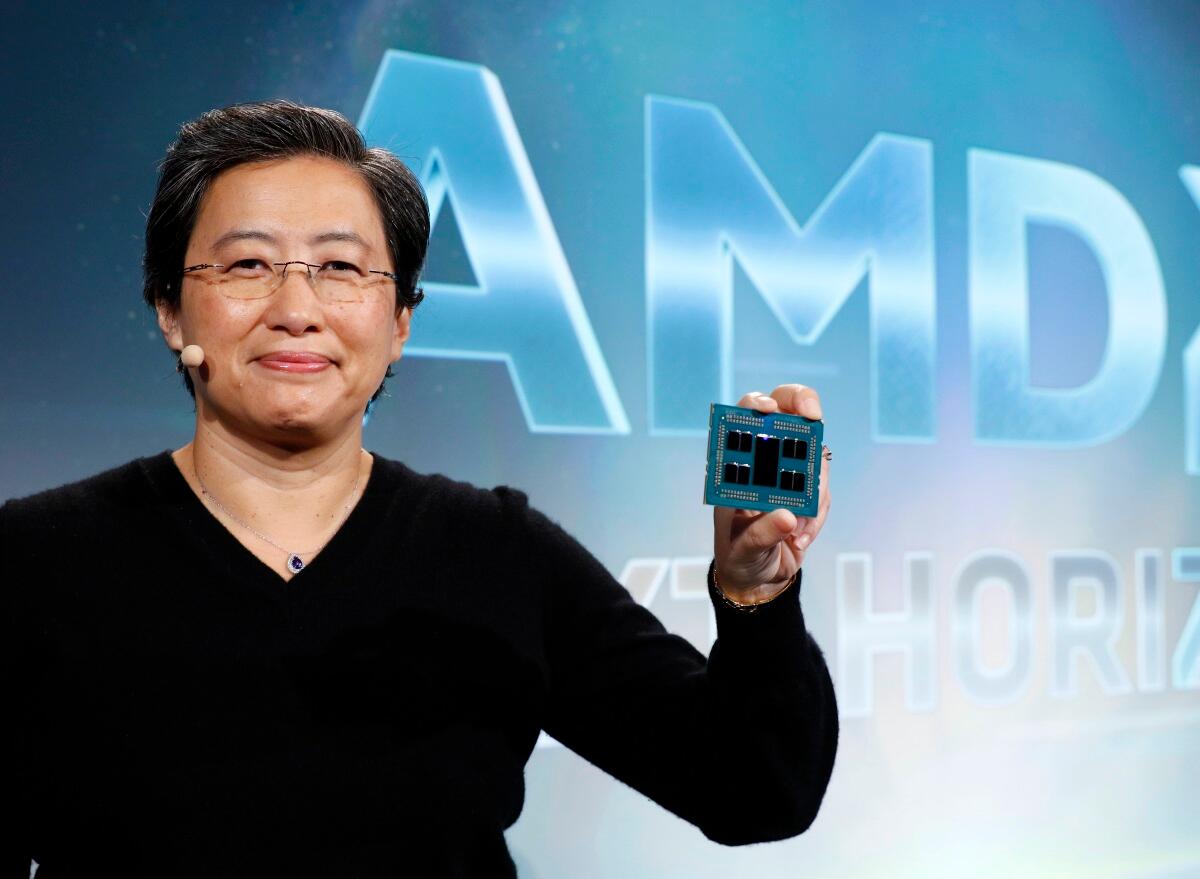

“We are all about high performance … The idea is to be incredibly ambitious and recognize it’s a journey,” said chief executive Lisa Su at a press and analyst event here. “AMD is totally committed to the data center. This is our space and this is where we will lead.”

She demonstrated a single 7-nm Epyc x86 processor narrowly beating a system with two Intel Skylake Xeons in a rendering job. Separately, AMD showed benchmarks that roughly put its 7-nm Vega GPU on par with an Nvidia V100 in inference tasks.

Startup Highwai showed the 7-nm Vega running its AI simulation software for robocar navigation. The AMD chip seemed roughly comparable to the Volta GPUs on which Highwai has been developing its code, said Raul Diaz, chief technologist and co-founder of the company.

“We haven’t had time to do any systematic comparisons yet,” he said, noting that AI training is the application most in need of more performance.

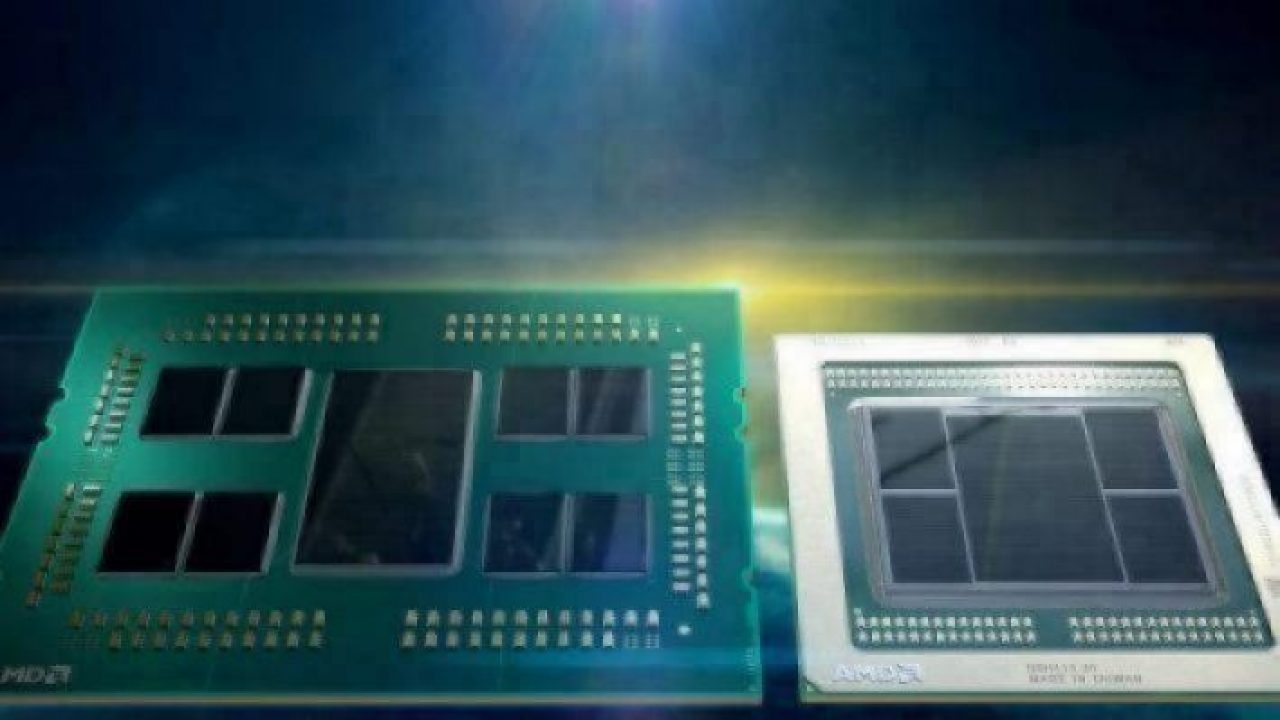

Epyc (left) and Vega both leverage multi-die packaging techniques. (Images: AMD)

The existing 14-nm Epyc, launched in May 2017, boosted AMD’s negligible 0.5% share of x86 servers to 1.5%. With its customer relationships now back on track, the 7-nm version could push AMD up to “high single digits” in x86 server market share by mid-2019, said Mario Morales of International Data Corp. (IDC).

Zen-based x86 chips have helped lift AMD’s share of overall microprocessor unit shipments to 9.23% in the second quarter of this year, up from 7.43% in the second quarter of 2016, said Shane Rao of IDC. In revenue terms, AMD’s processor share grew to 5.3% from 2.64% over the same period, IDC estimated. Intel’s x86 processors continue to dominate across all architectures, with more than 90% of both units and revenue.

AMD continued its aggressive use of creative packaging to deliver a lower-cost Epyc. A single module includes up to eight 7-nm processor dies linked over AMD’s Infinity Fabric to a single 14-nm I/O chip that includes the memory controller. The approach extends that of the 14-nm Epyc, which uses four dies in a single package.

“One big die [for the 14-nm Epyc] would have cost 1.7x more … Analog I/Os don’t scale as well as digital logic, so it’s fine to keep them on 14 nm … Others will take a similar approach,” said Mark Papermaster, AMD’s chief technologist.

Analysts such as Patrick Moorhead of Moor Insights & Strategy agreed.

“I believe this is the future of the entire chip industry as manufacturing large, monolithic dies is getting harder and more expensive,” said Moorhead. “The next steps in the industry will be to adopt 2.5D and 3D packages where these chiplets are stacked on top of each other.”
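Papermaster’s cost claim rests on yield: the bigger a die, the more likely it is to catch a fatal defect. A common first-order model is Poisson yield, Y = exp(-A·D0), where A is die area and D0 is defect density. The Python sketch below applies it to a hypothetical 800-mm² design at an assumed 0.1 defects/cm², built either as one die or as four tested chiplets. The area, defect density, helper names and the omission of packaging, test and die-to-die I/O overhead are all illustrative assumptions; this is not AMD’s cost model.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Probability that a die of the given area has zero defects (Poisson model)."""
    return math.exp(-area_mm2 * defects_per_mm2)


def silicon_cost_per_product(total_area_mm2: float, num_dies: int,
                             defects_per_mm2: float, cost_per_mm2: float = 1.0) -> float:
    """Expected silicon cost of one working product split into num_dies equal dies.

    Assumes every die is tested before packaging, so a defect scraps only the
    die it lands on. Ignores packaging, test and die-to-die I/O overhead.
    """
    die_area = total_area_mm2 / num_dies
    good_die_cost = die_area * cost_per_mm2 / poisson_yield(die_area, defects_per_mm2)
    return num_dies * good_die_cost


# Hypothetical inputs: 800 mm^2 of logic, 0.1 defects/cm^2 = 0.001 defects/mm^2.
TOTAL_AREA_MM2 = 800.0
DEFECTS_PER_MM2 = 0.001

monolithic = silicon_cost_per_product(TOTAL_AREA_MM2, 1, DEFECTS_PER_MM2)
chiplets = silicon_cost_per_product(TOTAL_AREA_MM2, 4, DEFECTS_PER_MM2)
ratio = monolithic / chiplets
print(f"monolithic / four-chiplet silicon cost: {ratio:.2f}x")
```

Under this simple model the ratio works out to exp(A·D0·(1 - 1/n)) for n equal chiplets, so the advantage grows with total area and defect density. A real-world figure such as AMD’s 1.7x would also reflect packaging cost and the extra interface area that chiplets require.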

The 7-nm Epyc, aka Rome, is the first x86 server processor to use PCIe Gen 4. It sports up to 128 lanes of the interconnect compared to 96 lanes of Gen 3 for Intel’s current high-end server chips.
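To put the lane counts in perspective, here is a rough per-direction bandwidth comparison in Python. It uses the PCIe per-lane transfer rates (8 GT/s for Gen 3, 16 GT/s for Gen 4) and the 128b/130b line coding both generations share. It ignores packet-protocol overhead and how a given platform allocates its lanes, so treat the results as upper bounds, not measured throughput; the function names are illustrative.

```python
# PCIe per-lane transfer rates in gigatransfers/s; one transfer moves one bit per lane.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}
# Gen 3 and Gen 4 both use 128b/130b line coding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def lane_gbytes_per_s(gen: int) -> float:
    """Raw bandwidth of one lane in one direction, in GB/s, before protocol overhead."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Aggregate one-direction bandwidth across a number of lanes."""
    return lanes * lane_gbytes_per_s(gen)


gen4_x128 = link_gbytes_per_s(gen=4, lanes=128)
gen3_x96 = link_gbytes_per_s(gen=3, lanes=96)
print(f"128 lanes of Gen 4: {gen4_x128:.1f} GB/s per direction")
print(f" 96 lanes of Gen 3: {gen3_x96:.1f} GB/s per direction")
print(f"ratio: {gen4_x128 / gen3_x96:.2f}x")
```

Doubling the per-lane rate and adding a third more lanes gives roughly 2.7 times the raw I/O bandwidth on paper.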

Rome packs up to 64 dual-threaded Zen 2 cores, twice as many as the 14-nm Naples chip, which used first-generation Zen cores. Naples, Rome and the next-generation Milan will all fit into the same socket, so companies do not have to design new motherboards.

Overall, Rome delivers twice the throughput of Naples and four times its floating-point performance, said AMD. However, the company declined to give target data rates, specific benchmarks or other details about the chip, which is said to still be a working prototype and is slated to ship sometime in 2019.

At the same time that it is attacking Intel’s leading-edge Xeon server chips, AMD is providing a head-to-head alternative to Nvidia’s Volta for machine learning and commercial graphics.

“This industry needs competition,” said David Wang, a former AMD executive who returned a year ago to take over its graphics group after Intel hired away Raja Koduri to work on a GPU of its own, due in 2020.

The 7-nm AMD Vega packs 13.2 billion transistors. AMD said that, like the new Epyc, it delivers 25% more performance than its 14-nm predecessor. The high-end MI60 version for GPU computing has 64 compute units, 4,096 stream processors, up to 32 GBytes of HBM2 memory, and support for PCIe Gen 4.

The company took a different approach to AI than its rival Nvidia, which added dedicated matrix multiply-accumulate units (Tensor Cores) to its GPU. AMD added support in all of its compute units for formats ranging from 4- and 8-bit integers to 16-, 32- and 64-bit floating point, using mixed-precision math with 32-bit accumulators.

“We wanted a highly flexible accelerator, not one dedicated to FP16,” said Evan Groenke, an AMD senior product manager.
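Why the 32-bit accumulators matter: products of low-precision values add up quickly. The Python sketch below emulates an 8-bit integer dot product summed in a 16-bit versus a 32-bit two’s-complement register. It is a generic illustration of accumulator width, with made-up input values and illustrative function names, not a model of how Vega’s compute units work internally.

```python
def wrap_signed(value: int, bits: int) -> int:
    """Reduce value to a signed two's-complement integer of the given width."""
    value &= (1 << bits) - 1          # keep only the low `bits` bits
    if value >= 1 << (bits - 1):      # top bit set means the value is negative
        value -= 1 << bits
    return value


def int8_dot(a: list[int], b: list[int], acc_bits: int) -> int:
    """Dot product of two int8 vectors, accumulated in a signed acc_bits-wide register."""
    acc = 0
    for x, y in zip(a, b):
        if not (-128 <= x <= 127 and -128 <= y <= 127):
            raise ValueError("inputs must fit in int8")
        # Each int8 x int8 product fits in 16 bits; the running sum may not.
        acc = wrap_signed(acc + x * y, acc_bits)
    return acc


# Made-up worst-case inputs: 64 pairs of the largest positive int8 value.
a = [127] * 64
b = [127] * 64
exact = sum(x * y for x, y in zip(a, b))

print("exact sum:      ", exact)
print("16-bit register:", int8_dot(a, b, 16))  # overflows and wraps around
print("32-bit register:", int8_dot(a, b, 32))  # matches the exact sum
```

Even a short 64-element dot product overflows a 16-bit register, while a 32-bit accumulator holds the exact result, which is why low-precision multiplies are usually paired with wider accumulation.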

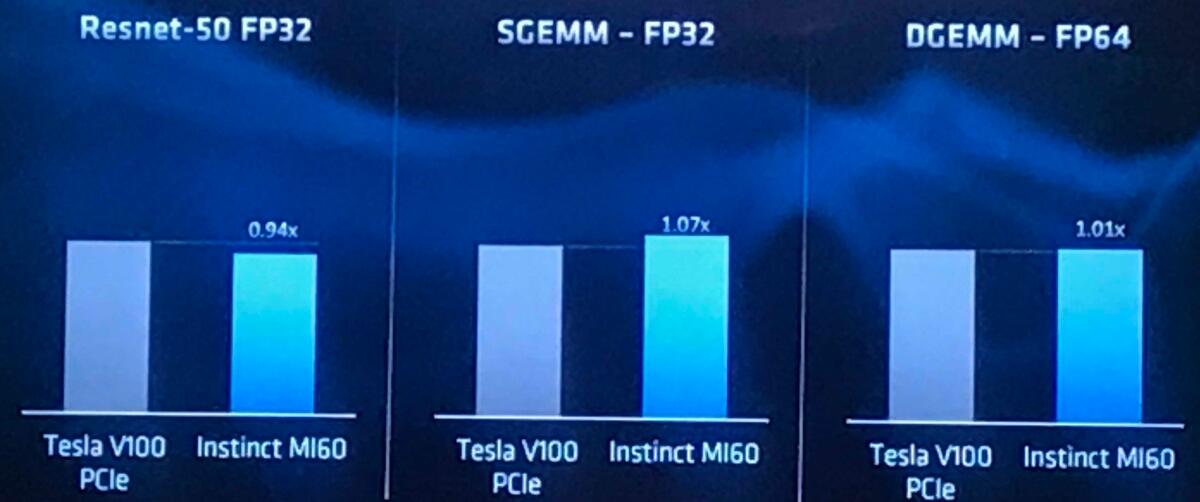

The result is a chip that generally comes within 7% of Volta’s performance before optimizations, using less than half the die area (331 mm² compared with 800+ mm²). “You don’t need large dedicated silicon blocks to get performance gains in machine learning,” said Groenke.

On average, the 7-nm Vega comes within 7% of Nvidia’s Volta in AI jobs.

Specifically, AMD said that Vega will deliver 29.5 teraflops of FP16 performance for AI training. For inference, it can hit 59 TOPS for 8-bit integer and 118 TOPS for 4-bit integer tasks.
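The three figures follow a simple pattern: each halving of operand width doubles peak throughput. A back-of-the-envelope check in Python is sketched below. It assumes a peak engine clock of about 1.8 GHz (not stated in this article), one fused multiply-add (two operations) per stream processor per cycle at FP32, and packed execution of two FP16, four INT8 or eight INT4 operations per lane per cycle; the packing factors and names are illustrative assumptions.

```python
STREAM_PROCESSORS = 4096   # 64 compute units, per AMD's MI60 figures
PEAK_CLOCK_HZ = 1.8e9      # assumption: peak engine clock, not given in the article
OPS_PER_FMA = 2            # a fused multiply-add counts as a multiply plus an add

# Assumed operations per lane per cycle, relative to one FP32 FMA.
PACKING = {"FP32": 1, "FP16": 2, "INT8": 4, "INT4": 8}


def peak_tera_ops(fmt: str) -> float:
    """Theoretical peak throughput in trillions of operations per second."""
    return STREAM_PROCESSORS * PEAK_CLOCK_HZ * OPS_PER_FMA * PACKING[fmt] / 1e12


for fmt in PACKING:
    print(f"{fmt}: {peak_tera_ops(fmt):.1f} trillion ops/s")
```

With those assumptions the FP16, INT8 and INT4 results land at roughly 29.5, 59 and 118 trillion operations per second, in line with AMD’s stated peaks; sustained throughput on real workloads will be lower.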

AMD also added hardware virtualization to the chip, so one 7-nm Vega can support up to 16 virtual machines, or a single virtual machine can split its work across more than eight GPUs.

“This is part of our differentiation, and it’s free,” said Wang. “There’s no licensing — it comes with the GPU.”

Cloud giants will get early versions of the MI60 cards before the end of the year. An MI50 version with roughly 10% less performance and up to 16 GBytes of HBM2 will be available before April.

Sales of the cards will depend on uptake of ROCm, the open-source software that AMD released for GPU computing. The company announced an updated version that supports more machine-learning frameworks and math libraries, as well as Docker and Kubernetes.

In a keynote, Papermaster sketched out improvements in the 7-nm version of the Zen x86 cores. He also said that versions for TSMC’s 7-nm-plus process are on track for delivery in 2020, probably as an upgrade similar to the 12-nm refresh that AMD gave its original Zen parts.

The Zen 2 cores sport an improved branch predictor, better instruction prefetching, a larger micro-op cache, and a re-optimized instruction cache. Floating-point registers and load/store units were doubled in width to 256 bits.

As a result, AMD claims that its 7-nm x86 chips will beat Intel’s 10-nm versions, now expected late next year, in both performance and time to market. The company also enhanced its Infinity Fabric, a cache-coherent processor link, although it declined to give details.

Separately, the Zen 2 cores implement hardware fixes for the Spectre side-channel attacks that AMD had previously patched in software. AMD’s chips were not vulnerable to the Meltdown or Foreshadow attacks, said Papermaster.

AMD is already gaining momentum in the data center, where it had virtually no presence two years ago. Its Epyc is now used by Baidu, Dropbox, Microsoft Azure, Oracle, and Tencent. Alibaba, Baidu, and Microsoft are using AMD’s GPUs.

Lisa Su shows off a 7-nm Epyc that will ship sometime next year.

“Our momentum is clearly there,” said Lisa Su, pegging the data center as a $29 billion total available market.

Amazon became its latest and most high-profile customer, with AWS announcing plans for M5a, R5a, and T3a instances based on Epyc. “We want to support every workload out there, and AMD is one of the things our customers are interested in,” said Matt Garman, vice president of compute services at AWS.

Intel was quick to respond that its Xeon chips make up the vast majority of all AWS instances, including 54 services based on its latest Skylake chips.

“The world’s largest cloud provider offering Epyc is the biggest news of the day,” said analyst Moorhead. “This is a testament to Epyc’s capabilities,” he said, adding that Intel’s quick response is a sign that competition is on in the x86 space again.

— Rick Merritt, Silicon Valley Bureau Chief, EE Times
