Renesas to Push Dynamically Reconfigurable Processors

Article by: Junko Yoshida, EE Times

Proposes “real-time continuous AI” instead of statistical AI to ease the industry’s transition to the AI age

TOKYO — Every company that has pledged its faith to “smart manufacturing” is pinning its hopes on AI.

This brave new world requires a big investment in high-cost AI systems, along with the cost of setting up a “learning” platform and contracting with cloud service providers. The grand plan starts with big-data collection so that the machine can learn and figure out something previously unknown.

That’s the theory.

In the real world, however, many companies are finding AI hard to implement. Some blame their inexperience with AI, or a shortage of in-house data scientists capable of making the most of it. Others complain that they have not been able to complete a proof of concept for their installed AI systems. In any case, manufacturers are beginning to realize that AI is not an “if you build it, they will come” deal.

Enter Renesas Electronics.

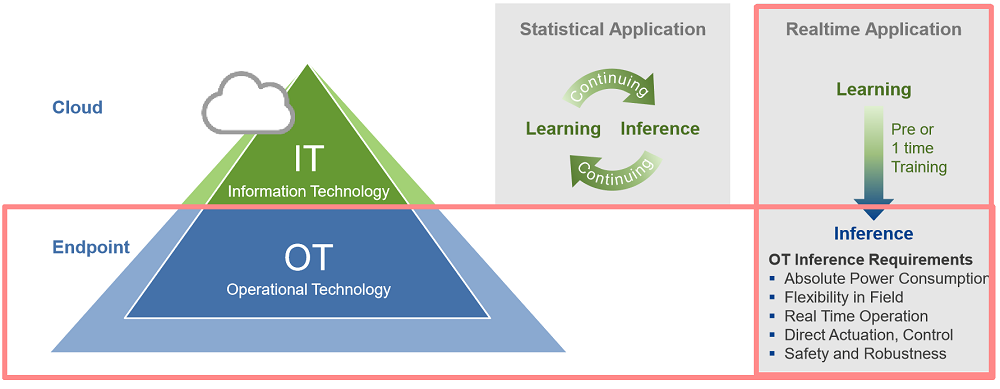

The Japanese chip company claims a leading position in the global factory automation market. It is proposing “real-time continuous AI” for the world of operational technology (OT). This approach contrasts sharply with “statistical AI,” often pitched by big data companies to promote automation in the world of information technology (IT).

Statistical AI for IT vs. continuous AI for OT (Source: Renesas)

Yoshikazu Yokota, executive vice president and general manager of the Industrial Solution Business Unit at Renesas, told EE Times that embedded AI is critical for fault detection and predictive maintenance in OT. When an anomaly pops up in a given system or process, embedded AI can “make decisions locally and real time,” he explained. Renesas proposed the idea of “AI at endpoints” three years ago and began experimenting with it in its own Naka semiconductor fab.

“Our plan is to enable real-time inference in OT, while incrementally increasing AI capabilities at endpoints,” said Yokota.

By bringing AI to factory floors in baby steps, Renesas hopes to help customers that are struggling to complete a proof of concept for their own AI implementations and to understand their return on investment in AI.

When to apply AI to OT

Mitsuo Baba, senior director of the strategy and planning division of Renesas’ Industrial Solution business unit, told us that AI can be best applied to OT when specific issues — in production lines for example — are already identified.

For example, suppose there is a highly skilled operational manager who is experienced enough to detect certain anomalies in a factory. Instead of sending this manager to check out every stage of the manufacturing process, “We could use AI to draw the line — and define — when and where an abnormal situation begins to emerge during the production defects,” said Baba. AI could be the watchful eye monitoring the production line continuously, keeping small product defects from advancing to the next stage of production.

In such a factory automation example, AI needs to be trained only once, based on pre-identified issues. AI inference then runs on endpoint devices in real time, without going back to the cloud. Baba said 30 Kbytes of data is usually enough for endpoint inference, whereas statistical AI, which does both learning and inference in the cloud, typically must process as much as 300 Mbytes of data.

In short, Renesas is advocating AI inference that can be done on an MCU.

Rather than replacing existing production lines with costly, brand-new AI-enabled machines, Renesas is proposing an “AI Unit Solution” kit that can be attached to current production equipment.

Baba said Renesas has no plans to challenge AI chip companies like Nvidia. “Our goal is to lead a new market segment of embedded AI, in which data required for inference is so small that it can even run on existing MCU/MPU,” Baba said.

AI roadmap

Pitching MCU-based “embedded AI” (or “e-AI”) is a smart move. The strategy plays to Renesas’ strength. Renesas dominates factory floors, building infrastructure, and home appliances with its microcontrollers, SoCs and microprocessors.

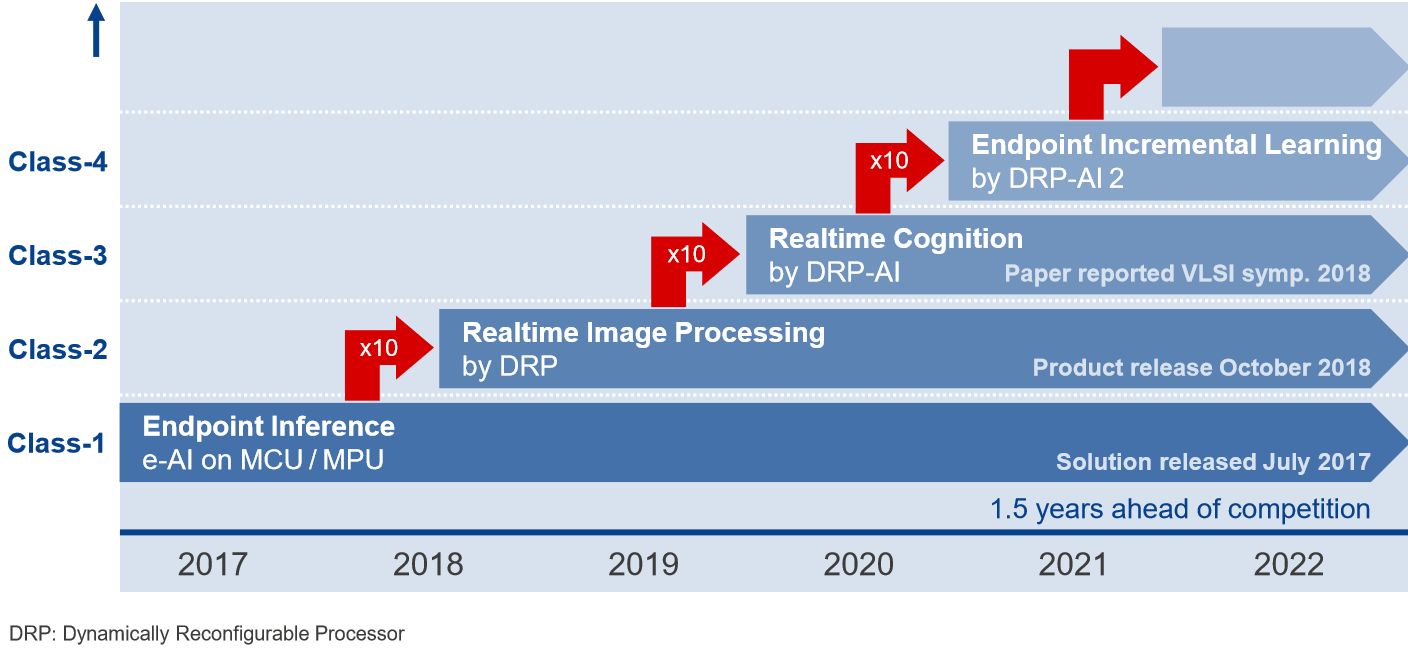

Renesas is also cognizant that endpoint inference on MCU/MPU will eventually need more complex AI processing, prompting customers to demand a roadmap for enhanced e-AI.

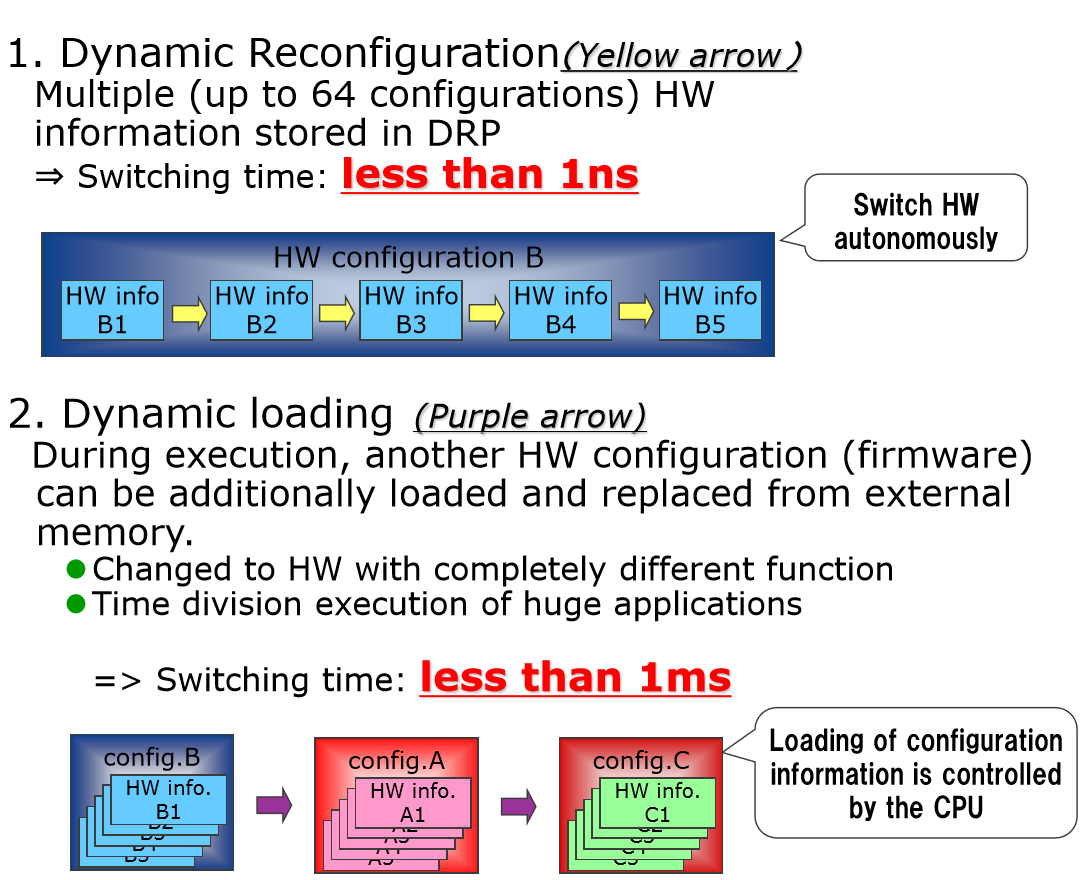

Renesas is announcing next month what the company calls DRP — a dynamically reconfigurable processor — as an AI accelerator that works in tandem with its own MCU.

Renesas’ roadmap for e-AI capability enhanced by DRP (Source: Renesas)

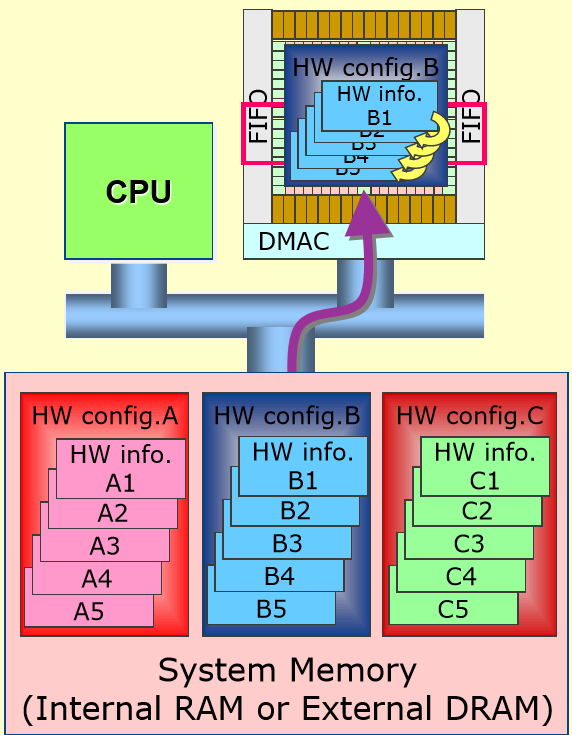

DRP, Renesas’ home-grown processor, isn’t necessarily new. Originally designed as programmable hardware, it has been used in Renesas’ custom SoCs for products such as professional video equipment and digital cameras. When an SoC must run applications that change constantly, Baba said, DRP’s flexibility comes in handy, because software modifications prompt the hardware to reconfigure the processing elements on the DRP. “Designers can write software in C language. Then Renesas’ dedicated compiler maps hardware resources accordingly,” he explained.

He noted that similar attempts at dynamically reconfigurable processors have been made by DARPA and at least one FPGA company, Xilinx.

With market interest in AI growing rapidly, Renesas sees DRP as a perfect fit for an AI accelerator. Better still, the same DRP can run different AI inference tasks dynamically via time-sharing, switching software on reconfigured hardware.

Renesas plans to integrate DRP as a core inside an MCU. The company’s e-AI roadmap enhanced by DRP covers a broad range of AI processing, starting with real-time image processing (scheduled to launch in October), real-time cognition (2020) and endpoint incremental learning (2022).

The concept of embedded AI is expected to spread throughout the industry. Earlier this year during the Mobile World Congress, STMicroelectronics presenters described machine learning as a key to “distributed intelligence” in the embedded world. A day will come, said ST, when a network of tiny MCUs is smart enough to detect wear and tear in machines on the factory floor or find anomalies in a building, without necessarily reporting sensor readings back to data centers.

At that time, ST demonstrated three AI solutions: a neural network converter and code generator called STM32 CubeMX.AI, ST’s own Deep Learning SoC (codenamed Orlando V1), and a neural network hardware accelerator (currently under development using an FPGA) that can be eventually integrated into the STM32 microcontroller.

Fully aware of the competitive landscape, Renesas’ Baba spoke confidently. With Renesas already rolling out e-AI products, “We think we have at least a 1.5-year lead,” Baba said.

Notably, Renesas isn’t just providing e-AI hardware. For fault detection and predictive maintenance, Renesas also provides tools, e-AI translator and e-AI checker, that convert pre-trained neural network models built with open-source frameworks such as Caffe and TensorFlow into a form that can run inference on Renesas MCUs and accelerators.

Renesas boasted that it can adapt neural network models in which learning has been completed “in a period as short as a single day, and the sequence of AI processing, from sensor data collection to data processing, analysis, and evaluation/judgment, can be performed in real time.”

Endpoint vs. Edge

The notion of “moving AI to the edge” is gaining popularity. Yohann Tschudi, technology and market analyst of the Semiconductor & Software division at Yole Développement, recently explored in a report whether the semiconductor content of AI systems will be based in the cloud, in the system, or at the device level.

He wrote:

While it was possible to calculate the weights of neurons in a huge network with a graphics processing unit (GPU), it was necessary to create accelerators, more commonly called neural engines, to make these millions of calculations possible on portable devices.

Here, Tschudi is referencing AI in portable devices, typically defined as “edge devices.” But what about AI on “endpoint devices”? How is an endpoint different from the edge?

While edge devices such as smartphones are designed to connect directly to external network infrastructure, endpoint devices often exist in a closed, internal network, Baba explained. While edge devices belong to the IT world, endpoint devices are designed for deeply embedded OT. Requirements for embedded AI in OT are:

- It must control systems in real time

- It must offer safety and robustness

- It must be extremely low power

Embedded AI is particularly powerful in tasks such as continuous monitoring, which runs around the clock; hence, low power is a must, Baba explained. More important, however, Renesas hopes to see embedded AI move into non-electrical systems. “Imagine a water faucet,” said Baba. “If e-AI is inside the faucet, it can automatically control types of water streams, volume and temperature depending on what one is washing under the running water.”

Is e-AI different from traditional machine vision?

If the task of e-AI on production lines were simply to classify items as defective or not, wouldn’t it be doing the same thing traditional machine vision has always done? Machine vision provides imaging-based automatic inspection and analysis for process control, robot guidance and other inspections in industrial applications.

Not quite, said Baba.

E-AI offers intelligence based on fused multi-modal sensor data. Consider motors, for example. Before a motor breaks down completely, it shows early signs. While vision can detect some of these signs, high-frequency sounds inaudible to humans are often better clues. When a screw gets loose, vibration ensues. Accelerometers can sense this, although vibrations can vary widely.

To be effective, AI must sort out and learn the nuances of captured sensor data, while the inference engine at the endpoint diagnoses what’s going on and takes appropriate action in real time.

GE to Adopt Renesas AI Unit solutions

Every chip company pitching AI solutions in smart factories is reportedly carrying out its own proof of concept. Renesas, too, ran a two-year AI pilot program at its Naka fab. Under the program, Renesas engineers attached an AI unit to semiconductor manufacturing equipment on the factory floor. It collected data at 20x speed and ran AI analysis at the endpoint without connecting to a wide-area network or going back to the cloud.

By adding an e-AI solution to the fault detection and classification systems it has been using for years, Renesas now claims that it improved the precision of anomaly detection six-fold.

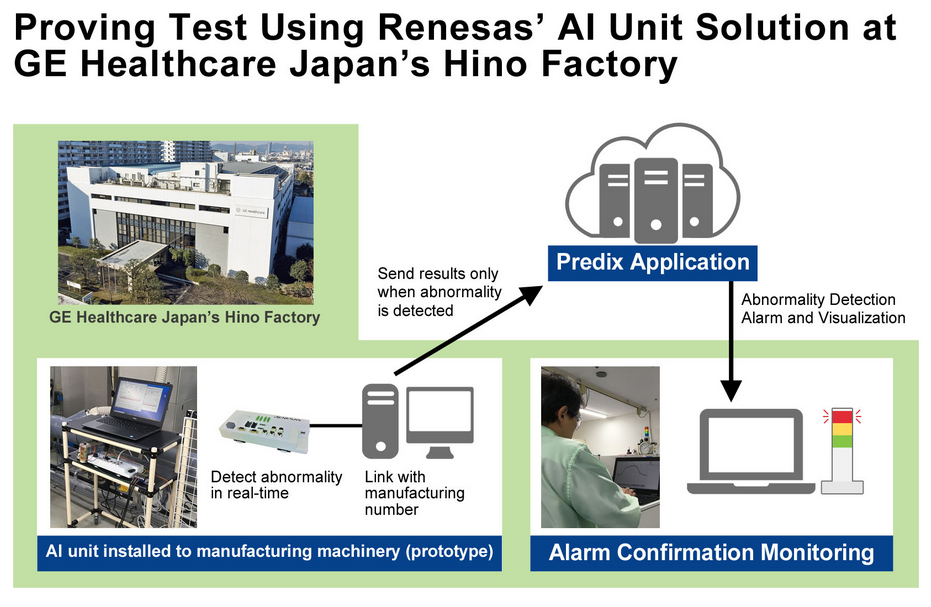

Upon hearing about the case study at Renesas’ Naka fab, GE Healthcare Japan’s Hino factory adopted Renesas’ AI unit solution to test its efficacy.

Systems developed for fault detection and predictive maintenance were installed on existing facility equipment or machinery as add-on AI units. GE reported that it cut overall product defects by 65 percent where the test was applied.

“Many of the medical equipment products manufactured at this site are expensive devices, therefore improving product yield by reducing unsatisfactory products is a constant theme for improvement,” said Kozaburo Fujimoto, Hino factory manager for GE Healthcare Japan, in a statement. Until now, defects and problems were “detected by the experience and intuition of the workers on the factory floor,” he added.

— Junko Yoshida, Global Co-Editor-In-Chief, AspenCore Media, Chief International Correspondent, EE Times
