NVIDIA Steps Up Ray Tracing Game

Article by: Kevin Krewell, Principal Analyst, Tirias Research

The massive Turing GPU, paired with GDDR6 memory, promises enough power for real-time ray tracing

The history of real-time graphics used in video games and interactive media is a history of compromises.

The goal of graphics vendors has been to create images as realistic as possible within a frame time (nominally 1/30th of a second). But when it comes to truly realistic images, the gold standard has been ray tracing, in which computers model the paths of light rays through a scene as they bounce off surfaces, picking up each surface’s color and texture.
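To make the idea concrete, here is a minimal Python sketch of the core ray-tracing step: cast one ray, find where it hits a sphere, and shade the hit point by how directly it faces a light (simple Lambertian shading). The names (`Vec`, `hit_sphere`, `trace`) and the single-sphere scene are illustrative assumptions, not anything from Nvidia. A production renderer would also follow reflected, refracted, and shadow rays and would test scenes made of millions of triangles rather than one sphere.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Color = Tuple[float, float, float]


@dataclass(frozen=True)
class Vec:
    """Minimal 3D vector (illustrative helper, not a library type)."""
    x: float
    y: float
    z: float

    def __add__(self, o: "Vec") -> "Vec":
        return Vec(self.x + o.x, self.y + o.y, self.z + o.z)

    def __sub__(self, o: "Vec") -> "Vec":
        return Vec(self.x - o.x, self.y - o.y, self.z - o.z)

    def __mul__(self, s: float) -> "Vec":
        return Vec(self.x * s, self.y * s, self.z * s)

    def dot(self, o: "Vec") -> float:
        return self.x * o.x + self.y * o.y + self.z * o.z

    def normalized(self) -> "Vec":
        length = math.sqrt(self.dot(self))
        return Vec(self.x / length, self.y / length, self.z / length)


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to the nearest sphere hit in front of the origin, or None."""
    # Solve |origin + t*direction - center|^2 = radius^2 for t (a quadratic with a = 1).
    oc = origin - center
    half_b = oc.dot(direction)
    c = oc.dot(oc) - radius * radius
    disc = half_b * half_b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-half_b - root, -half_b + root):  # near root first
        if t > 1e-6:  # ignore hits behind (or at) the ray origin
            return t
    return None


def trace(origin: Vec, direction: Vec, center: Vec, radius: float,
          surface: Color, light_dir: Vec, background: Color) -> Color:
    """Color seen along one primary ray: background on a miss, Lambert-shaded surface on a hit."""
    t = hit_sphere(origin, direction, center, radius)
    if t is None:
        return background
    hit = origin + direction * t
    normal = (hit - center).normalized()
    # Lambertian term: surfaces facing the (unit-length) light direction are brightest.
    intensity = max(0.0, normal.dot(light_dir))
    return (surface[0] * intensity, surface[1] * intensity, surface[2] * intensity)


# One ray straight down -z toward a red sphere 5 units away, lit from the camera side.
print(trace(Vec(0, 0, 0), Vec(0, 0, -1), Vec(0, 0, -5), 1.0,
            (1.0, 0.2, 0.2), Vec(0, 0, 1), (0.0, 0.0, 0.0)))
# prints (1.0, 0.2, 0.2)
```

A full frame repeats this for every pixel, and for every bounce of every ray, which is why high-quality offline rendering takes so long.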

For a high-resolution, complex scene with many rays per surface point, each frame can take hours to render fully. This is how movie studios render the computer-generated imagery (CGI) used in digital special effects.

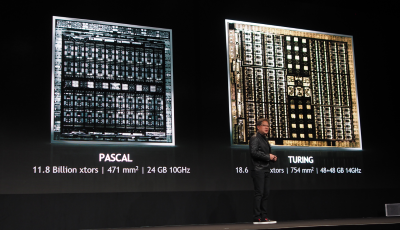

Today, most of that rendering takes place in server farms filled with Intel Xeon processors. At this year’s SIGGRAPH (the annual technical conference on computer graphics), Nvidia may have changed all that. The company introduced its new Turing architecture, which includes dedicated ray-tracing engines (RT cores) that Nvidia says speed up ray tracing by a factor of six over its existing Pascal GPU. Nvidia rates Turing at 10 billion rays per second (10 giga-rays/s), fast enough to make real-time ray tracing possible.
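A quick back-of-the-envelope calculation shows what a 10 giga-rays/s rating buys at the nominal 1/30-second frame time. This Python sketch assumes the peak rating is sustained and that rays are spread evenly across pixels, both optimistic assumptions; real scenes spend many rays on shadows, reflections, and secondary bounces. The function and constant names are hypothetical.

```python
def rays_per_pixel(rays_per_second: float, fps: float, width: int, height: int) -> float:
    """Average rays available per pixel per frame if throughput is spread evenly."""
    rays_per_frame = rays_per_second / fps
    return rays_per_frame / (width * height)


RATED_RAYS_PER_SECOND = 10e9  # Nvidia's 10 giga-rays/s rating, assumed sustained
FPS = 30                      # the nominal 1/30 s frame time

for name, (w, h) in {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}.items():
    print(f"{name}: {rays_per_pixel(RATED_RAYS_PER_SECOND, FPS, w, h):.1f} rays per pixel per frame")
# prints:
# 1080p: 160.8 rays per pixel per frame
# 4K UHD: 40.2 rays per pixel per frame
```

Roughly 40 rays per pixel at 4K leaves room for only a few bounces and samples per pixel, which helps explain why Turing leans on denoising and upscaling to reach real-time frame rates.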

The Turing architecture has many new features, and it uses the same 12nm process that Nvidia used for its Volta machine-learning GPU. The main compute cores in Turing have a dual pipeline and a shared L1 cache. The top-of-the-line Quadro RTX 8000 can process 16 trillion floating-point operations per second (16 TFLOPS) in parallel with 16 trillion integer operations per second. Turing also inherits the Tensor Core machine-learning capabilities from Volta. So far, Nvidia has revealed only performance numbers, not architectural details. I expect those details will come with the launch of the consumer version of Turing, sometime soon.

Turing will be the first GPU to use the new, faster GDDR6 memory, with a frame buffer of up to 48GB. In addition, two Quadro RTX cards can be connected with a special NVLink bridge that gives both cards coherent access to their combined video memory. With two cards, total local memory can reach 96GB.

Supporting larger local memory is important for rendering the large scenes found in movies and complex CAD designs. In the past, professional movie renderers couldn’t use graphics cards because their frame buffers were relatively small. Now, with up to 96GB of accessible local memory, it should be possible to fit the vast majority of rendered scenes. A number of professional movie renderers will be adding Nvidia Quadro RTX support.

The key to Turing’s ray-tracing capabilities is the combination of all these technologies. The RT cores allow more rays to be cast in a scene, but the result still needs to be “de-noised” for viewing. Turing can use a couple of techniques, including a machine-learning algorithm, to denoise the scene fast enough for real-time playback. To make ray tracing run in real time, Nvidia also rendered a lower-resolution version of a scene and then upscaled it to full resolution using another machine-learning technique.
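Turing’s real-time pipeline uses machine-learning techniques for both of these steps. The Python sketch below swaps in two deliberately simple, non-ML stand-ins (a 3x3 box filter for denoising and nearest-neighbor upscaling) purely to show the shape of the pipeline: render a noisy, lower-resolution image, smooth it, then scale it to full resolution. All names here (`box_denoise`, `upscale_nearest`, `noisy_low_res`) are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image, row-major


def box_denoise(img: Image) -> Image:
    """3x3 mean filter; border pixels average only the neighbors that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(window) / len(window)
    return out


def upscale_nearest(img: Image, factor: int) -> Image:
    """Nearest-neighbor upscale: each source pixel becomes a factor x factor block."""
    return [[img[y // factor][x // factor] for x in range(len(img[0]) * factor)]
            for y in range(len(img) * factor)]


# Hypothetical pipeline: noisy low-resolution render -> denoise -> upscale to display size.
noisy_low_res = [[1.0, 0.0],
                 [0.0, 1.0]]  # stand-in for a sparsely sampled, speckled render
final = upscale_nearest(box_denoise(noisy_low_res), 2)
for row in final:
    print(row)  # each of the four rows is [0.5, 0.5, 0.5, 0.5]
```

A box filter blurs edges along with the noise; learned denoisers and upscalers aim to remove noise and add resolution while keeping edges sharp.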

The chip itself is quite an accomplishment. At 754mm², it’s the second-largest GPU (only the 815mm² Volta is larger), with 18.6 billion transistors. The new chip will support 8K displays. Nvidia will be launching products in the third quarter.

There will be three Turing-based cards: the Quadro RTX 5000 ($2,300), with 16GB of GDDR6 memory, rated at 6 giga-rays/s; the Quadro RTX 6000 ($6,300), with 24GB of GDDR6 memory, rated at 10 giga-rays/s; and the top-of-the-line Quadro RTX 8000 ($10,000), with 48GB of GDDR6 memory, rated at 10 giga-rays/s. These cards are designed for professional workstations and render farms. Nvidia is also likely to roll out consumer versions of Turing sometime in the third quarter.

— Kevin Krewell is a principal analyst at Tirias Research.
